Abstract

Objective

Breast cancer is one of the most common cancers in women, and early detection and treatment lead to better patient outcomes. Ultrasound (US) imaging plays a crucial role in the early detection of breast cancer because it is a cost-effective, convenient, and safe diagnostic approach. Much research has been conducted to enable reliable and effective early diagnosis of breast cancer through US image analysis. Recently, machine learning technologies such as deep learning (DL) have advanced automated lesion segmentation and classification for identifying malignant masses in breast US images, and computer-aided diagnosis (CAD) technology is being applied effectively in clinics. Herein, we propose a novel deep learning-based “segmentation + classification” model based on B-mode and strain elastography (SE)-mode images.

Methods

For the segmentation task, we propose a Multi-Modal Fusion U-Net (MMF-U-Net), which segments lesions by mixing B- and SE-mode information through fusion blocks. After segmentation, the lesion area in the B- and SE-mode images is cropped using the predicted segmentation mask. The encoder part of the pretrained MMF-U-Net is then applied to the cropped B- and SE-mode breast US images to classify lesions as benign or malignant.

Results

The proposed method achieved strong segmentation and classification performance on real-world clinical data. Using MMF-U-Net, the Dice score, intersection over union (IoU), precision, and recall were 78.23%, 68.60%, 82.21%, and 80.58%, respectively. The classification accuracy was 98.46%.

Conclusion

Our results show that the proposed method effectively segments the breast lesion area and reliably distinguishes benign from malignant lesions.

Introduction

Breast cancer is a pressing issue for women’s health, and its incidence remains high worldwide [ ]. Early diagnosis and treatment are key to addressing breast cancer. Ultrasound (US) imaging is widely used for early breast cancer screening in women because it is safe, non-invasive, and effective in detecting lesions [ ]. US B-mode imaging provides excellent resolution and contrast, enabling detailed visualization of breast tissue, and has been used to image benign and malignant lesions such as tubal lesions, tubal cysts, and breast inflammation [ ]. Many researchers [ ] have used B-mode US to differentiate between benign and malignant lesions for appropriate patient classification. Computer-aided diagnosis (CAD) assists radiologists in image interpretation and diagnosis by providing a second, objective opinion. Recently, CAD research has been conducted using artificial neural networks and US images, and most of these studies [ ] used B-mode US images to classify lesions. For example, Daoud et al. [ ] fine-tuned a Visual Geometry Group (VGG)-19 network [ ] pretrained on ImageNet to classify a B-mode US dataset. To increase the accuracy of B-mode classification, Kalafi et al. [ ] trained a VGG network with two losses: cross entropy (CE) and log hyperbolic cosine (LogCosh). Jabeen et al. [ ] extracted features with a pretrained DarkNet [ ], selected the best features through two different optimizations (differential evolution and binary gray wolf optimization), and performed the final classification after fusing the selected features into one feature vector.

While B-mode images have been beneficial, accurate benign/malignant classification may be difficult if the shape or boundary of the tumor in the input image is ambiguous [ ]. Methods combining segmentation and classification have therefore been proposed to increase accuracy. For example, Yang et al. [ ] predicted the lesion area in B-mode images with an attention U-Net [ ] and cropped the predicted lesion area before classification. Because benign lesions tend to have a regular, circular shape whereas malignant lesions tend to be irregular [ ], Inan et al. [ ] exploited this prior knowledge: they predicted lesion masks with a modified U-Net and supplied these predicted binary masks to a classification network, which further improved accuracy.

However, the classification methods mentioned above rely heavily on the accuracy of the segmentation network. If the segmentation network predicts an incorrect lesion area, the subsequent classification network is trained on erroneous information, and its outputs will be inaccurate. A multi-task strategy that performs segmentation and classification simultaneously has therefore been preferred. Chowdary et al. [ ] performed segmentation and classification simultaneously with a residual U-Net [ ]. The feature maps extracted by the encoder were fed into two branches: a decoder branch for segmentation and a branch of fully connected layers for classification. By training the two tasks simultaneously, the encoder can learn to associate the class and shape of a lesion and capture general characteristics of the lesion data. However, the two tasks have different goals: ‘discrimination between benign and malignant lesions’ for classification and ‘differentiation of the lesion area from the background’ for segmentation. Thus, when both tasks are trained simultaneously, the encoder may become biased toward one of them during training.

All the methods mentioned above mainly used B-mode images to segment and classify lesions. Although B-mode images are widely used, they provide only morphologic information about the lesion. To improve diagnostic accuracy, newer ultrasound modes such as strain elastography (SE) and shear wave elastography (SWE) have been introduced to provide information about tissue stiffness [ , ]. SE-mode visualizes tissue stiffness by imaging tissue deformation under repeated compression, and SWE-mode visualizes stiffness by measuring the propagation of shear waves through tissue [ ]. In general, malignant lesions are stiffer than benign ones: in SE-mode, malignant lesions exhibit lower strain than benign lesions, while in SWE-mode, they show faster shear wave propagation [ ]. Therefore, combining structural information from B-mode with tissue stiffness information from SE/SWE-mode is expected to yield a more accurate lesion diagnosis. Several studies have demonstrated that integrating SWE with B-mode breast ultrasound significantly enhances diagnostic performance. For instance, Berg et al. [ ] showed that adding SWE to B-mode ultrasound improved specificity and positive predictive value, making it a valuable tool for distinguishing benign from malignant lesions. Similarly, Chang et al. [ ] reported that combining SWE and B-mode ultrasound increased diagnostic accuracy and reduced unnecessary biopsies in breast lesion evaluation. Recent deep learning methods [ ] incorporate multimodal information, combining data from different modes to enhance diagnosis. Gong et al. [ ] combined B- and SE-mode breast US images to differentiate benign from malignant tumors. They used two deep neural networks (DNNs) to extract features from each mode, integrated these features into one vector, and predicted the final class with another DNN. Because it performed only classification, this network could not exploit overall spatial information. Misra et al. [ ] performed classification with a convolutional neural network (CNN) using B- and SE-mode images in which the lesion area had been cropped into a rectangle. Similarly, Zhang et al. [ ] segmented regions of interest in B- and SWE-mode images and performed classification with a CNN. In both approaches, the cancer lesion was manually identified and cropped according to a radiologist’s guidance (manual segmentation).
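The stiffness argument in the paragraph above can be made concrete with two textbook relations, shown here as a worked illustration rather than as part of the proposed method. Under a given stress σ, strain ε is inversely proportional to Young’s modulus E, so a stiffer lesion deforms less in SE-mode; because the applied stress is not measured in SE, the displayed strain is relative. For SWE, assuming a linear elastic, isotropic, nearly incompressible medium (Poisson’s ratio ν ≈ 0.5) with density ρ ≈ 1000 kg/m³, E is estimated from the shear wave speed c_s. The two speeds below are illustrative values, not measurements from this study.

```latex
\begin{align}
\varepsilon &= \frac{\Delta L}{L} = \frac{\sigma}{E}
  && \text{same } \sigma:\ E_1 > E_2 \;\Rightarrow\; \varepsilon_1 < \varepsilon_2 \\
E &= 2\mu(1+\nu) \approx 3\mu = 3\rho c_s^{2}
  && \nu \approx 0.5,\ \mu = \rho c_s^{2} \\
c_s = 2\ \mathrm{m/s}:\quad E &\approx 3 \times 1000 \times 2^{2}\ \mathrm{Pa} = 12\ \mathrm{kPa} \\
c_s = 6\ \mathrm{m/s}:\quad E &\approx 3 \times 1000 \times 6^{2}\ \mathrm{Pa} = 108\ \mathrm{kPa}
\end{align}
```

Because E grows with the square of c_s, tripling the shear wave speed raises the estimated stiffness ninefold, which is why faster propagation in SWE-mode and lower strain in SE-mode both point toward stiffer, more suspicious tissue.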

Manually segmenting lesions is tedious and time-consuming, especially for the large datasets required in deep learning studies. Thus, in subsequent work, Misra et al. [ ] proposed a method that automatically segments cancer regions using both B- and SE-mode US images and then classifies lesions using the segmentation results. They used four encoders to learn from the B- and SE-mode images and performed segmentation through a decoder by applying a weight to each encoder; these four encoder weights were learned automatically through ResNet18 [ ]. Classification was then performed as a follow-up task on images cropped using the segmentation masks. In the classification task, features with the same structure were extracted from the B-mode and SE-mode images by separate backbone networks and then combined by a decision network of fully connected layers to predict the final class. However, because segmentation was trained with a separate encoder for each channel, the model requires a large amount of memory. In addition, because the classification task used a separate backbone for each modality, the information in the two modalities was not exploited jointly. Huang et al. [ ] performed classification with a single ResNet18 [ ] whose weights were shared across four US modes: B-mode, SE-mode, SWE-mode, and Doppler. However, because the weights were shared across multiple modes, the network cannot learn the unique characteristics of each mode well.

This paper proposes a “segmentation + classification” method that uses both B- and SE-mode breast US images. As shown in Figure 1, we first perform segmentation and then classification. We propose a multi-modal segmentation model named Multi-Modal Fusion U-Net (MMF-U-Net) based on the U-Net structure [ ]. The B- and SE-mode images are input to two separate encoders that share the same structure but differ in the number of input channels, so each encoder learns the characteristics specific to its mode. After segmentation, the input images are cropped according to the predicted mask and fed to the encoder part of MMF-U-Net for classification. Most existing US classification methods fine-tune the classification network from ImageNet [ ] pretrained weights. To improve accuracy on US images, we instead fine-tune the classification network from the pretrained weights of MMF-U-Net, the upstream segmentation network. The main contributions of our work are summarized below:

1. We introduce a fusion module that mixes information from different modalities. The mixed information is passed to the B-mode encoder to supplement the morphologic information of B-mode with stiffness information.

2. We propose MMF-U-Net, which uses multi-modality images to effectively analyze lesions with ambiguous boundaries.

3. We design a model that performs segmentation and classification with far fewer trainable parameters than existing methods.
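Fine-tuning the classifier from MMF-U-Net weights instead of ImageNet weights amounts to copying the encoder parameters of the trained segmentation network into the classifier before training. The plain-Python sketch below illustrates this over name-to-value dictionaries. The parameter-name prefixes (`encoder_b.`, `encoder_se.`, `fusion.`, `decoder.`, `head.`) are hypothetical, and treating the fusion blocks as part of the encoder is an assumption. In PyTorch, a filtered dictionary like this would typically be passed to `load_state_dict(..., strict=False)`.

```python
# Hypothetical parameter-name prefixes; real names depend on the implementation.
ENCODER_PREFIXES = ("encoder_b.", "encoder_se.", "fusion.")

def encoder_weights(seg_state, prefixes=ENCODER_PREFIXES):
    """Keep only the segmentation-model parameters that belong to the encoder."""
    return {name: value for name, value in seg_state.items() if name.startswith(prefixes)}

def init_classifier(clf_state, seg_state):
    """Copy matching encoder parameters into the classifier; report what stays untransferred."""
    transferred = encoder_weights(seg_state)
    new_state = dict(clf_state)
    for name, value in transferred.items():
        if name in new_state:
            new_state[name] = value
    not_transferred = [name for name in clf_state if name not in transferred]
    return new_state, not_transferred

seg_state = {
    "encoder_b.block1.conv.weight": [0.1],
    "encoder_se.block1.conv.weight": [0.2],
    "fusion.block1.conv.weight": [0.3],
    "decoder.up1.weight": [0.4],      # decoder is not reused for classification
}
clf_state = {
    "encoder_b.block1.conv.weight": [0.0],
    "encoder_se.block1.conv.weight": [0.0],
    "fusion.block1.conv.weight": [0.0],
    "head.fc.weight": [0.0],          # new classification head, trained from scratch
}
new_state, fresh = init_classifier(clf_state, seg_state)
print(fresh)
```

Only the classification head starts from scratch; everything upstream of it begins from weights that have already learned B- and SE-mode lesion features during segmentation.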

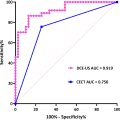

The DL-based approach improves upon traditional methods by automatically learning complex patterns and integrating multi-modal features, which enhances diagnostic accuracy. It also reduces inter-operator variability, offering more consistent and objective evaluations than conventional approaches. In particular, our proposed MMF-U-Net achieved a Dice score of 79.23% ± 0.28% and an intersection over union (IoU) of 68.60% ± 0.48%. Additionally, when the encoder part with the same structure was used as the feature extractor for classification, the diagnostic accuracy was 98.46% ± 3.08%, and all patients with malignant lesions were correctly diagnosed. We present our methodology, results, and discussion in the following sections.
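For reference, the segmentation metrics reported in this paper (Dice, IoU, precision, and recall) can all be computed from pixel-wise true positives (TP), false positives (FP), and false negatives (FN) between a predicted mask and its ground truth. The sketch below is a minimal pure-Python version for flattened binary masks; how scores are aggregated over images is not stated in this section, so computing them per image is an assumption. For a single image, Dice = 2·IoU/(1 + IoU), but this identity does not hold exactly for scores averaged over many images.

```python
def segmentation_metrics(pred, target, eps=1e-8):
    """Dice, IoU, precision and recall for two flattened binary masks (0/1 values)."""
    tp = sum(1 for p, t in zip(pred, target) if p and t)        # lesion predicted as lesion
    fp = sum(1 for p, t in zip(pred, target) if p and not t)    # background predicted as lesion
    fn = sum(1 for p, t in zip(pred, target) if t and not p)    # lesion missed
    return {
        "dice": 2 * tp / (2 * tp + fp + fn + eps),
        "iou": tp / (tp + fp + fn + eps),
        "precision": tp / (tp + fp + eps),
        "recall": tp / (tp + fn + eps),
    }

pred = [1, 1, 1, 0, 0, 0]
target = [0, 1, 1, 1, 0, 0]
m = segmentation_metrics(pred, target)
print({name: round(value, 4) for name, value in m.items()})
```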

Methodology

Dataset

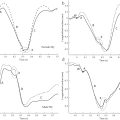

The dataset used in this study was obtained from clinical breast ultrasound examinations performed between August 2016 and February 2017 at Western Reserve Health Education, Youngstown, Ohio, USA. The study was approved by the Institutional Review Board (IRB) and complied with the regulations of the Health Insurance Portability and Accountability Act (HIPAA). Images were acquired with Hitachi’s HI-VISION Ascendus system using an 18–5 MHz linear EUP-L75 probe and consist of paired B-mode and SE-mode images. Although SE is known to be operator-dependent, we minimized variability by using a standardized imaging protocol and having experienced sonographers acquire the data. Despite its limitations, SE remains a feasible option in clinical practice because of its lower cost and compatibility with standard ultrasound equipment, making it a practical alternative to SWE in many settings. A total of 73 patients participated, and 211 sets of dual-modal ultrasound images were collected: 113 benign sets from 37 patients and 98 malignant sets from 36 patients. Most patients have two to four pairs of B-mode and strain images, with some having only one pair and others up to six or seven pairs. Detailed information on the number of images per patient is provided in the supplementary material (Supplementary Table 1). The ground truth segmentation labels were generated manually: a first expert contoured the lesion boundaries in detail, and a second expert then reviewed and edited these contours as necessary. Contours were not merged. Where the second expert noted ambiguities, both experts discussed and agreed on a final segmentation. This approach aims to increase accuracy but does not involve independent, simultaneous segmentation by multiple experts.

Data preprocessing

The SE-mode images were recorded together with the B-mode images to visualize the underlying structures, and these raw images also contain color bars and other markers unrelated to the tissue. Before using the raw data for training, we removed these undesired markers. Figure 2 shows the overall preprocessing flow. First, the B- and SE-mode images were obtained as raw data. Next, we separated the B- and SE-mode images from the raw data by specifying a region of interest. To remove the markers, we identified their specific colors in the B- and SE-mode images and set the matching pixels to black. For example, yellow circular marks have a pixel value of (132, 132, 0), and the mouse cursor and scale indicators have a pixel value of (177, 177, 177); all RGB values of pixels with these values were set to 0. Each blacked-out pixel was then assigned a new RGB value equal to the average of the non-zero pixel values around it. We trained the network on these images with the undesired marks erased.
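The marker-removal step can be sketched as follows, assuming NumPy RGB arrays. Only the two marker colors quoted above are detected. The 3 × 3 neighborhood used for the fill and the repeated passes for markers wider than one pixel are assumptions, since the text does not specify the neighborhood size. Because detection is an exact color match, a genuine tissue pixel that happens to equal (177, 177, 177) would also be treated as a marker; restricting the search to known overlay regions would avoid that.

```python
import numpy as np

# Marker colors quoted in the text (RGB).
MARKER_COLORS = [(132, 132, 0), (177, 177, 177)]

def remove_markers(img, colors=MARKER_COLORS, max_passes=20):
    """Black out marker pixels, then fill each with the mean of its non-zero 3 x 3 neighbors.

    img: (H, W, 3) uint8 RGB array. Returns a float array of the same shape.
    """
    out = img.astype(float)
    mask = np.zeros(img.shape[:2], dtype=bool)
    for color in colors:
        mask |= np.all(img == np.array(color), axis=-1)
    out[mask] = 0.0  # step 1: markers become black (0, 0, 0)

    for _ in range(max_passes):  # repeated passes fill markers wider than one pixel
        snapshot = out.copy()    # read neighbors from a fixed copy so the order does not matter
        filled = []
        for y, x in zip(*np.nonzero(mask)):
            patch = snapshot[max(y - 1, 0):y + 2, max(x - 1, 0):x + 2].reshape(-1, 3)
            nonzero = patch[np.any(patch > 0, axis=1)]  # ignore black neighbors
            if len(nonzero) > 0:
                out[y, x] = nonzero.mean(axis=0)
                filled.append((y, x))
        if not filled:
            break  # no remaining marker pixel has a non-zero neighbor
        for y, x in filled:
            mask[y, x] = False
    return out
```

The result is a float image; it would normally be rounded back to `uint8` before saving or training.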

Proposed network

The proposed network is shown in Figure 3. It consists of an upstream segmentation task and a downstream classification task: MMF-U-Net performs the upstream segmentation, and its encoder part is reused for the downstream classification.
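The cropping step that links the two tasks can be sketched as below. The text states only that the lesion area is cropped using the predicted mask; taking the mask’s bounding box, thresholding probabilities at 0.5, adding a pixel margin, and falling back to the full image when the mask is empty are all assumptions. The same box is applied to both modalities so that the B- and SE-mode crops stay spatially aligned.

```python
import numpy as np

def mask_bbox(mask, margin=0):
    """Bounding box (y0, y1, x0, x1) of a boolean mask, grown by `margin` pixels."""
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        return None  # empty prediction
    h, w = mask.shape
    y0, y1 = max(int(ys.min()) - margin, 0), min(int(ys.max()) + margin + 1, h)
    x0, x1 = max(int(xs.min()) - margin, 0), min(int(xs.max()) + margin + 1, w)
    return y0, y1, x0, x1

def crop_pair(b_img, se_img, pred_prob, threshold=0.5, margin=8):
    """Crop the B-mode (H, W) and SE-mode (H, W, 3) images with one shared box."""
    box = mask_bbox(pred_prob > threshold, margin)
    if box is None:
        return b_img, se_img  # assumption: fall back to the full images
    y0, y1, x0, x1 = box
    return b_img[y0:y1, x0:x1], se_img[y0:y1, x0:x1]
```

In practice, the crops would then be resized to the classifier’s fixed input size.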

Segmentation model

The proposed MMF-U-Net is based on U-Net, one of the most widely used CNN models for medical imaging tasks [ ]. U-Net has two paths: the encoder, called the contracting path, and the decoder, called the expansive path. Each encoder stage applies 3 × 3 convolutions, batch normalization, and ReLU, followed by 2 × 2 max pooling that halves the spatial size and gradually enlarges the receptive field of the network. The encoder thus captures contextual information. In the decoder, feature maps are up-sampled with a 2 × 2 up-convolution (transposed convolution), followed by the same sequence as in the encoder: 3 × 3 convolutions, batch normalization, and ReLU. In addition, skip connections from the encoder to the decoder at every level preserve features at the same resolution, allowing the decoder to generate the segmentation from both local information in the original image and high-level features. As shown in Figure 3a, MMF-U-Net consists of two encoders and one decoder. The two encoders have the same structure except for the number of input channels: the B-mode encoder has one input channel, and the SE-mode encoder has three. Each encoder consists of five convolution blocks, each containing two 3 × 3 convolutions with ReLU activations. What distinguishes this network from existing methods is that, at each level, the feature maps produced by the convolution blocks of the two encoders are mixed through a fusion block, whose output is added element-wise to the B-mode path.
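Because the two encoders differ only in their input channels, they produce feature maps of identical shape at every level, which is what allows a fusion block to concatenate them level by level. The plain-Python sketch below traces those shapes, assuming a 256 × 256 input, “same” padding, and the channel widths of the original U-Net (64 to 1024); none of these values is given in the text. Under these assumptions, the only parameter difference between the encoders lies in the first convolution.

```python
def encoder_shapes(size=256, widths=(64, 128, 256, 512, 1024)):
    """(channels, height, width) after each of the five convolution blocks.

    The result does not depend on the number of input channels, so the
    B-mode (1-channel) and SE-mode (3-channel) encoders share it.
    """
    shapes = []
    for level, channels in enumerate(widths):
        if level > 0:
            size //= 2  # 2 x 2 max pooling halves H and W before blocks 2-5
        shapes.append((channels, size, size))
    return shapes

def first_conv_weights(in_channels, out_channels=64, k=3):
    """Weight count of the first k x k convolution (bias excluded)."""
    return k * k * in_channels * out_channels

for shape in encoder_shapes():
    print(shape)
print("B-mode first conv:", first_conv_weights(1))   # 9 * 1 * 64 = 576
print("SE-mode first conv:", first_conv_weights(3))  # 9 * 3 * 64 = 1728
```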

Inspired by TSNet [ ], we propose a fusion block that mixes the B- and SE-mode features. The fusion block is structured as shown in Figure 3b and is formulated as follows:

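$$F_l^{f} = f_l^{1}\left(\left[F_l^{B} \,\|\, F_l^{SE}\right]\right) \quad (1)$$

$$F_l^{out} = F_l^{f} \odot \sigma_1\left(f_l^{2}\left(F_l^{f}\right)\right) \quad (2)$$

$$\tilde{F}_l^{B} = F_l^{B} + F_l^{out} \quad (3)$$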

where $F_l^{B}$ and $F_l^{SE}$ are the $l$th-level feature maps obtained through the convolution blocks of the B- and SE-mode images, respectively. The operators $\|$ and $\odot$ denote concatenation and element-wise multiplication, respectively. The function $f_l^{i}(\cdot)$ denotes the $i$th convolution and batch normalization operation, and two such operations are performed in one fusion block. Lastly, $\sigma_1(\cdot)$ denotes the sigmoid function. We concatenate the $F_l^{B}$ and $F_l^{SE}$ feature maps and mix them through a 3 × 3 convolution to compute $F_l^{f}$. Another 3 × 3 convolution, batch normalization, and a sigmoid are then applied to estimate weights, which lie between 0 and 1 because of the sigmoid. Grid attention is applied to the fusion feature map $F_l^{f}$ by element-wise multiplication with these weights. Finally, because the B-mode image visualizes the overall structure, $F_l^{out}$ is added element-wise to the B-mode path, as per eqn. (3). Through these operations, the B-mode encoder receives mixed information from both the B- and SE-mode images.
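A minimal NumPy sketch of one fusion block follows, written to mirror the operations described above: concatenation and mixing by a convolution with batch normalization, sigmoid weighting by a second convolution, and addition into the B-mode path. Several details are assumptions not stated in the text: channels-first arrays for a single image, “same” padding, C output channels for both convolutions (so the weights act per channel and per pixel), batch normalization in its training-mode form over H and W without a learned scale and shift, and random weights in place of trained ones.

```python
import numpy as np

def conv3x3(x, w, b):
    """'Same'-padded 3 x 3 convolution (cross-correlation, as in DL frameworks).

    x: (C_in, H, W), w: (C_out, C_in, 3, 3), b: (C_out,)
    """
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))  # zero padding keeps H and W unchanged
    out = np.zeros((w.shape[0], h, wd))
    for dy in range(3):
        for dx in range(3):
            out += np.einsum("oc,chw->ohw", w[:, :, dy, dx], xp[:, dy:dy + h, dx:dx + wd])
    return out + b[:, None, None]

def batch_norm(x, eps=1e-5):
    """Per-channel normalization over H and W (batch of one, no learned scale/shift)."""
    mean = x.mean(axis=(1, 2), keepdims=True)
    var = x.var(axis=(1, 2), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def init_fusion_params(channels, rng):
    """Random stand-ins for trained weights of the two convolutions."""
    return {
        "w1": rng.normal(0.0, 0.1, (channels, 2 * channels, 3, 3)), "b1": np.zeros(channels),
        "w2": rng.normal(0.0, 0.1, (channels, channels, 3, 3)), "b2": np.zeros(channels),
    }

def fusion_block(f_b, f_se, p):
    cat = np.concatenate([f_b, f_se], axis=0)                      # [F^B || F^SE]
    f_f = batch_norm(conv3x3(cat, p["w1"], p["b1"]))               # first conv + BN -> F^f
    weights = sigmoid(batch_norm(conv3x3(f_f, p["w2"], p["b2"])))  # second conv + BN + sigmoid, in (0, 1)
    f_out = f_f * weights                                          # grid attention -> F^out
    return f_b + f_out                                             # element-wise sum into the B-mode path

rng = np.random.default_rng(0)
f_b = rng.normal(size=(4, 8, 8))
f_se = rng.normal(size=(4, 8, 8))
params = init_fusion_params(4, rng)
fused = fusion_block(f_b, f_se, params)
print(fused.shape)
```

Since the output keeps the shape of $F_l^{B}$, it can replace the B-mode feature map at that level and flow into the next B-mode convolution block and the skip connection.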

The decoder is similar to that of the standard U-Net. We modified the attention gate introduced in Att-U-Net [ ] and used it to emphasize features, applying it only to B-mode features because B-mode visualizes lesion structure much better than SE-mode. Figure 3c shows this attention gate, whose operation is as follows:

$$F_l^{g} = f_l\left(F_l^{B} + f_t\left(F_{l+1}^{B}\right)\right)$$
