Abstract

Objective

Deep learning approaches such as DeepACSA enable automated segmentation of muscle ultrasound cross-sectional area (CSA). Although they provide fast and accurate results, most are developed using data from healthy populations. The changes in muscle size and quality following anterior cruciate ligament (ACL) injury challenge the validity of these automated approaches in the ACL population. Quadriceps muscle CSA is an important outcome following ACL injury; therefore, our aim was to validate DeepACSA, a convolutional neural network (CNN) approach, in people with an ACL injury.

Methods

Quadriceps panoramic CSA ultrasound images (vastus lateralis [VL] n = 430, rectus femoris [RF] n = 349, and vastus medialis [VM] n = 723) from 124 participants with an ACL injury (age 22.8 ± 7.9 y, 61 females) were used to train CNN models. For VL and RF, combined models included extra images from healthy participants ( n = 153, age 38.2, range 13–78) that the DeepACSA was developed from. All models were tested on unseen external validation images ( n = 100) from ACL-injured participants. Model predicted CSA results were compared to manual segmentation results.

Results

All models showed good comparability (ICC > 0.81, < 14.1% standard error of measurement, mean differences of < 1.56 cm²) to manual segmentation. Removal of the erroneous predictions resulted in excellent comparability (ICC > 0.94, < 7.40% standard error of measurement, mean differences of < 0.57 cm²). Erroneous predictions were 17% for combined VL, 11% for combined RF, and 20% for ACL-only VM models.

Conclusion

The new CNN models provided can be used in ACL-injured populations to measure CSA of VL, RF, and VM muscles automatically. The models yield high comparability to manual segmentation results and reduce the burden of manual segmentation.

Introduction

Quadriceps femoris anatomical cross-sectional area (CSA) is an important outcome measure to quantify muscle size and understand neuromuscular function in healthy and knee-injured populations [ , ]. Panoramic ultrasound provides reliable and accessible measurements of muscle CSA [ , , ]. However, obtained ultrasound images are generally manually segmented, which is time consuming and creates variability in results due to its user-dependency.

Automation of image segmentation can enable large volumes of images to be analyzed in a reliable and efficient way, and reduce variability in segmentations [ , ]. Semiautomated and automated deep learning algorithms are commonly used to automate the processing of medical images in a variety of medical fields [ ]. Automation of the image processing can increase efficiency of the muscle ultrasound imaging process and may encourage more clinicians and researchers to use the technology, as it replaces the monotonous task of image tracing. Although ultrasound imaging is still a user-dependent process, automatic segmentations can decrease the variability in the analysis phase and increase the overall robustness of the measurement. This could further be implemented in the ultrasound devices themselves and may provide real-time CSA values to the users, which could be extremely useful for clinicians. DeepACSA, using convolutional neural networks (CNN), offers fast and objective automatic segmentation of vastus lateralis (VL) and rectus femoris (RF) heads of quadriceps femoris muscle panoramic ultrasound images comparable with manual segmentation in healthy people [ ]. With a fully automated approach, DeepACSA also reduces subjectivity and rater-bias when compared to other semiautomated approaches, and is freely available online [ , , ]. However, DeepACSA was developed using muscle ultrasound images from people with no known knee or muscle injuries. Therefore, its usability on images from people with different knee conditions is unknown, considering that quadriceps muscle function, structure and quality may change in clinical populations, such as those following anterior cruciate ligament (ACL) injury or surgery (ACLR), patellofemoral pain and knee osteoarthritis [ , ].

ACL injury and ACLR surgery cause persistent deficits in quadriceps femoris muscle function, with muscle weakness being the most evident [ ]. This quadriceps weakness may decrease overall quality of life [ , ], alter movement patterns [ , ], decrease functional performance [ ], and contribute to post-traumatic knee osteoarthritis onset [ ]. A decrease in muscle CSA (i.e., atrophy) contributes to muscle weakness and may explain more than 30% of variance in muscle strength following ACLR [ ]. Therefore, increasing muscle size and strength is a primary focus of ACL rehabilitation [ ]. Measuring muscle size is also important to identify these deficits, track changes in muscle size over time, and investigate the effectiveness of different interventions on muscle atrophy. Panoramic ultrasound enables the measurement of muscle CSA in both research and clinical settings in a quick and reliable way [ , ]. Therefore, it is commonly used to examine the quadriceps muscle CSA of ACL patients throughout rehabilitation [ , ]. Incorporating automated approaches, such as DeepACSA, into this process would facilitate faster analysis of the ultrasound data in ACL research settings. It would also shorten the examination of muscle CSA for ACL patients in clinical settings.

Altered muscle quality resulting from ACL injury may affect the ability of automated image segmentation approaches (e.g., DeepACSA) to accurately find muscle borders, and therefore their validity. CNN models specifically developed using ultrasound images from people with an ACL injury are thus needed. Also, the DeepACSA models provided are only for VL and RF muscles [ ] and a model for vastus medialis (VM) is not yet available. Having a VM model is important as it is one of the most commonly measured muscles in populations with knee conditions [ ].

Therefore, we aimed to train CNN models with quadriceps femoris (RF, VL, and VM) panoramic ultrasound images of people with an ACL injury and reconstructive surgery, and establish the validity of different CNN models to automate quadriceps muscle CSA segmentation in this population. Our objectives were: (1) to train one CNN model for each quadriceps femoris muscle head (RF, VL, and VM) using ACL injured and reconstructed images only, (2) to train CNN models using images from a combination of healthy and ACL injured and reconstructed people (for RF and VL muscles), and (3) to compare the performance of the ACL only model, the combined model, and the original DeepACSA model in an unseen external validation dataset of images.

Methods

This study developed models for VL, RF, and VM muscles using muscle images from ACL-injured participants. In addition to the ACL models, data from the previously published DeepACSA work, which included muscle images from healthy participants only, were used to develop separate models with the ACL-injured and healthy images combined. This was done to identify the best-performing models for clinical use. We then evaluated the external validity of these models using a set of muscle images from ACL-injured participants.

Ultrasound images for ACL models

A total of 124 participants with an ACL injury (age 22.8 ± 7.9 y, 61 females and 63 males) went through ultrasound assessment of the quadriceps femoris muscle before and 2 months after reconstructive surgery (ACLR). Presurgery data was collected a median of 50 (interquartile range: 34 to 74) days after ACL injury and postsurgery data was collected a median of 64 (interquartile range: 51 to 93) days after ACLR surgery. All participants received information about the procedures, risks and benefits of the study and signed an informed consent form approved by the university ethics committee prior to any data collection.

Bilateral ultrasound images (one to three images for each muscle) were collected for VL, RF, and VM muscles using GE Logiq e BT11 (GE Ultrasound, Chicago, Illinois, USA) with a 39 mm linear-array probe. All ultrasound data were collected in panoramic brightness mode (12 MHz; gain, 50; depth, VL 6 cm, RF 4.5 cm, VM 7 cm). Participants lay supine with their knees slightly flexed (15°) and supported on a padded treatment bed, so that they could maintain the same position with a relaxed quadriceps femoris muscle. The images for VL were collected at 50% of the thigh, defined as the distance from the anterior superior iliac spine to the lateral border of the knee joint. RF data were collected at 50% of the thigh, defined as the distance from the anterior superior iliac spine to the superior border of the patella. Finally, VM images were acquired at 80% of the distance from the anterior superior iliac spine to the medial border of the knee joint. The transducer was aligned in the transverse plane to collect the panoramic images of the resting muscles. The probe was anatomically perpendicular to the muscle. All images were collected using the same methods for all the participants, taking care not to compress the skin and underlying muscle.

Ultrasound images from healthy participants

All the healthy data used in this study are from the original DeepACSA [ ]. Healthy data were collected from 153 participants (age = 38.2 years, range: 13–78) as described previously [ ]. Briefly, participants lay in a supine position with their legs fully extended and feet on the bed. The images were acquired at rest in 10% increments from 30% to 70% of femur length, from the lateral femur condyle to the trochanter major for RF and VL using brightness mode panoramic ultrasonography in three devices (ACUSON Juniper, linear-array 54 mm probe, 12L3, Acuson 12L3, SIEMENS Healthineers, Erlangen, Germany; Aixplorer Ultimate, linear-array 38 mm probe, Superline SL10-2, SuperSonic Imagine, Aix-en-Provence, France; Mylab 70, linear-array 47 mm probe, Esaote Biomedica, Genova, Italy). A total of 602 ultrasound images of the RF and 634 ultrasound images of the VL were collected.

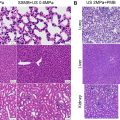

Model training for ACL models

The same model training methodology as DeepACSA was employed in this study [ ]. Images from 99 ACL-injured participants (age 23.2 ± 8.2, 46 females and 53 males) were used at the model training stage and the remaining data from 25 ACL-injured participants (age 21.4 ± 6.2, 15 females and 10 males) were used to externally validate the trained models. A combination of pre-ACLR and post-ACLR images was used for model training (VL n = 430, RF n = 349, and VM n = 723), as there are changes in muscle structure and quality as a result of injury at pre-ACLR and further changes as a result of reconstruction surgery at post-ACLR. All images were manually segmented and labeled by digitizing the CSA of each muscle using the polygon tool in FIJI (distribution of the ImageJ software, US National Institutes of Health, Bethesda, Maryland, USA) [ ] ( Fig. 1 ). Images and masks in the training sets of all muscles were augmented using height and width shift, rotation, and horizontal flipping, and resized to 256 × 256 pixels. A random 90/10% training/internal validation data split within the training data set was applied during model training.
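The random 90/10% split and the paired image and mask augmentation can be illustrated with a small NumPy sketch. This is not the DeepACSA training pipeline: the function names `split_train_val` and `flip_pair`, the seed, and the rounding rule are assumptions made for illustration, and only horizontal flipping is shown.

```python
import numpy as np

def split_train_val(n_items, val_fraction=0.10, seed=0):
    """Randomly split item indices into training and internal validation sets.

    Hypothetical helper: the seed and rounding rule are illustrative choices.
    """
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_items)
    n_val = int(round(n_items * val_fraction))
    return order[n_val:], order[:n_val]

def flip_pair(image, mask):
    """Apply the same horizontal flip to an image and its segmentation mask.

    Any geometric augmentation has to be applied identically to both arrays,
    otherwise the mask no longer outlines the muscle in the image.
    """
    return image[:, ::-1], mask[:, ::-1]

# Example with the number of VL training images reported in the text.
train_idx, val_idx = split_train_val(430)

# Toy 2 x 3 image and mask to show the paired flip.
image = np.array([[1, 2, 3], [4, 5, 6]])
mask = np.array([[1, 0, 0], [1, 1, 0]])
flipped_image, flipped_mask = flip_pair(image, mask)
```

With 430 images this yields 387 training and 43 internal validation indices, and the flipped mask covers the same number of pixels as the original.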

Semantic segmentation was performed with two classes (CSA and background) on preprocessed ultrasound images. The image-label pairs ( Fig. 1 ) served as input for the models. We used a combination of a VGG16 encoder [ ] and a U-Net decoder, which has demonstrated good performance when trained on a low number of images [ ], because of the U-Net’s ability to handle small datasets comparably well [ ]. The model outputs a pixelwise binary label; thus, every pixel of an image is predicted to belong to one of two possible classes. As a result, a 512-dimensional abstract representation of the input image is generated. An NVIDIA RTX A2000 GPU (NVIDIA, Santa Clara, CA) was used for model training with a defined maximum of 60 epochs and a batch size of one. The learning rate was reduced by a factor of 0.001 after 10 epochs of loss stagnation. Binary cross entropy was used as a loss function. During training, model performance was evaluated using the intersection-over-union (IoU) measure to test the overlap between manually segmented muscle area and labels predicted by the respective model. IoU is defined as $\mathrm{IoU}(A, B) = \frac{|A \cap B|}{|A \cup B|}$, where 0 ≤ IoU (A, B) ≤ 1, A describes the ground truth (manually segmented muscle area), and B is the model prediction. The IoU is maximal if the ground truth A and model prediction B are the same and minimal if A and B have no overlap.
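As a minimal sketch, the IoU between a manual mask and a predicted mask can be computed on binary arrays as the number of overlapping pixels divided by the number of pixels in the union. The function name `iou` and the convention of returning 1.0 for two empty masks are assumptions for illustration, not part of the DeepACSA code.

```python
import numpy as np

def iou(truth, pred):
    """Intersection-over-union of two binary masks of equal shape."""
    truth = np.asarray(truth, dtype=bool)
    pred = np.asarray(pred, dtype=bool)
    union = np.logical_or(truth, pred).sum()
    if union == 0:
        # Assumed convention: two empty masks agree perfectly.
        return 1.0
    intersection = np.logical_and(truth, pred).sum()
    return float(intersection / union)

# Toy 4 x 4 masks: two 2 x 2 squares offset by one column.
truth = np.zeros((4, 4), dtype=int)
truth[0:2, 0:2] = 1
pred = np.zeros((4, 4), dtype=int)
pred[0:2, 1:3] = 1

score = iou(truth, pred)  # 2 overlapping pixels / 6 pixels in the union
```

Identical masks give an IoU of 1 and masks with no overlap give 0, matching the bounds stated above.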

Details of the network architecture and training can be found in the original DeepACSA article [ ]. The DeepACSA code is written in Python using Keras Tensorflow for model training. The code and trained models from the project are openly available at https://github.com/PaulRitsche/DeepACSA.git . The ACL models developed in this study are also available at ( https://github.com/ORBLab-umich ).

ACL-only and combined models

In addition to the ACL models, separate models were developed using both ACL-injured and healthy muscle images. Two separate models were trained for VL and RF muscles, one including only ACL images (ACL-only), and one including both ACL and healthy images from the original DeepACSA (Combined). Healthy images were available only for RF ( n = 602) and VL ( n = 634) muscles (from adolescent males: n = 50, 16.4 yr [13 to 17 yr]; adults: n = 83 [25 females, 58 males], 26.9 yr [18 to 40 yr]; elderly males: n = 10, 71.5 yr [65 to 78 yr]); therefore, only one VM model was trained in this study, including ACL images only. This resulted in five new models trained with different numbers of images: VL-combined ( n = 1064), VL-ACL-only ( n = 430), RF-combined ( n = 951), RF-ACL-only ( n = 349), and VM-ACL-only ( n = 723).

External validation

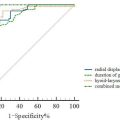

Images from a second group of ACL-injured participants ( n = 25, age 21.4 ± 6.2, 15 females and 10 males) were used for external validation purposes. These images were not used in model training, so were unseen to the models. A total of 100 images for each muscle group (VL, RF, and VM) were selected, equally representing limbs (ACL vs. non-ACL) and different time-points (pre- and post-ACLR). This resulted in including four images from each participant, one image from each pre- and post-ACLR time-point, for both ACL and non-ACL limbs. This set of images was used to assess the accuracy of each model, including the original DeepACSA models trained with healthy images only (for VL and RF). For VM, as only one model was developed in this study, this set of images was used to assess its external validity only, without any model comparisons. All images were run through the models and first checked for correct and erroneous prediction percentages. This was done by visual inspection of the input image and the model prediction output ( Fig. 2 ). If the muscle borders were not similar to what would be used during manual segmentation, the prediction was classified as erroneous. Results of the automatic segmentations were then compared to the manual segmentation results. The comparisons were also repeated after the erroneous predictions were removed from the analysis, as these predictions would ideally not be used in practice. The comparisons were done using SPSS (Version 24; IBM Corp) through ICC (2-way mixed-effects model with absolute agreement), standard error of measurement (SEM), and standardized mean difference (SMD) [ ]. Bland–Altman analyses were used to test the agreement between the automated and manual analysis results, plotting the mean of the differences between the two measures and showing the limits of agreement within which 95% of differences between the measures would be expected to lie [ ].
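The agreement statistics can be sketched in a few lines of NumPy. The study ran these analyses in SPSS, so the code below is only an illustration under stated assumptions: the limits of agreement are taken as the mean difference ± 1.96 standard deviations of the differences, the SEM is taken as SD × √(1 − ICC) (a common formula that the text does not specify), and the function names and CSA values are hypothetical.

```python
import numpy as np

def bland_altman(auto, manual):
    """Return the mean difference (bias) and 95% limits of agreement."""
    diff = np.asarray(auto, dtype=float) - np.asarray(manual, dtype=float)
    bias = diff.mean()
    sd = diff.std(ddof=1)  # sample standard deviation of the differences
    return bias, bias - 1.96 * sd, bias + 1.96 * sd

def sem_from_icc(values, icc):
    """Standard error of measurement, assuming SEM = SD * sqrt(1 - ICC)."""
    return float(np.std(values, ddof=1) * np.sqrt(1.0 - icc))

# Made-up CSA values in cm^2, for illustration only.
auto_csa = [10.0, 12.0, 14.0, 16.0]
manual_csa = [9.0, 12.0, 13.0, 16.0]

bias, lower, upper = bland_altman(auto_csa, manual_csa)
sem = sem_from_icc([1.0, 2.0, 3.0], icc=0.75)
```

Expressing the SEM as a percentage, as reported in the abstract, would additionally require dividing by a reference mean; the text does not state which mean was used.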

Results

Training performance results of the selected models on the internal validation set are displayed in Table 1 .

| Model | VL IoU | VL Accuracy | RF IoU | RF Accuracy | VM IoU | VM Accuracy |
|---|---|---|---|---|---|---|
| Combined model | 0.995 | 0.975 | 0.996 | 0.981 | N/A | N/A |
| ACL-only model | 0.996 | 0.980 | 0.996 | 0.982 | 0.995 | 0.977 |
