Machine learning is an important tool for extracting information from medical images. Deep learning has made this more efficient by not requiring an explicit feature extraction step and, in some cases, by detecting features that humans had not identified. The rapid advance of deep learning technologies continues to produce valuable tools. The most effective use of these tools will occur when developers also understand the properties of medical images and the clinical questions at hand. Performance metrics also are critical, both for guiding the training of an artificial intelligence system and for assessing and comparing such tools.

Key points

- There have been, and will continue to be, rapid advances in deep learning technology that will have a positive impact on medical imaging.
- It is critical to understand the unique properties of medical images and the performance metrics when building a training set and training a network.
- Medical images have some properties not shared by photographic images, so models built for photographic images require adaptation.

Medical images

An important starting point in the application of machine learning and deep learning, particularly for those unfamiliar with medical imaging, is to recognize some of the special properties of medical images. Compared with photographic images, medical images typically have lower spatial resolution but higher contrast resolution. For instance, MR images typically are 256 × 256 pixels and computed tomography (CT) images are 512 × 512 pixels. CT images have at least 12 bits of grayscale information, which is more than the eye can perceive; therefore, perceiving all the information present in a CT image requires using multiple contrast and brightness settings. In radiology, these settings are referred to as the window width and window center. The displayed intensities range from center − width/2 up to center + width/2: values below this range appear black, and values above it appear white. CT images also have a consistent intensity scale, in which water has a value of 0 and air has a value of −1000. These units are Hounsfield units (HU), honoring the inventor of the CT scanner, Sir Godfrey Hounsfield. Most other medical imaging modalities, by contrast, do not have a reproducible intensity scale; therefore, intensity normalization is a critical early step in their image processing pipelines.
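The window settings described above map a chosen range of Hounsfield units onto the displayable gray range. The following Python sketch (using NumPy) shows one way to do this, along with a simple z-score intensity normalization of the kind often applied to modalities without a reproducible intensity scale. The function names and the soft-tissue window values (center 40 HU, width 400 HU) are illustrative assumptions, not values prescribed by this article.

```python
import numpy as np

def apply_window(hu, center, width):
    """Map intensities in [center - width/2, center + width/2] to [0, 1].

    Values below the window become 0 (black) and values above it become 1 (white).
    """
    low = center - width / 2.0
    high = center + width / 2.0
    return np.clip((np.asarray(hu, dtype=float) - low) / (high - low), 0.0, 1.0)

def zscore_normalize(image, mask=None):
    """Rescale intensities to zero mean and unit standard deviation.

    If a boolean mask is given (e.g., a hypothetical body mask), the statistics
    are computed only inside it, so background air does not dominate them.
    """
    image = np.asarray(image, dtype=float)
    values = image[mask] if mask is not None else image
    std = values.std()
    if std == 0:
        raise ValueError("cannot normalize an image with constant intensity")
    return (image - values.mean()) / std

# Air (-1000 HU), water (0 HU), and dense bone (1000 HU) in an assumed soft-tissue window.
print(apply_window([-1000, 0, 1000], center=40, width=400))
```

With this window, water lands at 0.4 of the gray range, while air and bone are clipped to black and white; a different window would be needed to see detail in either of them.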

It was recognized in the late 1980s that an open standard for representing and transmitting medical images was critical to the advance of the medical imaging sciences. That led to the development of the American College of Radiology (ACR) and National Electrical Manufacturers Association (NEMA) standard, whose version 3 was named Digital Imaging and Communications in Medicine (DICOM) rather than ACR-NEMA. DICOM images consist of a header and a body, the body being the actual pixel data of the image. The header consists of a set of keys and values, where the keys are standard, coded tags and the values are encoded in a prescribed way. DICOM tags typically are described as having a group and an element, each consisting of 4 hexadecimal digits. The NEMA Web site contains the entire dictionary of valid DICOM tags. DICOM does permit manufacturers to insert proprietary information in any tag whose group number is odd. This allows storage of information that is of interest to the image generator and/or not yet standardized by DICOM.
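Because each DICOM tag is a (group, element) pair of 4-digit hexadecimal numbers, code often handles it as a single 32-bit integer. The plain-Python sketch below (helper names are hypothetical) splits such an integer into its group and element, formats it in the conventional (gggg,eeee) notation, and applies the odd-group rule for private tags described above in simplified form. Libraries such as pydicom provide their own tag types; this sketch does not rely on them. (0010,0010) is the standard Patient's Name tag and (7FE0,0010) is Pixel Data; (0029,1010) is used here only as an example of an odd, and therefore private, group.

```python
def split_tag(tag: int) -> tuple[int, int]:
    """Split a 32-bit DICOM tag into its 16-bit group and element numbers."""
    return (tag >> 16) & 0xFFFF, tag & 0xFFFF

def format_tag(tag: int) -> str:
    """Format a tag as (gggg,eeee) using 4 uppercase hexadecimal digits each."""
    group, element = split_tag(tag)
    return f"({group:04X},{element:04X})"

def is_private(tag: int) -> bool:
    """Simplified rule: tags with an odd group number hold manufacturer-specific data."""
    group, _ = split_tag(tag)
    return group % 2 == 1

for tag in (0x00100010, 0x7FE00010, 0x00291010):
    print(format_tag(tag), "private" if is_private(tag) else "standard")
```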

How images are stored

DICOM originally focused on the transfer of image data between 2 devices; therefore, a file format was not included in the original specification. Even today, much of the DICOM standard focuses on transfer of image data rather than storage. There is, however, a DICOM standard for how image data should be stored, which essentially is a serialization of the header and body. Most of the time, each 2-dimensional image is stored as a separate DICOM file, although standards do exist for both multidimensional and multi–time point images. Adoption of these latter formats has been slow.
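The stored form of a DICOM object (defined in Part 10 of the standard) begins with a 128-byte preamble followed by the 4 characters "DICM", after which the header elements and pixel data are serialized. Because each 2-dimensional image usually is its own file, a 3-dimensional volume typically must be assembled by the user. The Python sketch below checks for the Part 10 prefix and stacks slices in order of position. It assumes that the slice positions (for example, the z component of the Image Position (Patient) tag, (0020,0032)) and the pixel arrays have already been read by a DICOM library; sorting on z alone is a simplification that suits axial acquisitions, and the function names are hypothetical.

```python
import numpy as np

def has_part10_prefix(data: bytes) -> bool:
    """True if the bytes start with a 128-byte preamble followed by b'DICM'."""
    return len(data) >= 132 and data[128:132] == b"DICM"

def stack_slices(slices):
    """Stack (z_position, 2D pixel array) pairs into a volume ordered by z.

    Files are often listed in arbitrary order, so sorting by position (rather
    than by file name) is needed to get anatomically ordered slices.
    """
    ordered = sorted(slices, key=lambda s: s[0])
    return np.stack([pixels for _, pixels in ordered], axis=0)

# Usage with synthetic data standing in for parsed files.
fake_file = bytes(128) + b"DICM" + b"..."
print(has_part10_prefix(fake_file))
slices = [(10.0, np.full((2, 2), 2)), (0.0, np.full((2, 2), 0)), (5.0, np.full((2, 2), 1))]
volume = stack_slices(slices)
print(volume.shape, volume[:, 0, 0])
```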

Particularly before these multidimensional formats existed, medical imaging researchers developed their own formats for storing images. One of the early popular formats is the Analyze format, which used 2 files: one for the header information describing the image data and the other for the actual pixel data. The Neuroimaging Informatics Technology Initiative (NIfTI) format extends the Analyze format to provide more information in the header and also to join the 2 components into 1 file. There are other formats, such as MetaImage (mhd) and NRRD, which are similar to NIfTI and supported by some specific software packages.
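To make the header and pixel-data split concrete, the sketch below reads a few fields from a NIfTI-1 header using only Python's standard struct module. The offsets follow the published NIfTI-1 header layout: a 348-byte header with the image dimensions at byte 40, the datatype at byte 70, the voxel sizes at byte 76, the offset to the pixel data at byte 108, and a magic string at byte 344 that is "n+1" for single-file images and "ni1" for the 2-file layout. The function name is hypothetical; in practice a maintained library such as nibabel normally would be used instead.

```python
import struct

def read_nifti1_header(raw: bytes) -> dict:
    """Parse selected fields from the first 348 bytes of a NIfTI-1 file."""
    if len(raw) < 348:
        raise ValueError("a NIfTI-1 header is 348 bytes long")
    # sizeof_hdr must equal 348; checking it also reveals the byte order.
    for endian in ("<", ">"):
        if struct.unpack_from(endian + "i", raw, 0)[0] == 348:
            break
    else:
        raise ValueError("not a NIfTI-1 header")
    dim = struct.unpack_from(endian + "8h", raw, 40)       # dim[0] = number of dimensions
    datatype, bitpix = struct.unpack_from(endian + "2h", raw, 70)
    pixdim = struct.unpack_from(endian + "8f", raw, 76)    # pixdim[1:] = voxel sizes
    vox_offset = struct.unpack_from(endian + "f", raw, 108)[0]
    ndim = dim[0]
    return {
        "shape": dim[1:1 + ndim],
        "voxel_size": pixdim[1:1 + ndim],
        "datatype": datatype,
        "bits_per_voxel": bitpix,
        "pixel_data_offset": vox_offset,
        "single_file": raw[344:348] == b"n+1\x00",
    }

# Usage: build a synthetic little-endian header for a 64 x 64 x 30 int16 volume.
hdr = bytearray(348)
struct.pack_into("<i", hdr, 0, 348)
struct.pack_into("<8h", hdr, 40, 3, 64, 64, 30, 1, 1, 1, 1)
struct.pack_into("<2h", hdr, 70, 4, 16)  # datatype 4 = signed 16-bit integer
struct.pack_into("<8f", hdr, 76, 1.0, 1.5, 1.5, 3.0, 0.0, 0.0, 0.0, 0.0)
struct.pack_into("<f", hdr, 108, 352.0)
hdr[344:348] = b"n+1\x00"
info = read_nifti1_header(bytes(hdr))
print(info["shape"], info["voxel_size"], info["single_file"])
```

Unlike a bare stack of DICOM slices, the header alone tells the reader the volume's shape and physical voxel size.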

Elements of machine learning

Features

A machine learning system requires features, which are numerical values computed from the image(s). When several such values for 1 example are put together, they are called a feature vector. For a system to learn, it must be given the answer for each of these examples, and it must be given a reasonable number of examples. The number required depends on how strong the signal in the features is as well as on the machine learning method used.

Features are the real starting point for machine learning. In the case of medical images, features may be the actual pixel values, edge strengths, variation in pixel values in a region, or other values computable from the pixels. Nonimage features, such as the age of the patient and whether a laboratory test result is positive or negative, also can be used. When all these features are combined for an example, the result is referred to as a feature vector or input vector.
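As an illustration, the sketch below assembles a small feature vector for one example from a region of interest (ROI) in an image plus 2 nonimage features. The choice of features (mean and standard deviation of ROI intensity, mean gradient magnitude as an edge-strength measure, patient age, and a binary laboratory result) is an assumption made for this example, and the function name is hypothetical.

```python
import numpy as np

def feature_vector(image, roi_mask, age, lab_positive):
    """Combine image-derived and nonimage features into one numeric vector."""
    image = np.asarray(image, dtype=float)
    roi = image[roi_mask]
    # Edge strength: magnitude of the intensity gradient at each pixel.
    gy, gx = np.gradient(image)
    edge_strength = np.hypot(gx, gy)[roi_mask]
    return np.array([
        roi.mean(),                    # average intensity in the ROI
        roi.std(),                     # variation of intensity in the ROI
        edge_strength.mean(),          # average edge strength in the ROI
        float(age),                    # nonimage feature: patient age
        1.0 if lab_positive else 0.0,  # nonimage feature: laboratory result
    ])

image = np.zeros((6, 6))
image[2:4, 2:4] = 10.0
fv = feature_vector(image, image > 0, age=65, lab_positive=True)
print(fv)
```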

Feature engineering

Although this might make it sound as if the native pixel values simply could be used as the features, that actually is rare. The intensity often is 1 part of the vector, but other features, such as edge strength, regional intensity, regional texture, and many others, routinely are used. Determining which features should be used and how to calculate them from medical images is called feature engineering. Good feature engineering requires knowledge of the properties of medical images (eg, how to normalize intensities and whether pixel dimensions are fixed, calculable, or unknown) and knowledge of image processing algorithms that can extract the features likely to be useful.
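Two small examples of engineered features follow. The first converts a region's pixel count into a physical area using the pixel spacing, which is possible only when pixel dimensions are known (for DICOM images, from a tag such as Pixel Spacing); the second computes a local-variance map as a crude texture measure. Both functions are hypothetical illustrations in Python with NumPy (version 1.20 or later, for `sliding_window_view`).

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def region_area_mm2(mask, pixel_spacing_mm):
    """Area of a binary region in mm^2, given (row, column) pixel spacing in mm."""
    row_mm, col_mm = pixel_spacing_mm
    return float(np.count_nonzero(mask)) * row_mm * col_mm

def local_variance(image, size=3):
    """Variance of intensities in each size x size neighborhood (valid region only)."""
    windows = sliding_window_view(np.asarray(image, dtype=float), (size, size))
    return windows.var(axis=(-2, -1))

mask = np.zeros((10, 10), dtype=bool)
mask[2:5, 3:7] = True                      # a 3 x 4 region of 12 pixels
print(region_area_mm2(mask, (0.5, 0.5)))   # area assuming 0.5 mm square pixels
```

The same 12-pixel region would have a different physical area on a scanner with different pixel spacing, which is why raw pixel counts are a poor feature when spacing varies.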

Feature reduction

In general, machine learning benefits from having more data for each example (as well as more examples) in order to learn the task. It also is the case, however, that including features that do not help in making the prediction (uninformative features) or that overlap with other features (redundant features) can result in poorer performance. Therefore, it generally is desirable to remove features that contribute nothing or contribute little, a process known as feature reduction or feature selection. Feature reduction has the additional benefit of reducing the computational cost at inference time.

There are 3 categories of methods used to achieve feature reduction: filter methods, wrapper methods, and embedded methods. Filter methods use some metric to determine how predictive each feature is on its own, and those features that are most predictive while also being independent of the others are selected. Pearson correlation and the chi-square test are 2 popular filter methods.
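A filter method can be sketched in a few lines. The Python example below ranks features by the absolute Pearson correlation with the outcome and then skips any candidate that is highly correlated with a feature already chosen, so the selected features are both predictive and largely independent. The redundancy threshold of 0.9, the function name, and the synthetic data are arbitrary choices for illustration.

```python
import numpy as np

def pearson_filter(X, y, k, redundancy_threshold=0.9):
    """Return indices of up to k features, most predictive first, skipping redundant ones."""
    n_features = X.shape[1]
    relevance = np.array([abs(np.corrcoef(X[:, j], y)[0, 1]) for j in range(n_features)])
    selected = []
    for j in np.argsort(-relevance):  # most correlated with y first
        if len(selected) == k:
            break
        redundant = any(
            abs(np.corrcoef(X[:, j], X[:, s])[0, 1]) > redundancy_threshold
            for s in selected
        )
        if not redundant:
            selected.append(int(j))
    return selected

rng = np.random.default_rng(0)
y = rng.normal(size=200)
strong = y + 0.1 * rng.normal(size=200)
X = np.column_stack([
    rng.normal(size=200),                  # 0: unrelated noise
    strong,                                # 1: strongly predictive
    strong + 0.01 * rng.normal(size=200),  # 2: near-duplicate of feature 1
    y + rng.normal(size=200),              # 3: moderately predictive
])
print(pearson_filter(X, y, k=2))
```

Only one of the near-duplicate features 1 and 2 survives; the second slot goes to the less predictive but independent feature 3.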

Wrapper methods evaluate candidate subsets of features by training and testing the model on them. For example, a wrapper method may search for the feature whose removal causes the least reduction in performance; as it proceeds, it repeatedly removes features, discarding those that either are not predictive or that overlap significantly with other features.
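One common wrapper strategy, backward elimination, can be sketched as follows. Here the wrapped model is ordinary least squares scored by R² on a held-out portion of the data; both the model and the scoring choice are assumptions for illustration, and any learner with a validation score could be substituted. Function names and data are hypothetical.

```python
import numpy as np

def holdout_r2(X, y, cols, n_train):
    """Fit least squares on the first n_train rows using `cols`; return R^2 on the rest."""
    A = np.column_stack([np.ones(len(X)), X[:, cols]])
    coef, *_ = np.linalg.lstsq(A[:n_train], y[:n_train], rcond=None)
    residual = y[n_train:] - A[n_train:] @ coef
    return 1.0 - np.mean(residual ** 2) / np.var(y[n_train:])

def backward_elimination(X, y, n_keep, n_train):
    """Repeatedly drop the feature whose removal lowers the validation score the least."""
    remaining = list(range(X.shape[1]))
    while len(remaining) > n_keep:
        scores = {
            j: holdout_r2(X, y, [c for c in remaining if c != j], n_train)
            for j in remaining
        }
        least_useful = max(scores, key=scores.get)  # removing it hurts least
        remaining.remove(least_useful)
    return remaining

rng = np.random.default_rng(1)
X = rng.normal(size=(300, 5))
y = 3 * X[:, 0] - 2 * X[:, 2] + 0.1 * rng.normal(size=300)
print(backward_elimination(X, y, n_keep=2, n_train=200))
```

Because the model is retrained for every candidate subset, wrapper methods are far more expensive than filter methods, but they judge features by their effect on the actual learner.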

Some learning methods have feature reduction built into them, hence the term embedded. Examples of embedded methods include lasso, whose training drives the coefficients of features that do not significantly improve performance to exactly zero, and random forests, whose training produces feature importance scores that can be used to discard weak features.
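To show how an embedded method discards features during training, the sketch below fits a lasso model by cyclic coordinate descent. The soft-thresholding step sets a coefficient exactly to zero whenever that feature's correlation with the remaining residual is smaller than the penalty `alpha`, which removes the feature from the model. This is a minimal NumPy illustration assuming centered data and no intercept, with a hypothetical function name, not a substitute for a tuned library implementation.

```python
import numpy as np

def soft_threshold(z, a):
    """Shrink z toward 0 by a; values within [-a, a] become exactly 0."""
    return np.sign(z) * max(abs(z) - a, 0.0)

def lasso_cd(X, y, alpha, n_iter=200):
    """Minimize (1/(2n))*||y - Xw||^2 + alpha*||w||_1 by cyclic coordinate descent.

    Assumes the columns of X and y are centered, so no intercept is fitted.
    """
    n, p = X.shape
    w = np.zeros(p)
    col_sq = (X ** 2).sum(axis=0) / n
    residual = y.astype(float).copy()  # equals y - X @ w while w is all zeros
    for _ in range(n_iter):
        for j in range(p):
            residual += X[:, j] * w[j]  # residual without feature j's contribution
            rho = X[:, j] @ residual / n
            w[j] = soft_threshold(rho, alpha) / col_sq[j]
            residual -= X[:, j] * w[j]  # include feature j's updated contribution
    return w

rng = np.random.default_rng(2)
X = rng.normal(size=(200, 5))
y = 3 * X[:, 0] - 2 * X[:, 2] + 0.1 * rng.normal(size=200)
X -= X.mean(axis=0)
y -= y.mean()
print(np.round(lasso_cd(X, y, alpha=0.1), 3))
```

The coefficients of the 3 noise features come out exactly zero, while the 2 informative coefficients are kept but slightly shrunk toward zero by the penalty.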

Machine Learning Models

Before discussing the actual machine learning techniques, the use of the term model should be addressed. A model can refer to the general form of a machine learning method, such as a decision tree or support vector machine (SVM), and also to the specific architecture of a deep learning network, such as ResNet50 or DenseNet121. Model also can refer to the trained version of the machine learning tool, so readers must infer from context which meaning an investigator intends. Because this article does not describe any trained versions, model always refers to the architecture and not to a trained version.

Logistic regression

Logistic regression is a well-established technique that, despite its name, generally is used as a classifier. Logistic regression models have a fixed number of parameters that depends on the number of input features, and they output a categorical prediction. The method is similar to linear regression, in which a set of points is fitted to a line by minimizing a function such as the mean squared error (MSE). Logistic regression instead fits the data to a sigmoid function whose output ranges from 0 to 1, usually by minimizing the cross-entropy (log) loss rather than the MSE; when the output is less than 0.5, the example is assigned to one class, and otherwise it is assigned to the other.
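A minimal logistic regression can be written directly in NumPy: the model computes a weighted sum of the features plus a bias, passes it through the sigmoid, and here is fitted by plain gradient descent on the mean cross-entropy loss. The learning rate, iteration count, function names, and toy data are illustrative assumptions; practical work normally would use a library implementation.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def add_bias(X):
    """Prepend a column of ones so the first weight acts as the intercept."""
    return np.column_stack([np.ones(len(X)), X])

def fit_logistic(X, y, lr=0.1, n_iter=2000):
    """Fit weights by gradient descent on the mean cross-entropy loss."""
    Xb = add_bias(X)
    w = np.zeros(Xb.shape[1])
    for _ in range(n_iter):
        p = sigmoid(Xb @ w)                # predicted probability of class 1
        w -= lr * Xb.T @ (p - y) / len(y)  # gradient of the mean cross-entropy
    return w

def predict(w, X, threshold=0.5):
    """Assign class 1 when the sigmoid output reaches the threshold, else class 0."""
    return (sigmoid(add_bias(X) @ w) >= threshold).astype(int)

X = np.array([[-2.5], [-1.5], [-0.5], [0.5], [1.5], [2.5]])
y = np.array([0, 0, 0, 1, 1, 1])
w = fit_logistic(X, y)
print(predict(w, X))
```

The number of weights (one per feature plus the intercept) is fixed in advance, matching the fixed parameter count described above.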

Decision trees and random forests

Decision trees get their name because they make a series of binary decisions until they reach a final decision. In the simplest case, a range of threshold values is tried for a single feature, and the best threshold is the one that classifies the most cases correctly. Just 1 such branch usually is too simple for practical uses. The training process consists of determining which feature to make a decision on (eg, age of the subject) and what the criterion is (eg, Is age >68?). The metric used for selecting the feature usually is the Gini impurity or the entropy (from which the information gain is computed) for classification trees, or the MSE or mean absolute error for regression trees. These metrics all aim to find the feature that most improves the prediction. Once that feature is determined, the threshold or decision criterion is computed. This process of finding the feature and criterion is applied recursively to each of the groups that result from the split until some stopping criterion is met (eg, no more than X decisions or fewer than Y examples remaining after a decision is applied).
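The split search described above can be sketched for a classification tree using the Gini impurity. For each feature, candidate thresholds are placed midway between consecutive distinct values, and the split with the lowest weighted impurity of the 2 resulting groups wins; a full tree would apply the same search recursively to each group until a stopping criterion is met. The function names and toy data (an age column and a binary column) are hypothetical.

```python
import numpy as np

def gini(labels):
    """Gini impurity: 0 for a pure group, larger when classes are mixed."""
    if len(labels) == 0:
        return 0.0
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return 1.0 - np.sum(p ** 2)

def best_split(X, y):
    """Return (feature_index, threshold, weighted_gini) of the best binary split."""
    best = (None, None, np.inf)
    n = len(y)
    for j in range(X.shape[1]):
        values = np.unique(X[:, j])
        # Candidate thresholds: midpoints between consecutive distinct values.
        for t in (values[:-1] + values[1:]) / 2.0:
            left = y[X[:, j] <= t]
            right = y[X[:, j] > t]
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if score < best[2]:
                best = (j, float(t), score)
    return best

# Columns: age, and an uninformative binary feature.
X = np.array([[25, 0], [40, 1], [55, 0], [70, 1], [75, 0], [80, 1]])
y = np.array([0, 0, 0, 1, 1, 1])
print(best_split(X, y))  # decision: Is age > 62.5?
```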

An important advantage of decision trees is how easy they are to interpret. Whereas many other machine learning models are close to black boxes, decision trees provide a graphical and intuitive way to understand what the model does.

Support vector machines

SVMs are based on the idea of finding a hyperplane that best divides the set of training examples into 2 classes. A hyperplane generalizes a line (in 2 dimensions) or a plane (in 3 dimensions) to spaces with any number of dimensions; because each example is a vector of features, the hyperplane has one dimension fewer than the feature vectors. The goal, then, is to determine the formula for the hyperplane that best separates the examples, which for an SVM means the one with the widest margin between the 2 classes. Support vectors are the examples nearest to the hyperplane: the points of a data set that, if removed, would alter the position of the dividing hyperplane. It is common to remap the points from the original n-dimensional space to a different space, typically through a kernel function, if that produces a better separation of the points. There also are hyperparameters (variables that are external to the model and whose values cannot be estimated from the data) that affect how a model develops. For instance, in the case of SVMs, a penalty must be assigned to an example that is on the wrong side of the decision plane. The hyperparameter (commonly called C) is the weight of that penalty: a large value penalizes misclassified training examples heavily, favoring a hyperplane that gets as many of them right as possible, whereas a small value tolerates more misclassified examples in exchange for a wider margin.
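The soft-margin idea can be illustrated with a linear SVM trained by subgradient descent on its primal objective, in which the hyperparameter `C` weights the hinge-loss penalty for examples inside the margin or on the wrong side of it. This NumPy sketch covers only the linear case (no kernel remapping), and the step size, iteration count, function names, and data are illustrative assumptions; dedicated solvers are used in practice.

```python
import numpy as np

def fit_linear_svm(X, y, C=1.0, lr=0.01, n_iter=1000):
    """Train a soft-margin linear SVM by subgradient descent.

    Minimizes 0.5*||w||^2 + C * sum(max(0, 1 - y_i * (w . x_i + b))),
    with class labels y_i in {-1, +1}.
    """
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(n_iter):
        margins = y * (X @ w + b)
        violators = margins < 1  # inside the margin or on the wrong side
        yv = y[violators][:, None]
        grad_w = w - C * (yv * X[violators]).sum(axis=0)
        grad_b = -C * y[violators].sum()
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def predict_svm(w, b, X):
    """Classify by which side of the hyperplane w . x + b = 0 each example lies on."""
    return np.where(X @ w + b >= 0, 1, -1)

X = np.array([[-2.0, -2.0], [-1.0, -2.0], [-2.0, -1.0],
              [2.0, 2.0], [1.0, 2.0], [2.0, 1.0]])
y = np.array([-1, -1, -1, 1, 1, 1])
w, b = fit_linear_svm(X, y, C=1.0)
print(w, b, predict_svm(w, b, X))
```

Raising `C` makes each margin violation more costly relative to the `0.5*||w||^2` term, which is the trade-off described above.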

Neural networks

Neural networks get their name because they are loosely modeled on how the neurons of the brain are believed to work. A neural network has an input layer, an output layer, and a variable number of middle (hidden) layers. The input layer receives the example vector: each entry in the vector goes to one neuron (hereafter referred to as a node). Each node then applies an activation function, similar to how a biological neuron fires given a strong enough input. The output of each node then is passed to every node of the next layer, but each of these connections has a weight that alters the strength of the signal. Each node in the next layer sums its weighted inputs, applies its activation function, and in turn passes its output to the following layer, again through weighted connections. This continues until the output layer is reached. Although earlier networks used sigmoidal functions because they resembled the behavior of biological neurons, it now is common to use simpler functions such as the rectified linear function (values below a threshold, usually 0, become 0, and values above the threshold are passed through).
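The forward pass just described can be written compactly when each layer's connection weights are stored as a matrix. In the NumPy sketch below, each node computes a weighted sum of the previous layer's outputs plus a bias term (a standard detail not spelled out above), hidden nodes apply a rectified linear activation, and the single output node applies a sigmoid. The layer sizes, weight values, and function names are arbitrary assumptions for illustration.

```python
import numpy as np

def relu(z):
    """Rectified linear activation: negative inputs become 0, others pass through."""
    return np.maximum(z, 0.0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(x, layers):
    """Propagate input vector x through a list of (weights, bias) layers."""
    a = np.asarray(x, dtype=float)
    for i, (W, b) in enumerate(layers):
        z = W @ a + b  # weighted sum of the previous layer's outputs
        is_output = i == len(layers) - 1
        a = sigmoid(z) if is_output else relu(z)
    return a

layers = [
    (np.array([[1.0, 0.0], [0.0, 1.0]]), np.zeros(2)),  # hidden layer: 2 nodes
    (np.array([[1.0, 1.0]]), np.array([-1.0])),         # output layer: 1 node
]
print(forward([1.0, -2.0], layers))
```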

Although the activation function is predetermined, the weights are learned. A common way those weights are learned (that is, altered until the network makes good predictions) is back propagation. Back propagation repeatedly adjusts the weights of the connections in the network so as to minimize a measure of the difference (the loss) between the actual output vector of the network and the desired output vector. When the prediction is badly wrong, the weights are altered more, and, as the prediction improves, the adjustments become smaller. An important challenge is deciding how much each weight should be adjusted, because not all weights contribute equally to the error and therefore should not all be adjusted by the same amount. Back propagation addresses this by using the chain rule to compute, layer by layer from the output backward, how much each weight contributed to the error.
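The sketch below shows back propagation for a network with one ReLU hidden layer and a sigmoid output trained with the cross-entropy loss. The forward pass stores intermediate values, and the backward pass applies the chain rule from the output toward the input to obtain the gradient of the loss with respect to every weight; a gradient-descent step then moves each weight against its own gradient. The architecture, loss, learning rate, and function names are illustrative assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss_and_grads(params, x, y):
    """Loss and per-weight gradients for one example, computed by back propagation."""
    W1, b1, W2, b2 = params
    # Forward pass, keeping intermediate values for the backward pass.
    z1 = W1 @ x + b1
    h = np.maximum(z1, 0.0)
    z2 = W2 @ h + b2
    p = sigmoid(z2)
    loss = -(y * np.log(p) + (1 - y) * np.log(1 - p)).sum()
    # Backward pass: apply the chain rule layer by layer.
    dz2 = p - y               # dL/dz2 for a sigmoid output with cross-entropy loss
    dW2 = np.outer(dz2, h)
    db2 = dz2
    dh = W2.T @ dz2
    dz1 = dh * (z1 > 0)       # ReLU passes gradient only where z1 > 0
    dW1 = np.outer(dz1, x)
    db1 = dz1
    return loss, [dW1, db1, dW2, db2]

def sgd_step(params, grads, lr=0.1):
    """Move every weight a small step against its own gradient."""
    return [P - lr * G for P, G in zip(params, grads)]

rng = np.random.default_rng(0)
params = [rng.normal(size=(3, 2)), rng.normal(size=3),
          rng.normal(size=(1, 3)), rng.normal(size=1)]
x, y = np.array([0.5, -1.0]), np.array([1.0])
for step in range(5):
    loss, grads = loss_and_grads(params, x, y)
    params = sgd_step(params, grads, lr=0.1)
    print(step, float(loss))
```

Weights with larger gradients receive larger updates, which is how back propagation avoids adjusting every weight by the same amount.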

Deep learning

Neural networks have been around for many years, but for a long time they had limited success. Attempts to use more than 1 hidden layer resulted not only in significant computing demands but also in algorithmic challenges in how to update the weights. As a result, neural networks fell out of favor, and SVMs and decision tree methods became much more popular.

Some scientists did continue to work on them, however, and made a splash in 2012 when their deep network system decisively won the ImageNet Large Scale Visual Recognition Challenge. A few factors appear to have come together to make this accomplishment possible. Although computers were benefiting from Moore’s law, deep learning in particular benefited from speed improvements much greater than Moore’s law because deep learning computations were mapped onto graphics processing units (GPUs), which had hundreds to thousands of cores, versus the 4 to 8 cores present in a typical central processing unit. Perhaps more important were theoretical advances that made these calculations work. These included better back propagation methods, which were a critical element of updating the weights in a multilayer neural network. The combination of convolutional layers with the traditional neural network, as well as other specialized layers, also made a difference.

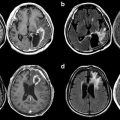

Convolutional Neural Networks

These first winning deep learning networks were convolutional networks, and they still enjoy much success today, albeit with some modifications. Although there are many variants of convolutional neural networks (CNNs), such as AlexNet, VGGNet, and GoogLeNet, all of them begin with convolutional layers, in which small kernels are passed over the image. The kernel size varies, although more modern architectures usually use 3 × 3 kernels. The layer after a convolution typically is a pooling layer, which reduces the size of the convolution output (eg, each 2 × 2 block is reduced to a single pixel), most often by taking the maximum value in the block (max pooling, or MaxPool). Another kernel then is applied to this reduced-resolution image, again followed by max pooling. The early layers typically find low-level features (eg, lines and edges); as pooling reduces resolution, later layers combine these low-level features to find higher-level features (eg, complete circles, eyes, or noses). After a few of these convolution and pooling layers, the output typically is flattened to a 1-dimensional vector, which then is passed into a fully connected network, the familiar neural network structure (Fig. 1).
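The convolution, pooling, and flattening steps can be sketched in NumPy for a single-channel image and a single kernel. As in most deep learning libraries, the "convolution" here is computed without flipping the kernel (strictly, a cross-correlation) and only where the kernel fits entirely inside the image. The vertical-edge kernel, toy image, and function names are assumptions chosen so that the result is easy to check by hand; real CNN kernels are learned.

```python
import numpy as np

def conv2d(image, kernel):
    """'Valid' 2D convolution as used in CNNs (kernel is not flipped)."""
    kh, kw = kernel.shape
    H, W = image.shape
    out = np.zeros((H - kh + 1, W - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool2x2(fmap):
    """Replace each non-overlapping 2 x 2 block with its maximum value."""
    H2, W2 = fmap.shape[0] // 2, fmap.shape[1] // 2
    trimmed = fmap[:H2 * 2, :W2 * 2]
    return trimmed.reshape(H2, 2, W2, 2).max(axis=(1, 3))

def relu(z):
    return np.maximum(z, 0.0)

image = np.zeros((10, 10))
image[:, 5:] = 1.0                            # dark left half, bright right half
edge_kernel = np.array([[-1.0, 0.0, 1.0]] * 3)
fmap = relu(conv2d(image, edge_kernel))       # 8 x 8 feature map
pooled = max_pool2x2(fmap)                    # 4 x 4 after 2 x 2 max pooling
vector = pooled.ravel()                       # flattened input for a fully connected network
print(pooled)
```

The feature map responds only along the vertical edge, and pooling halves the resolution while keeping that response, which is the low-level-to-higher-level progression described above.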