Abstract

Ultrasound (US) imaging is radiation-free, penetrates deeply, and operates in real time, whereas optical coherence tomography (OCT) offers high resolution. Fusing endometrial OCT and US images aims to combine the strengths of both modalities and thereby obtain more complete information on endometrial thickness. To integrate these multimodal images, we propose a Symmetric Dual-branch Residual Dense network (SDRD-Net) for OCT and US endometrial image fusion. First, Multi-scale Residual Dense Blocks (MRDB) extract shallow features from each modality. Next, the Base Transformer Module (BTM) and the Detail Extraction Module (DEM) extract primary and advanced features, respectively. Finally, the Feature Fusion Module (FFM) decomposes and recombines the primary and advanced features and outputs the fused image. We conducted experiments on both private and public datasets, covering infrared and visible image fusion (IVF) and medical image fusion (MIF) tasks, and achieved favorable results.

Introduction

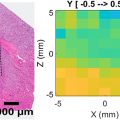

Image fusion combines images of the same scene captured by different sensors, compensating for information that a single-modality image lacks. However, to our knowledge, multimodal image fusion has not yet been applied to endometrial images from ultrasound (US) and optical coherence tomography (OCT). The endometrium, the epithelial tissue layer lining the uterus, is closely linked to female fertility and reproductive health, and an endometrium that is either too thick or too thin may affect fertility. Measuring endometrial thickness is therefore crucial for the clinical diagnosis of endometrial diseases [ ].

In clinical practice, imaging is an essential tool for assessing the endometrium in vivo, because it directly shows structural changes in the lining. Ultrasound (US) is a safe imaging technique that uses no radioactive material or ionizing radiation. US also offers high penetration and real-time imaging [ ], so it can provide information from deeper endometrial tissue. Many studies have segmented the endometrium in transvaginal ultrasound (TVUS) images and automated the measurement of endometrial thickness [ ]. However, US resolution is limited to about 100–150 microns, which is insufficient to resolve the fine superficial structure of the endometrium. Despite this, US remains the imaging tool of choice for measuring endometrial thickness [ ]. Optical coherence tomography (OCT), by contrast, is an emerging imaging technique with a much higher resolution of 10–20 microns, from which detailed superficial tissue information can be obtained [ ]. However, the main limitation of OCT is its shallow penetration depth, which restricts its use for deeper structures or larger tissue volumes [ , ].

Combining the high resolution of OCT with the penetration depth of US through image fusion could provide a more comprehensive view of endometrial tissue. This approach has the potential to improve diagnostic accuracy, particularly for endometrial thickness measurement, where both detailed surface and depth information are critical. Deep learning for multimodal image fusion [ ] offers useful guidance; in particular, work on transformers [ , ], invertible neural networks [ , ], and multi-scale dense and residual networks [ , ] lays the foundation for our approach. To fully exploit the complementary information in OCT and US images, we proceed as follows. First, we use the global attention and long-range dependency modeling of the transformer to capture global image information. Second, to avoid losing image features, we use the lossless information transfer of invertible neural networks, whose input and output features can each be recovered from the other. Finally, to extract and retain rich feature information, we use the feature extraction and retention capabilities of multi-scale dense and residual networks. Together, these components let us integrate OCT and US image information as fully as possible and obtain complete endometrial tissue information, which supports endometrial thickness measurement and medical diagnosis [ , ].

We therefore introduce SDRD-Net, a symmetric dual-branch residual dense network for fusing OCT and US images. Its workflow is shown in Figure 1. Our contributions are as follows:

1. We propose SDRD-Net, the first network for fusing OCT and US endometrial images, which yields more complete endometrial thickness information.

2. We design a multi-scale residual dense block (MRDB) that extracts shallow features from each modality and a feature fusion module (FFM) that decomposes and recombines the features in the final stage.

3. We enhance the INN module and combine it with the base transformer module to form a dual-branch feature extraction block that extracts both primary and advanced features from the images of each modality.

4. We conduct experiments on both private and public datasets; the favorable results demonstrate the effectiveness and adaptability of our model.

Related work

This section reviews representative work on vision transformers, invertible neural networks, and multi-scale dense and residual networks, which together form the basis of our framework.

Vision transformer

The transformer was first proposed by Vaswani et al. [ ] for natural language processing (NLP), and ViT later adapted it to computer vision [ ]. Since then, many transformer-based models have achieved strong results in fields such as object detection [ ], image segmentation [ ], image classification [ ], and image fusion [ , ]. For low-level vision tasks, transformers combined with multi-task learning [ ] or Swin Transformer blocks [ ] have achieved state-of-the-art results compared with convolutional neural network (CNN)-based approaches. Other advanced networks [ , ] are similarly competitive.

Because spatial self-attention in the transformer is computationally expensive, Wu et al. [ ] proposed the lightweight Lite Transformer (LT), which uses long-short range attention and a flattened feed-forward network to greatly reduce the number of parameters while maintaining performance. We use this structure in our Base Transformer Module (BTM).

Invertible neural networks (INN)

An invertible neural network (INN) is a network architecture that realizes a bijective mapping between input and output, so the input can be recovered exactly from the output. INNs were pioneered by Dinh et al. [ ]. To improve image modeling, Dinh et al. [ ] subsequently introduced RealNVP, which incorporates convolutional and multi-scale layers into the coupling layers. Owing to their efficient invertibility and lossless information preservation, INNs have been applied to image colorization [ ], image hiding [ ], image rescaling [ ], and image and video super-resolution [ , ].

Because INNs cannot be applied directly within a convolutional neural network for feature fusion, Zhou et al. [ ] designed an invertible module with affine coupling layers that enables effective feature fusion. Our Detail Extraction Module (DEM) builds on this design.

Multi-scale dense networks and residual networks

In convolutional neural networks, convolutions at different scales extract features with different levels of detail, and many image processing tasks cannot be solved using detail features or contour features alone. Multi-scale convolutional neural networks [ ] were therefore proposed to capture both detail and contour information, and most current deep learning-based algorithms [ ] use them. However, in multimodal image tasks, multi-scale convolutional neural networks suffer from network redundancy and fail to extract image features deeply and retain them consistently. Dense networks [ ] and residual networks [ ] offer a remedy. For example, Fu et al. [ ] combine multi-scale convolutional, dense, and residual networks to reduce redundancy while extracting and enhancing deep image features. Our MRDB builds on this idea, but we optimize the network structure to improve feature extraction and retention, as shown in Figure 1 and Table 1.

| Conv block | Kernel size | Stride | Padding | Input channels | Output channels |
|---|---|---|---|---|---|
| V1 | 1 × 1 | 1 | 0 | 64 | 64 |
| V2 | 3 × 3 | 1 | 1 | 64 | 64 |
| V3 | 5 × 5 | 1 | 2 | 64 | 64 |
| V4 | 1 × 1 | 1 | 0 | 128 | 128 |
| V5 | 3 × 3 | 1 | 1 | 128 | 128 |
| V6 | 5 × 5 | 1 | 2 | 128 | 128 |
| V7 | 1 × 1 | 1 | 0 | 768 | 64 |
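Every row of Table 1 uses stride 1 with padding (k − 1)/2, so each convolution should keep the height and width of the feature map unchanged, which lets the outputs of the different-scale branches be combined position by position. The Python sketch below checks this with the standard convolution output-size formula and also counts the weights of each layer. The bias term and the 256 × 256 input size are our own assumptions, not values from the paper.

```python
# Sketch: verify that every convolution in Table 1 preserves spatial size.
# Assumptions (not stated in the paper): one bias per output channel and a
# hypothetical 256 x 256 input in the usage example.


def conv_out_size(size: int, kernel: int, stride: int, padding: int) -> int:
    """Standard output length of a convolution along one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1


def conv_params(kernel: int, in_ch: int, out_ch: int, bias: bool = True) -> int:
    """Learnable weights of a k x k convolution, plus an optional bias."""
    return kernel * kernel * in_ch * out_ch + (out_ch if bias else 0)


# (block, kernel, stride, padding, input channels, output channels), from Table 1
TABLE_1 = [
    ("V1", 1, 1, 0, 64, 64),
    ("V2", 3, 1, 1, 64, 64),
    ("V3", 5, 1, 2, 64, 64),
    ("V4", 1, 1, 0, 128, 128),
    ("V5", 3, 1, 1, 128, 128),
    ("V6", 5, 1, 2, 128, 128),
    ("V7", 1, 1, 0, 768, 64),
]

if __name__ == "__main__":
    size = 256  # hypothetical input height and width
    for name, k, s, p, c_in, c_out in TABLE_1:
        out = conv_out_size(size, k, s, p)
        print(f"{name}: {size} -> {out} pixels, {conv_params(k, c_in, c_out)} parameters")
```

Because the spatial size never changes, any resizing between branches is unnecessary; the channel columns then determine how the branch outputs are stacked and reduced.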

Because multi-scale dense and residual networks excel at extracting features and retaining them across layers, they have been widely applied to other image processing tasks, such as image segmentation [ ] and object detection [ , ].

Method

This section presents the structure and loss function of the proposed model.

Main framework of the model

SDRD-Net consists of four main modules, shown in Figure 1: the multi-scale residual dense block (MRDB), the feature fusion module (FFM), the base transformer module (BTM), and the detail extraction module (DEM). MRDB combines the benefits of multi-scale dense and multi-scale residual networks to enhance the initial features. BTM and DEM extract the primary and advanced features, respectively. Finally, FFM recombines and deepens the features of the input images and outputs the fused image. Moreover, our model generalizes well, giving good results on both private and public datasets.
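The order in which the four modules are applied can be summarized as a small function. In this Python sketch every module is a caller-supplied placeholder, feature maps are flattened to plain lists, and the assumption that the feature fusion module receives all four feature sets as separate arguments is ours; the paper does not specify this interface.

```python
from typing import Callable, List

Feature = List[float]  # stand-in for a feature map, flattened for simplicity


def sdrd_forward(
    oct_img: Feature,
    us_img: Feature,
    mrdb: Callable[[Feature], Feature],
    btm: Callable[[Feature], Feature],
    dem: Callable[[Feature], Feature],
    ffm: Callable[[Feature, Feature, Feature, Feature], Feature],
) -> Feature:
    """Data flow of SDRD-Net; each module is a hypothetical placeholder."""
    # Shallow features: the MRDB is applied to each modality, S = M(x).
    s_oct, s_us = mrdb(oct_img), mrdb(us_img)
    # Primary (global) features from the BTM branch, F = B(S).
    f_oct, f_us = btm(s_oct), btm(s_us)
    # Advanced (detail) features from the DEM branch, F1 = D(S).
    f1_oct, f1_us = dem(s_oct), dem(s_us)
    # The feature fusion module recombines all four feature sets.
    return ffm(f_oct, f_us, f1_oct, f1_us)


if __name__ == "__main__":
    # Toy element-wise modules, used only to exercise the wiring.
    fused = sdrd_forward(
        [0.2, 0.8], [0.6, 0.4],
        mrdb=lambda x: [2 * v for v in x],
        btm=lambda s: [v + 1 for v in s],
        dem=lambda s: [v - 1 for v in s],
        ffm=lambda a, b, c, d: [(p + q + r + t) / 4 for p, q, r, t in zip(a, b, c, d)],
    )
    print(fused)
```

Note that BTM and DEM both read the same shallow features, which is what makes the network dual-branch rather than a single sequential chain.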

Multi-scale residual dense block (MRDB)

The MRDB is a new feature extraction module that combines the advantages of the multi-scale residual module and the dense module [ ], as shown in Figure 1. First, it uses the skip connection of the residual module, which connects the input of a convolutional layer to its output so that features from earlier layers are reused directly; this reduces redundancy and strengthens feature extraction. Skip connections add few or no parameters while improving training efficiency and convergence speed. Second, it uses the cascade connections of dense modules, which connect layers more densely than residual modules. Cascade connections increase the number of parameters but yield richer feature information. We also optimized the network architecture to balance performance and computational efficiency, so that our approach does not take longer to train than the original and the network structure remains scalable, as shown in Table 1. Finally, we designed a multi-scale convolution consisting of three convolutional layers of different scales (1 × 1, 3 × 3, and 5 × 5 kernels in Table 1) to capture both the details and the contours of the image.

The experimental results in Table 3 show that MRDB is more effective than residual or dense modules used alone at enhancing feature extraction, capturing the contour details of OCT and US images, and preserving the integrity of their features. The features it extracts can be expressed as:

$$S_{\mathrm{oct}} = M(\mathrm{OCT}), \quad S_{\mathrm{us}} = M(\mathrm{US}),$$

where $M(\cdot)$ denotes the MRDB, and $S_{\mathrm{oct}}$ and $S_{\mathrm{us}}$ denote the shallow features extracted by the MRDB, which serve as the initial features for the subsequent modules.
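To make the three ingredients of the MRDB concrete, the sketch below wires them together on a single-channel 1-D signal: parallel branches with kernel lengths 1, 3, and 5 (mirroring the 1 × 1, 3 × 3, and 5 × 5 kernels of Table 1), a dense-style cascade that keeps the block input alongside the branch outputs, a 1 × 1 fusion step, and a residual skip connection. It is a didactic toy in pure Python, not the paper's MRDB; the 1-D setting, the kernel values, the ReLU activation, and the single fusion step are all assumptions.

```python
from typing import List, Sequence

Signal = List[float]


def conv1d_same(x: Sequence[float], kernel: Sequence[float]) -> Signal:
    """Stride-1 1-D cross-correlation with zero padding that preserves length (odd kernel)."""
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(x) + [0.0] * pad
    return [sum(w * padded[i + j] for j, w in enumerate(kernel)) for i in range(len(x))]


def relu(x: Sequence[float]) -> Signal:
    return [max(0.0, v) for v in x]


def toy_mrdb(x: Sequence[float], kernels: Sequence[Sequence[float]],
             fuse_weights: Sequence[float]) -> Signal:
    # Multi-scale branches: one length-preserving convolution per kernel size.
    branches = [relu(conv1d_same(x, k)) for k in kernels]
    # Dense-style cascade: the block input travels alongside every branch output.
    stacked = [list(x)] + branches
    # 1x1 fusion: a per-position weighted sum over the stacked channels.
    fused = [sum(w * ch[i] for w, ch in zip(fuse_weights, stacked)) for i in range(len(x))]
    # Residual skip connection: add the block input back.
    return [f + xi for f, xi in zip(fused, x)]


if __name__ == "__main__":
    kernels = [[1.0], [0.25, 0.5, 0.25], [0.1, 0.2, 0.4, 0.2, 0.1]]  # hypothetical values
    print(toy_mrdb([0.0, 1.0, 0.0, 2.0], kernels, fuse_weights=[0.1, 0.3, 0.3, 0.3]))
```

Setting all fusion weights to zero reduces the block to the identity, which illustrates why the skip connection makes such blocks easy to optimize: each block only has to learn a correction to its input.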

Feature fusion module (FFM)

The feature fusion module (FFM), shown in Figure 2, is a modification of the MRDB in which a 1×1 convolution precedes the MRDB to reduce the channel count, helping maintain information integrity during feature fusion. In the final fusion stage, the resulting feature map passes through two convolutions with nonlinear activation to produce the final feature map.
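The 1×1 convolution placed before the MRDB in the feature fusion module mixes channels independently at every pixel, so reducing C_in channels to C_out amounts to multiplying each pixel's channel vector by a C_out × C_in weight matrix. The pure-Python sketch below shows this operation; the channel counts in the usage example (four input channels reduced to two) are hypothetical and chosen only for readability.

```python
from typing import List, Optional

FeatureMap = List[List[List[float]]]  # [channels][height][width]


def conv1x1(feature: FeatureMap, weight: List[List[float]],
            bias: Optional[List[float]] = None) -> FeatureMap:
    """1x1 convolution: out[o][y][x] = bias[o] + sum_c weight[o][c] * feature[c][y][x]."""
    c_in, height, width = len(feature), len(feature[0]), len(feature[0][0])
    if any(len(row) != c_in for row in weight):
        raise ValueError("each weight row must have one entry per input channel")
    out: FeatureMap = []
    for o, row in enumerate(weight):
        b = bias[o] if bias is not None else 0.0
        out.append([[b + sum(row[c] * feature[c][y][x] for c in range(c_in))
                     for x in range(width)] for y in range(height)])
    return out


if __name__ == "__main__":
    # Four hypothetical 1x2 input channels.
    feats = [[[1.0, 2.0]], [[3.0, 4.0]], [[5.0, 6.0]], [[7.0, 8.0]]]
    # Reduce 4 channels to 2 by averaging channel pairs (0, 1) and (2, 3).
    w = [[0.5, 0.5, 0.0, 0.0], [0.0, 0.0, 0.5, 0.5]]
    print(conv1x1(feats, w))  # [[[2.0, 3.0]], [[6.0, 7.0]]]
```

Because no spatial neighborhood is involved, this reduction changes only the channel dimension, leaving the spatial layout of the features untouched for the subsequent MRDB.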

Base transformer module (BTM)

To obtain richer OCT and US feature information, we employ a base transformer module (BTM), shown in Figure 3, composed of LayerNorm, spatial self-attention (SSA), and a multilayer perceptron (MLP). First, LayerNorm normalizes the features of each position across channels, keeping feature scales consistent. Second, SSA lets the model relate different positions of the input image, capturing both global and local relationships and deriving rich global contextual information. Finally, the MLP transforms the features for higher-level feature extraction. BTM also uses skip connections, which mitigate vanishing gradients. With BTM, we capture the global contextual information and long-range dependencies within the OCT and US image features. It can be expressed as follows:

$$F_{\mathrm{oct}} = B(S_{\mathrm{oct}}), \quad F_{\mathrm{us}} = B(S_{\mathrm{us}}),$$

where $B(\cdot)$ denotes the BTM, and $F_{\mathrm{oct}}$ and $F_{\mathrm{us}}$ denote the primary features extracted by the BTM.
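The sketch below shows one common arrangement of the three BTM components: LayerNorm, self-attention over all spatial positions (each position of the feature map treated as a token), and an MLP, each wrapped by a skip connection. It is plain Python with a single attention head and explicit query, key, and value matrices. It uses standard scaled dot-product self-attention rather than the lightweight LT attention adopted for the BTM, and the pre-norm ordering, ReLU activation, and weight shapes are our assumptions.

```python
import math
from typing import List

Vec = List[float]
Mat = List[List[float]]  # row-major, shape [out_dim][in_dim]


def layer_norm(v: Vec, eps: float = 1e-5) -> Vec:
    """Normalize one token's features to zero mean and unit variance (no affine params)."""
    mean = sum(v) / len(v)
    var = sum((a - mean) ** 2 for a in v) / len(v)
    return [(a - mean) / math.sqrt(var + eps) for a in v]


def matvec(m: Mat, v: Vec) -> Vec:
    return [sum(w * a for w, a in zip(row, v)) for row in m]


def softmax(scores: Vec) -> Vec:
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens: List[Vec], wq: Mat, wk: Mat, wv: Mat) -> List[Vec]:
    """Single-head scaled dot-product attention: every token attends to every position."""
    q = [matvec(wq, t) for t in tokens]
    k = [matvec(wk, t) for t in tokens]
    v = [matvec(wv, t) for t in tokens]
    scale = 1.0 / math.sqrt(len(q[0]))
    out = []
    for qi in q:
        weights = softmax([scale * sum(a * b for a, b in zip(qi, kj)) for kj in k])
        out.append([sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(len(v[0]))])
    return out


def mlp(v: Vec, w1: Mat, w2: Mat) -> Vec:
    hidden = [max(0.0, h) for h in matvec(w1, v)]  # ReLU (assumed activation)
    return matvec(w2, hidden)


def btm_block(tokens: List[Vec], wq: Mat, wk: Mat, wv: Mat, w1: Mat, w2: Mat) -> List[Vec]:
    """Pre-norm block: y = x + SSA(LN(x)), then out = y + MLP(LN(y))."""
    attn = self_attention([layer_norm(t) for t in tokens], wq, wk, wv)
    y = [[a + b for a, b in zip(t, u)] for t, u in zip(tokens, attn)]  # skip connection
    return [[a + b for a, b in zip(t, mlp(layer_norm(t), w1, w2))] for t in y]  # skip connection


if __name__ == "__main__":
    # Three hypothetical 2-channel tokens, e.g. three pixel positions of a feature map.
    tokens = [[1.0, 2.0], [3.0, -1.0], [0.5, 0.5]]
    eye = [[1.0, 0.0], [0.0, 1.0]]
    w1 = [[1.0, -1.0], [0.5, 0.5], [-1.0, 1.0]]  # 2 -> 3 hidden units
    w2 = [[0.2, 0.1, 0.0], [0.0, 0.1, 0.2]]      # 3 -> 2
    print(btm_block(tokens, eye, eye, eye, w1, w2))
```

Every output token is a weighted combination over all positions, which is where the global context comes from; the cost is quadratic in the number of positions, the motivation for lighter variants such as LT.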

Detail extraction module (DEM)

The detail extraction module (DEM), shown in Figure 4, extracts high-frequency detail information from the features, such as image edges and textures. It is expressed as:

$$F^{1}_{\mathrm{oct}} = D(S_{\mathrm{oct}}), \quad F^{1}_{\mathrm{us}} = D(S_{\mathrm{us}}),$$

where $D(\cdot)$ denotes the DEM, and $F^{1}_{\mathrm{oct}}$ and $F^{1}_{\mathrm{us}}$ denote the advanced features extracted by the DEM.

To preserve as much information as possible, we employ an INN [ ] block with affine coupling layers [ ], whose lossless transformation is well suited to retaining image feature information. Taking the OCT features $S_{\mathrm{oct},k}$ at the $k$-th layer as an example, with the $D$ feature channels split at index $d$, the invertible transformation is:

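$$S_{\mathrm{oct},k+1}[d+1:D] = S_{\mathrm{oct},k}[d+1:D] + T_1\left(S_{\mathrm{oct},k}[1:d]\right),$$

$$S_{\mathrm{oct},k+1}[1:d] = S_{\mathrm{oct},k}[1:d] \odot \exp\left(T_2\left(S_{\mathrm{oct},k+1}[d+1:D]\right)\right) + T_3\left(S_{\mathrm{oct},k+1}[d+1:D]\right),$$

$$S_{\mathrm{oct},k+1} = \mathrm{Cat}\left(S_{\mathrm{oct},k+1}[1:d],\ S_{\mathrm{oct},k+1}[d+1:D]\right),$$

where $\odot$ denotes element-wise multiplication, $\mathrm{Cat}(\cdot)$ denotes channel-wise concatenation, and $T_1$, $T_2$, and $T_3$ are learnable mappings.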
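Because each half of the features is updated using only the other half, the coupling step can be undone exactly: first recover the first d channels by subtracting T3 and dividing by exp(T2), both evaluated on the already available updated second half, then recover the second half by subtracting T1 of the recovered first half. The pure-Python sketch below implements the forward step of the coupling equations and this inverse on a single feature vector, joining the two halves by concatenation as affine coupling layers do. The functions t1, t2, and t3 are hypothetical stand-ins for the learned T1, T2, and T3; the round trip succeeds even though they are deliberately not invertible.

```python
import math
from typing import Callable, List

Vec = List[float]
Mapping = Callable[[Vec], Vec]


def coupling_forward(s: Vec, d: int, t1: Mapping, t2: Mapping, t3: Mapping) -> Vec:
    """One affine coupling step; s[:d] plays S_k[1:d] and s[d:] plays S_k[d+1:D]."""
    first, second = s[:d], s[d:]
    # S_{k+1}[d+1:D] = S_k[d+1:D] + T1(S_k[1:d])
    second_next = [h + u for h, u in zip(second, t1(first))]
    # S_{k+1}[1:d] = S_k[1:d] * exp(T2(.)) + T3(.), both evaluated on S_{k+1}[d+1:D]
    scale, shift = t2(second_next), t3(second_next)
    first_next = [val * math.exp(a) + b for val, a, b in zip(first, scale, shift)]
    return first_next + second_next  # concatenate the two halves


def coupling_inverse(s_next: Vec, d: int, t1: Mapping, t2: Mapping, t3: Mapping) -> Vec:
    """Exact inverse of coupling_forward; t1, t2, t3 are only evaluated, never inverted."""
    first_next, second_next = s_next[:d], s_next[d:]
    scale, shift = t2(second_next), t3(second_next)
    first = [(val - b) * math.exp(-a) for val, a, b in zip(first_next, scale, shift)]
    second = [h - u for h, u in zip(second_next, t1(first))]
    return first + second


# Hypothetical, deliberately non-invertible stand-ins for T1, T2, T3 (D = 4, d = 2).
def t1(v: Vec) -> Vec:
    return [math.tanh(v[0] + v[1]), v[0] * v[1]]


def t2(v: Vec) -> Vec:
    return [0.5 * math.sin(v[0]), 0.1 * v[1] ** 2]


def t3(v: Vec) -> Vec:
    return [v[1], -abs(v[0])]


if __name__ == "__main__":
    x = [0.3, -1.2, 2.0, 0.7]
    y = coupling_forward(x, 2, t1, t2, t3)
    print(y, coupling_inverse(y, 2, t1, t2, t3))
```

This is why the learned mappings inside an affine coupling layer can be arbitrary networks: invertibility comes from the coupling structure, not from the mappings themselves.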

Loss functions

The loss function plays a vital role in image fusion: it helps the model fully preserve the features of OCT and US images, such as the uterine cavity edges in OCT images and the texture information related to endometrial thickness in US images. We therefore use three loss terms: the feature decomposition loss $\mathcal{L}_{fd}$ [ ], the intensity loss $\mathcal{L}_{int}$ [ ], and the gradient loss $\mathcal{L}_{grad}$ [ ]. The overall loss function of the network can be expressed as:

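$$\mathcal{L}_{total} = \alpha_1 \mathcal{L}_{fd} + \alpha_2 \mathcal{L}_{int} + \alpha_3 \mathcal{L}_{grad},$$

where $\alpha_1$, $\alpha_2$, and $\alpha_3$ are weighting coefficients.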
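This section does not spell out the three loss terms, so the pure-Python sketch below uses formulations common in recent fusion work, which should be read as assumptions rather than the paper's definitions: an L1 intensity loss against the pixel-wise maximum of the two source images, an L1 gradient loss against the pixel-wise maximum of their gradient magnitudes (approximated here with forward differences rather than a Sobel operator), and a correlation-based decomposition loss that is small when the primary features of the two modalities correlate and their advanced features do not. The constant 1.01 and the default weights of 1.0 are placeholders.

```python
import math
from typing import List, Sequence, Tuple

Image = List[List[float]]


def l1_mean(a: Image, b: Image) -> float:
    """Mean absolute difference between two equally sized images."""
    n = len(a) * len(a[0])
    return sum(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb)) / n


def pixel_max(a: Image, b: Image) -> Image:
    return [[max(x, y) for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def grad_mag(img: Image) -> Image:
    """|dI/dx| + |dI/dy| via forward differences (zero on the last row/column)."""
    h, w = len(img), len(img[0])
    return [[(abs(img[y][x + 1] - img[y][x]) if x + 1 < w else 0.0)
             + (abs(img[y + 1][x] - img[y][x]) if y + 1 < h else 0.0)
             for x in range(w)] for y in range(h)]


def intensity_loss(fused: Image, oct_img: Image, us_img: Image) -> float:
    return l1_mean(fused, pixel_max(oct_img, us_img))


def gradient_loss(fused: Image, oct_img: Image, us_img: Image) -> float:
    return l1_mean(grad_mag(fused), pixel_max(grad_mag(oct_img), grad_mag(us_img)))


def pearson(a: Sequence[float], b: Sequence[float]) -> float:
    """Pearson correlation of two flattened feature maps (tiny constant avoids 0/0)."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b + 1e-12)


def decomposition_loss(primary_oct: Sequence[float], primary_us: Sequence[float],
                       advanced_oct: Sequence[float], advanced_us: Sequence[float],
                       eps: float = 1.01) -> float:
    """Assumed form: squared advanced-feature correlation over (eps + primary correlation)."""
    cc_primary = pearson(primary_oct, primary_us)
    cc_advanced = pearson(advanced_oct, advanced_us)
    return cc_advanced ** 2 / (eps + cc_primary)


def total_loss(l_fd: float, l_int: float, l_grad: float,
               alphas: Tuple[float, float, float] = (1.0, 1.0, 1.0)) -> float:
    """Weighted sum L_total = a1 * L_fd + a2 * L_int + a3 * L_grad."""
    a1, a2, a3 = alphas
    return a1 * l_fd + a2 * l_int + a3 * l_grad
```

The max-based targets encode the intuition that the fused image should keep the brighter and sharper of the two sources at every pixel, while eps greater than 1 keeps the decomposition denominator positive for any correlation in [-1, 1].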
