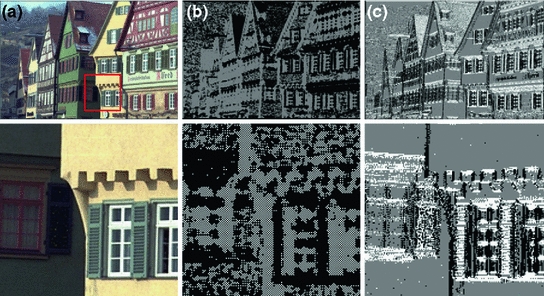

Fig. 1

Bayer CFA patterns with center pixels of a red, b blue, and c green channels

Figure 1a, b and c show the Bayer CFA patterns, which have an R, B, and G pixel at the center of the pattern, respectively. With the CFA pattern of Fig. 1a, the unknown G pixel value is estimated first by considering the direction of interpolation using horizontal and vertical gradients, which are respectively defined as

(1)

(2)

where $G_{p,q}$ and $R_{p,q}$ represent the known G and R pixel values at (p, q) in the CFA pattern, respectively. Using the horizontal gradient $\Delta H_{i,j}$ and the vertical gradient $\Delta V_{i,j}$, the unknown G pixel value $\hat{G}_{i,j}$ is computed as [4], [15]

$$\begin{aligned} \hat{G}_{i,j} = \left\{ \begin{array}{ll} \dfrac{G_{i,j-1} + G_{i,j+1}}{2} + \dfrac{2R_{i,j} - R_{i,j-2} - R_{i,j+2}}{4}, & \text{if}\ \Delta H_{i,j} < \Delta V_{i,j} \\ \dfrac{G_{i-1,j} + G_{i+1,j}}{2} + \dfrac{2R_{i,j} - R_{i-2,j} - R_{i+2,j}}{4}, & \text{if}\ \Delta H_{i,j} > \Delta V_{i,j} \\ \dfrac{G_{i-1,j} + G_{i+1,j} + G_{i,j-1} + G_{i,j+1}}{4} + \dfrac{4R_{i,j} - R_{i-2,j} - R_{i+2,j} - R_{i,j-2} - R_{i,j+2}}{8}, & \text{otherwise.} \end{array} \right. \end{aligned}$$

(3)

Figure 1b is similar to Fig. 1a, only with R and B interchanged. Thus, with the CFA pattern of Fig. 1b, the unknown G pixel value $\hat{G}_{i,j}$ is estimated using (1)–(3), only with $R_{p,q}$ replaced by $B_{p,q}$. Figure 1c shows the Bayer CFA pattern at a G center pixel with unknown R and B pixel values. The unknown R and B pixel values are estimated using the interpolated G pixel values, under the assumption that the high-frequency components are similar across the three color components, R, G, and B.
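The three branches of Eq. (3) can be sketched in Python as follows. This is only an illustrative sketch: the function name `interpolate_green` and its arguments are hypothetical, and the gradients of Eqs. (1) and (2) are passed in by the caller rather than computed, since their definitions are not reproduced here.

```python
import numpy as np

def interpolate_green(cfa, i, j, delta_h, delta_v):
    """Estimate G at an R center pixel (i, j) following the branches of Eq. (3).

    cfa is a 2-D array of raw CFA samples; (i, j) must lie at least two
    pixels away from every border. delta_h and delta_v are the horizontal
    and vertical gradients, supplied by the caller (assumption).
    """
    def p(di, dj):
        # Read a neighbour as float to avoid unsigned-integer wraparound.
        return float(cfa[i + di, j + dj])

    center = p(0, 0)
    if delta_h < delta_v:
        # Horizontal interpolation with a second-order correction from R.
        return (p(0, -1) + p(0, 1)) / 2 + (2 * center - p(0, -2) - p(0, 2)) / 4
    if delta_h > delta_v:
        # Vertical interpolation with a second-order correction from R.
        return (p(-1, 0) + p(1, 0)) / 2 + (2 * center - p(-2, 0) - p(2, 0)) / 4
    # No dominant direction: average both directions.
    return ((p(-1, 0) + p(1, 0) + p(0, -1) + p(0, 1)) / 4
            + (4 * center - p(-2, 0) - p(2, 0) - p(0, -2) - p(0, 2)) / 8)

# On a linear ramp the correction terms vanish and the estimate equals the ramp value.
cfa = np.arange(25, dtype=np.uint8).reshape(5, 5)
print(interpolate_green(cfa, 2, 2, 1.0, 2.0))  # 12.0
```

For the pattern of Fig. 1b the same function applies unchanged, because the center and its second neighbours are then B samples instead of R samples.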

To evaluate the performance of the proposed algorithm, six conventional demosaicing algorithms [8, 9, 11, 15, 16, 20] are simulated.

Gunturk et al.’s algorithm [8] is a projection-onto-convex-sets based method in the wavelet domain. This method reconstructs the color channels using two constraint sets. The first constraint set is defined based on the known pixel values and prior knowledge about the correlation between color channels. The second constraint set forces the high-frequency components of the R and B channels to be similar to those of the G channel. This method gives high PSNRs for a set of Kodak images. However, the algorithm gives poor visual quality near line edges such as fences and window frames, because it has difficulty in converging to a good solution in the feasibility set by iteratively projecting onto the given constraint sets and iteratively updating the interpolated values.

Li’s algorithm [9] models the inter-channel correlation in the spatial domain. This algorithm successively interpolates the unknown R, G, and B pixel values, enforcing the color difference rule at each iteration. A spatially adaptive stopping criterion is used to suppress demosaicing artifacts. This algorithm gives high PSNRs for a set of Kodak images. However, like Gunturk et al.’s algorithm, it sometimes gives poor visual quality near line edges such as fences and window frames, because of the difficulty in selecting an appropriate stopping criterion for the updating procedure.

Lu and Tan’s algorithm [11] improves the hybrid demosaicing algorithm that consists of two successive steps: an interpolation step to reconstruct a full-color image and a refinement step to reduce visible demosaicing artifacts (such as false color and zipper artifacts). In the interpolation step, every unknown pixel value is interpolated by properly combining the estimates obtained from four edge directions, which are defined according to the nearest same-color value in the CFA pattern. The refinement step is selectively applied to artifact-prone regions, which are identified by the discrete Laplacian operator. Lu and Tan also propose a new image measure for quantifying the performance of demosaicing algorithms.

Chung and Chan’s algorithm [15] is an adaptive demosaicing algorithm, which can effectively preserve the details in edge/texture regions and reduce false color. To estimate the direction of interpolation for the unknown G pixel values, this algorithm uses the variance of the color differences in a local region along the horizontal and vertical directions. The interpolation direction is the direction that gives the minimum variance of color difference. After interpolation of the G channel, the unknown R and B pixel values are estimated at the G pixel sampling positions in the CFA pattern.

Menon et al.’s algorithm [16] is based on directional filtering and a posteriori decision, where the edge-directed interpolation is applied to reconstruction of a full-resolution G component. This algorithm uses a five-tap FIR filter to reconstruct the G channel along horizontal and vertical directions. Then R and B components are interpolated using the reconstructed G component. In the refinement step, the low and high frequency components in each pixel are separated. The high frequency components of the unknown pixel value are replaced with the high frequency of the known components.

The proposed demosaicing algorithm gives better visual quality near line edges such as window frames and fences than conventional algorithms, because the edge direction is detected by absolute differences of absolute inter-channel differences (ADAID) between adjacent pixels in the CFA image.

In Sect. 3, we describe the proposed demosaicing algorithm using the ADAIDs. Performance of the conventional and proposed demosaicing algorithms is compared using the 24 natural Kodak images.

3 Proposed Demosaicing Algorithm

In the proposed demosaicing algorithm, the horizontal and vertical ADAIDs are computed directly from the CFA image to determine the direction of interpolation. The artifacts in the demosaiced image, which usually appear in high-frequency regions, are caused primarily by aliasing in the R/B channels, because each of the decimated R/B channels has half the number of pixels of the decimated G channel. Fortunately, high correlation among color signals (R, G, and B), i.e., inter-channel correlation, exists in high-frequency regions of color images.
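The sampling imbalance between the G and R/B channels can be checked with a short sketch that builds boolean sampling masks for a Bayer CFA. The RGGB arrangement and the helper name `bayer_masks` are assumptions made for illustration; other Bayer phases give the same counts.

```python
import numpy as np

def bayer_masks(height, width):
    """Boolean sampling masks for a Bayer CFA, assuming an RGGB layout."""
    rows, cols = np.indices((height, width))
    r = (rows % 2 == 0) & (cols % 2 == 0)   # R on even rows, even columns
    b = (rows % 2 == 1) & (cols % 2 == 1)   # B on odd rows, odd columns
    g = ~(r | b)                            # G on the remaining quincunx
    return r, g, b

r, g, b = bayer_masks(4, 4)
print(r.sum(), g.sum(), b.sum())  # 4 8 4
```

Each of R and B is sampled at half as many positions as G, which is why aliasing is more severe in those two channels.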

3.1 Proposed Edge Direction Detection

The high-frequency components of the R, G, and B channels are large at edge and texture regions. It is assumed that the positions of the edges are the same in the R, G, and B channels. We use the absolute inter-channel difference, which is directly computed from the CFA pattern, to detect the edge direction and to determine the directional interpolation weights. The color components along the center row in Fig. 1a alternate between G and R. A similar argument can be applied to Fig. 1b and c. At each pixel, the direction of interpolation is computed with neighboring pixel values along the horizontal and vertical directions, and the adaptive directional weights are estimated using the spatial correlation among the neighboring pixels along the detected direction of interpolation.

Fig. 2

Block diagram of the proposed adaptive demosaicing algorithm

Figure 2 shows the block diagram of the proposed algorithm. The first block computes the ADAIDs ($D_H$ and $D_V$) for edge direction detection, in which the absolute inter-channel differences are used. For example, with the CFA pattern of Fig. 1a, the ADAIDs are defined along the horizontal and vertical directions, respectively, as

(4)

(5)

where $R_{p,q}$ and $G_{p,q}$ represent the color intensity values at (p, q) in the R and G channels, respectively, and the four difference terms denote the absolute inter-channel differences defined on the left, right, up, and down sides, respectively. For reliable edge direction detection, the following quantity is defined:

(6)

where the two mean terms represent the local means of the horizontal and vertical masks, respectively. The quantity in (6) is similar to the local contrast, which is related to the shape of the contrast response function of the human eye [23]. If the local mean is high, a small difference is negligible. Before classifying the edge direction at each pixel, we first use the quantity in (6) to separate flat regions, where a dominant edge direction is difficult to define. Along with the difference of absolute differences $D_H$ and $D_V$, the absolute difference of relative intensities to local means is used. The fixed threshold th (=0.1) is used for separation of the flat region, which gives robustness to local average intensity change of an image.

The proposed edge detection method uses two types of 1-D filters according to the pixel values as follows (for horizontal direction):

Case 1.

$$\begin{aligned} \left[ {1\;\, 0\;\, -1} \right] : D_H &= G_{i,j+1} - G_{i,j-1}, \quad \text{if}\ R_{i,j} \ge G_{i,j-1} \ge G_{i,j+1} \\ \left[ {-1\;\, 0\;\, 1} \right] : D_H &= G_{i,j-1} - G_{i,j+1}, \quad \text{if}\ R_{i,j} \ge G_{i,j+1} \ge G_{i,j-1} \end{aligned}$$

Case 2.

$$\begin{aligned} \left[ {1\;\, 0\;\, -1} \right] : D_H &= G_{i,j+1} - G_{i,j-1}, \quad \text{if}\ G_{i,j-1} \ge G_{i,j+1} \ge R_{i,j} \\ \left[ {1\;\, 0\;\, -1} \right] : D_H &= G_{i,j+1} - G_{i,j-1}, \quad \text{if}\ G_{i,j+1} \ge G_{i,j-1} \ge R_{i,j} \end{aligned}$$

Case 3.

$$\begin{aligned} \left[ {-1\;\, 2\;\, -1} \right] : D_H &= 2R_{i,j} - G_{i,j+1} - G_{i,j-1}, \quad \text{if}\ G_{i,j+1} \ge R_{i,j} \ge G_{i,j-1} \\ \left[ {1\;\, -2\;\, 1} \right] : D_H &= G_{i,j-1} + G_{i,j+1} - 2R_{i,j}, \quad \text{if}\ G_{i,j-1} \ge R_{i,j} \ge G_{i,j+1} \end{aligned}$$

where $G_{i,j-1}$, $R_{i,j}$, and $G_{i,j+1}$ are pixel values in the dashed line box of Fig. 1a and each case can be represented by a 1-D filter along the horizontal direction. If the center pixel value $R_{i,j}$ is much different from both pixel values $G_{i,j-1}$ and $G_{i,j+1}$, the operation in Eq. (4) is equivalent to an intra-channel difference (Cases 1 and 2). If the center pixel value $R_{i,j}$ is between the values $G_{i,j-1}$ and $G_{i,j+1}$ in a different color channel, the operation in Eq. (4) corresponds to an inter-channel difference (Case 3) and the 1-D filter is similar to a Laplacian filter. That is, if the center pixel value $R_{i,j}$ is similar to both pixel values $G_{i,j-1}$ and $G_{i,j+1}$, our method detects the edge direction using adjacent pixels. Therefore, our method gives better performance for edge direction detection in the local region, which contains small structure, line and color edges.
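The switch between an intra-channel difference and a Laplacian-like inter-channel difference can be illustrated numerically. The sketch below assumes that the horizontal ADAID has the form suggested by its name, $\left| \, |G_{i,j-1} - R_{i,j}| - |G_{i,j+1} - R_{i,j}| \, \right|$. This form is an assumption, since Eq. (4) is not reproduced here, and the function name `adaid_horizontal` is hypothetical. Only the magnitudes are compared, not the signs listed in Cases 1 to 3.

```python
def adaid_horizontal(g_left, r_center, g_right):
    """Absolute difference of absolute inter-channel differences.

    Assumed form (not confirmed by Eq. (4)): | |G_left - R| - |G_right - R| |.
    """
    return abs(abs(g_left - r_center) - abs(g_right - r_center))

# R above both G values (Case 1): reduces to the intra-channel difference |G_left - G_right|.
print(adaid_horizontal(40, 100, 30))  # 10
# R below both G values (Case 2): again the intra-channel difference.
print(adaid_horizontal(40, 10, 30))   # 10
# R between the G values (Case 3): Laplacian-like |2R - G_left - G_right|.
print(adaid_horizontal(40, 38, 30))   # 6
```

Under this assumed form, a single expression covers all three cases, and which filter is effectively applied depends only on where the center value lies relative to its two neighbors.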

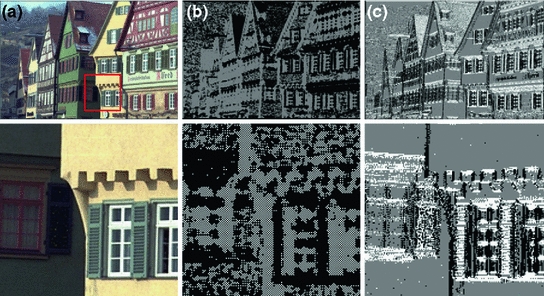

Fig. 3

Edge direction detection (Kodak image no. 8). a original image, b edge map of the conventional algorithm [15] (black vertical, white horizontal), c edge map of the proposed algorithm (black vertical, white horizontal, gray flat)

Figure 3 shows the result of edge direction detection, where black, white, and gray represent the vertical edge, horizontal edge, and flat regions, respectively. The bottom row shows enlarged cropped images of the images in the top row. Figure 3a shows the original image of Kodak image no. 8, which contains a number of buildings with sharp edges in the horizontal and vertical directions. Figure 3b shows the edge map of the conventional algorithm [15], which has two edge directions (vertical: black, horizontal: white). Figure 3c shows the edge map of the proposed algorithm (vertical: black, horizontal: white, flat: gray), which gives sharp edge lines and represents the structure of the wall and windows well. Note that in our method the flat region (gray), where the direction of edges is not dominant, is well separated. Therefore, the proposed demosaicing algorithm performs well in images with a lot of structure, especially in regions with line edges such as window frames. In the flat region, the proposed algorithm simply uses bilinear interpolation and does not perform the refinement step (Sect. 3.3 Refinement), thus reducing the total computation time and the memory size.
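For the flat region, bilinear interpolation of the G value at an R or B center pixel is just the mean of its four G neighbors. A minimal sketch, with the hypothetical helper name `bilinear_green` and assuming (i, j) is an interior non-G position of the CFA:

```python
import numpy as np

def bilinear_green(cfa, i, j):
    """Bilinear G estimate at a non-G pixel: mean of the four adjacent G samples."""
    neighbours = (cfa[i - 1, j], cfa[i + 1, j], cfa[i, j - 1], cfa[i, j + 1])
    # Convert to float before summing to avoid unsigned-integer overflow.
    return sum(float(v) for v in neighbours) / 4

cfa = np.arange(25, dtype=np.uint8).reshape(5, 5)
print(bilinear_green(cfa, 2, 2))  # 12.0
```

This uses no gradient test and no second-order correction term, which is consistent with the lower computational cost noted for flat regions.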

Table 1

Performance comparison of the proposed and conventional algorithms (PSNR, unit: dB, 24 Kodak images)

| Image | Bilinear [20] | | | Gunturk et al.’s algorithm [8] | | | Li’s algorithm [9] | | | Lu and Tan’s algorithm [11] | | | Chung and Chan’s algorithm [15] | | | Menon et al.’s algorithm [16] | | | Proposed algorithm | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | R | G | B | R | G | B | R | G | B | R | G | B | R | G | B | R | G | B | R | G | B |
| 1 | 25.3 | 29.6 | 25.4 | 37.4 | 40.1 | 37.1 | 36.6 | 40.7 | 37.8 | 32.4 | 36.8 | 35.5 | 34.5 | 36.0 | 34.7 | 36.1 | 38.1 | 36.4 | 35.7 | 39.3 | 36.4 |
| 2 | 31.9 | 36.3 | 32.4 | 38.5 | 40.6 | 39.0 | 35.4 | 39.9 | 39.0 | 32.5 | 40.6 | 39.0 | 37.2 | 41.3 | 39.9 | 38.1 | 43.1 | 41.3 | 38.4 | 43.3 | 41.1 |
| 3 | 33.5 | 37.2 | 33.8 | 41.6 | 43.5 | 39.8 | 38.7 | 41.8 | 38.8 | 34.0 | 41.4 | 38.8 | 40.6 | 43.1 | 39.9 | 41.3 | 44.7 | 40.7 | 41.8 | 44.5 | 41.3 |
| 4 | 32.6 | 36.5 | 32.9 | 37.6 | 42.3 | 41.4 | 36.0 | 42.4 | 40.8 | 37.3 | 41.0 | 34.4 | 36.8 | 41.5 | 40.9 | 37.0 | 43.0 | 41.9 | 37.6 | 43.5 | 41.6 |
| 5 | 25.8 | 29.3 | 25.9 | 37.8 | 39.6 | 35.5 | 35.1 | 37.6 | 34.7 | 32.8 | 37.9 | 35.5 | 35.0 | 36.5 | 34.5 | 36.8 | 39.4 | 36.1 | 36.2 | 39.3 | 36.3 |
| 6 | 26.7 | 31.1 | 27.0 | 38.6 | 41.4 | 37.3 | 37.2 | 41.3 | 36.6 | 32.8 | 37.8 | 35.4 | 37.0 | 38.4 | 36.5 | 39.0 | 40.7 | 37.9 | 36.5 | 40.0 | 36.4 |
| 7 | 32.6 | 36.5 | 32.6 | 42.2 | 43.7 | 39.6 | 39.9 | 42.3 | 38.9 | 34.3 | 41.6 | 38.8 | 40.4 | 42.2 | 39.7 | 41.2 | 44.0 | 40.6 | 42.3 | 45.3 | 41.6 |
| 8 | 22.5 | 27.4 | 22.5 | 35.5 | 38.2 | 34.3 | 34.3 | 38.3 | 34.9 | 31.4 | 35.7 | 33.4 | 32.4 | 34.5 | 32.6 | 34.5 | 37.1 | 34.6 | 32.1 | 36.3 | 32.4 |
| 9 | 31.6 | 35.7 | 31.4 | 41.4 | 44.0 | 40.7 | 40.2 | 42.7 | 40.6 | 39.8 | 41.6 | 34.3 | 40.0 | 42.3 | 40.9 | 40.9 | 44.1 | 42.7 | 40.7 | 43.9 | 41.1 |
| 10 | 31.8 | 35.4 | 31.2 | 41.4 | 44.4 | 40.6 | 39.9 | 43.5 | 40.4 | 39.6 | 41.8 | 34.3 | 39.5 | 42.0 | 40.3 | 40.7 | 44.4 | 41.8 | 40.9 | 44.6 | 41.4 |
| 11 | 28.2 | 32.2 | 28.3 | 38.8 | 41.1 | 38.4 | 37.3 | 41.3 | 38.4 | 33.3 | 38.7 | 37.3 | 36.5 | 38.4 | 37.1 | 38.0 | 40.6 | 38.7 | 37.4 | 40.9 | 38.2 |
| 12 | 32.7 | 36.8 | 32.4 | 42.5 | 44.8 | 41.4 | 40.8 | 44.3 | 41.1 | 34.4 | 41.7 | 39.3 | 40.7 | 43.2 | 41.3 | 41.7 | 45.2 | 42.4 | 41.3 | 45.2 | 41.6 |
| 13 | 23.1 | 26.5 | 23.0 | 34.5 | 36.9 | 33.0 | 35.2 | 38.2 | 33.6 | 30.8 | 33.2 | 31.6 | 31.3 | 32.2 | 30.8 | 33.1 | 34.2 | 32.2 | 32.2 | 35.1 | 32.1 |
| 14 | 28.2 | 32.0 | 28.6 | 35.8 | 37.8 | 34.0 | 32.5 | 35.9 | 33.4 | 32.2 | 38.2 | 35.8 | 34.7 | 37.4 | 34.4 | 35.3 | 39.1 | 35.6 | 35.4 | 39.2 | 35.4 |
| 15 | 31.1 | 35.4 | 32.3 | 38.1 | 40.3 | 38.8 | 35.2 | 40.3 | 38.7 | 32.4 | 39.7 | 37.8 | 36.7 | 40.6 | 39.4 | 37.0 | 42.0 | 40.0 | 37.2 | 42.6 | 39.7 |
| 16 | 30.3 | 34.7 | 30.4 | 41.9 | 44.8 | 40.6 |