Abstract

Informatics is the science and practice of computer information systems. Imaging informatics encompasses the use of information technology to deliver efficient, accurate, and reliable medical imaging services within a healthcare network. Radiologists have key roles as leaders in imaging informatics, serving as liaisons between the clinical needs of a department and the capabilities of an information technology team. This chapter reviews the major components of imaging informatics. It introduces the electronic infrastructure, spanning physician order entry via an electronic medical record, radiology information systems, picture archiving and communication systems, and the technical standards by which these systems intercommunicate. Also discussed is the radiologist reading room environment, including image display requirements, workstation ergonomics, and related health concerns. Radiologists’ workflow beyond image review and interpretation at the workstation involves report creation using standardized language and structured reporting, as well as postprocessing applications including advanced three-dimensional imaging and computer-aided detection. Finally, postreporting computer applications are introduced, including data mining, peer review, and critical results communication.

Keywords

DICOM (Digital Imaging and Communications in Medicine), imaging informatics, picture archiving and communication system (PACS), radiology technical standard, RIS (radiology information system), structured reporting, workstation ergonomics

Informatics is the science and practice of computer information systems. Imaging informatics encompasses the use of information technology to deliver efficient, accurate, and reliable medical imaging services within a healthcare network. Its imprint is felt in every step of patient imaging, from order entry to results communication. Radiologists have key roles as leaders in imaging informatics and serve as liaisons between the clinical needs of the healthcare enterprise and the capabilities of information technology teams. Ultimately, radiologists can serve as innovators in a constantly evolving field, optimizing the flow of medical information within a radiology department and throughout a healthcare institution.

This chapter is devoted to introducing the major components of imaging informatics. The workflow cycle begins with physician order entry, usually using the hospital information system (HIS) or electronic medical record (EMR). Data necessary to support imaging workflow are communicated to radiology departments via the radiology information system (RIS). The picture archiving and communication system (PACS) then organizes medical images for review by radiologists or other healthcare providers, as well as for long-term storage (Fig. 17.1). The technical standards involving the organization and communication of these systems will be discussed.

The radiologist reading room environment will also be reviewed. The basic viewing requirements of modern reading room workstations will be introduced, as well as the ergonomics of desktop computer stations and related health concerns. Radiologists’ workflow beyond image review and interpretation at the workstation involves multiple postprocessing applications, including advanced three-dimensional (3D) imaging and computer-aided detection. The software and hardware components of imaging reporting are varied and often vendor specific; however, physician-driven initiatives for report dictation rely heavily on standardized language and structured reporting. Finally, postreporting computer applications will be introduced, including data mining, peer review, and critical results communication.

The breadth of imaging informatics covers practically all aspects of radiology. In one sense, every part of the radiologist’s reporting workflow outside of image interpretation involves informatics. The depth of these topics reaches beyond the goals of this chapter. As an introduction, these outlined topics will create a foundation of nomenclature and technologic components that can be built upon throughout a career in radiology.

Healthcare and Radiologic Information Systems

Hospital Information System and Electronic Medical Record

A medical imaging study begins with a patient-physician encounter that generates an order or prescription. Within a healthcare enterprise, patient demographic and medical data are collected and distributed by a HIS, which may or may not be completely computerized and paperless. An EMR is a fully computerized, paperless version of such a system. In an EMR environment, clinicians can order imaging studies electronically. Such computerized physician order entry offers potential advantages, including supplying relevant patient history and providing point-of-need decision support, such as imaging appropriateness criteria.

Radiology Information Systems

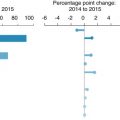

Regardless of how a study is ordered, radiology study orders must be communicated to a RIS. A RIS is a computer application that manages patient demographic data and scheduling and tracks the associated images and report results. Once an imaging study order is entered into a RIS, the study is assigned a unique identifier such as an accession number. Together, the accession number and medical record number unambiguously associate the image dataset with the correct patient demographics; they also allow coordination of the patient’s scheduling and imaging encounter at the imaging modality. As a result, a patient study can be accessed at any site in a radiology department network, both for image acquisition and for communication of results and images back to the HIS/EMR. In addition, many of the business and operational analytics (scorecards, dashboards, and reports) needed to monitor operational quality and efficiency are generated by the RIS or depend on RIS data.
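The matching step described above can be sketched as a small lookup. This is a minimal illustration, not how any particular RIS is built: the `Order` record, the `OrderRegistry` class, and all sample values are hypothetical. Orders are indexed by their unique accession number, and an incoming image dataset is accepted only if its medical record number (MRN) also matches the order.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    """Hypothetical, minimal RIS order record."""
    accession_number: str
    mrn: str            # medical record number
    patient_name: str
    procedure: str


class OrderRegistry:
    """Toy RIS index holding exactly one order per accession number."""

    def __init__(self) -> None:
        self._orders: dict[str, Order] = {}

    def register(self, order: Order) -> None:
        # Accession numbers must be unique, otherwise association is ambiguous.
        if order.accession_number in self._orders:
            raise ValueError(f"duplicate accession number {order.accession_number}")
        self._orders[order.accession_number] = order

    def reconcile(self, accession_number: str, mrn: str) -> Order:
        """Match an incoming image dataset to its order, cross-checking the MRN."""
        order = self._orders.get(accession_number)
        if order is None:
            raise LookupError(f"no order for accession number {accession_number}")
        if order.mrn != mrn:
            raise ValueError(f"MRN mismatch for accession number {accession_number}")
        return order


registry = OrderRegistry()
registry.register(Order("A1001", "123456", "DOE^JANE", "CT ABDOMEN"))
print(registry.reconcile("A1001", "123456").procedure)  # CT ABDOMEN
```

Requiring both identifiers to agree, rather than trusting the accession number alone, is one simple way to catch images sent under the wrong patient.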

Picture Archiving and Communication Systems

The RIS organizes patient data regarding a study but does not include the images themselves. The PACS is the information technology architecture that orchestrates the workflow of image acquisition, display, and storage across a network. Modern PACSs have largely eliminated the need to create and transport hard copy film. A PACS also supports quality improvement initiatives through additional software programs. PACSs have four main components:

- 1.

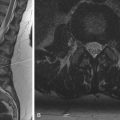

The imaging modalities (radiography, computed tomography [CT], magnetic resonance imaging [MRI], ultrasound, etc.)

- 2.

A secure transmission network

- 3.

Computer workstations for viewing and manipulating images

- 4.

Digital archives for storing images and reports for later retrieval

Ideally, one PACS serves all of the imaging modalities across an institution’s radiology services. Once the imaging modalities acquire the images scheduled by the RIS, the image data are sent to the PACS database server. PACS servers typically communicate through a local area network (LAN) with various workstations, where images can be reviewed by radiologists, technologists, or caregivers throughout a health network. Networks, as well as digital archives, must maintain the security of patient data according to the regulations defined by the Health Insurance Portability and Accountability Act (HIPAA). A LAN maintains relatively high privacy because all computers on the network are physically wired to servers within a protective enterprise firewall. Large healthcare centers with multiple hospitals may have several intercommunicating LANs that together form a wide area network. Radiologists or caregivers may also want to view images from outside a LAN, such as by logging in through the Internet from home. In this case, a virtual private network (VPN) can be used. A VPN creates an encrypted connection to the LAN that provides a comparable level of security, allowing access to the PACS or EMR from outside the wired network.

PACS database servers send patient imaging studies to computer workstations for use by healthcare workers and to archival storage. At larger institutions, storage servers can be maintained off-site at dedicated data centers. Archival storage must be secure and scalable and must have redundancy or backup. The performance of storage servers depends on the media technology used; the decreasing cost of spinning magnetic disk drives has made them the preferred and commonplace choice. Current and near-future enterprise requirements have driven the need for a scalable enterprise image/multimedia archive and image consumption architecture. Examples include the vendor-neutral archive, archive-neutral PACS vendors, and PACS-neutral archives.

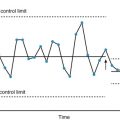

PACS software packages include the radiologist’s tools for interpreting images effectively and rapidly. Preset window and level settings and hanging protocols can be customized to fit individual preferences. Measurement tools, zoom, pan, and window adjustment are a few of the many assessment features needed. PACSs must also display patient information and prior studies, along with their reports. Modern offerings go beyond simple image study presentation and navigation and attempt to support more complex radiology workflow orchestration (e.g., real-time decision support, advanced visualization, advanced communication/collaboration, analytics, peer review).
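Window and level presets work by mapping a chosen range of stored pixel values onto the displayable gray scale. The sketch below is a simplified linear window, assuming CT values in Hounsfield units and an 8-bit output. The DICOM standard (Window Center/Window Width) defines a slightly different exact formula. The `PRESETS` values are commonly used examples only and vary between institutions.

```python
def apply_window(value: float, level: float, width: float) -> int:
    """Map a stored value (e.g., CT Hounsfield units) to an 8-bit gray level.

    Simplified linear window: values at or below level - width/2 are black (0),
    values at or above level + width/2 are white (255), values in between are
    scaled linearly.
    """
    if width <= 0:
        raise ValueError("window width must be positive")
    lower = level - width / 2
    upper = level + width / 2
    if value <= lower:
        return 0
    if value >= upper:
        return 255
    return round((value - lower) / width * 255)


# Example presets as (level, width) in Hounsfield units; local settings differ.
PRESETS = {"soft_tissue": (40, 400), "lung": (-600, 1500), "bone": (400, 1800)}

level, width = PRESETS["soft_tissue"]
# Air (-1000 HU), the window center (40 HU), and dense bone (1000 HU):
print([apply_window(hu, level, width) for hu in (-1000, 40, 1000)])  # [0, 128, 255]
```

A narrow width spreads a small range of values across all gray levels (high contrast), which is why soft tissue presets use a much narrower width than lung or bone presets.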

Radiology Information Systems: Picture Archiving and Communication Systems Integration

There is a clear need for RIS and PACS to communicate efficiently. Some vendors offer hybrid products comprising both RIS and PACS, but not all do. When a health network works with different vendors, the radiologists’ workflow is driven by either the RIS or the PACS, whichever serves as the primary source of truth. A RIS-driven workflow seems intuitive because the RIS holds the patient and study information and is the primary tool for schedulers and technologists. A PACS-driven workflow, however, uses the imaging studies themselves to drive a worklist within the software workspace that radiologists mainly use, acquiring additional data from the RIS via the accession number or another identifier. Regardless of the method used, RIS-PACS integration that is optimized for the local environment and offers efficient workflows for both radiologists and technologists is vital for a reliable and accurate departmental system.
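A PACS-driven worklist, as described above, can be sketched as follows. The `Study` fields, the shape of `ris_lookup`, and the oldest-first ordering are hypothetical simplifications. The worklist is built from the studies the PACS holds, and each unread study is enriched with RIS data keyed by its accession number.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Study:
    """Image study as seen by the PACS (hypothetical fields)."""
    accession_number: str
    modality: str
    acquired_at: str   # ISO 8601 timestamp in one consistent format, so it sorts as text
    reported: bool


def build_worklist(studies: list[Study], ris_lookup: dict[str, dict[str, str]]) -> list[dict[str, str]]:
    """Return unread studies, oldest first, enriched with RIS data by accession number."""
    worklist = []
    for study in sorted(studies, key=lambda s: s.acquired_at):
        if study.reported:
            continue  # already interpreted, so not on the reading worklist
        ris = ris_lookup.get(study.accession_number, {})
        worklist.append({
            "accession": study.accession_number,
            "modality": study.modality,
            "patient": ris.get("patient_name", "UNKNOWN"),
            "indication": ris.get("indication", ""),
        })
    return worklist


studies = [
    Study("A2", "MR", "2024-01-01T10:30", False),
    Study("A1", "CT", "2024-01-01T09:15", False),
    Study("A3", "CR", "2024-01-01T08:00", True),
]
ris = {
    "A1": {"patient_name": "DOE^JANE", "indication": "abdominal pain"},
    "A2": {"patient_name": "ROE^JOHN", "indication": "headache"},
}
for item in build_worklist(studies, ris):
    print(item["accession"], item["modality"], item["patient"])
```

A RIS-driven design would invert this loop, starting from scheduled orders and looking up whether images have arrived.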

Technical Standards

For the various components of a healthcare system to communicate effectively, there are technical standards that enable the interoperability of different systems, both within radiology and throughout the enterprise. Standards are maintained and updated by corresponding associations and are continually evolving to improve efficient communication.

Health Level 7

Healthcare networks have many information system components, including the EMR, RIS, order entry systems, and laboratory information systems. Health Level 7 (HL7) is the computer standard governing communication among these information systems within and between healthcare networks. In radiology, HL7 messages carry information between the RIS and the EMR, encompassing study ordering, registration, and results communication. HL7 characterizes each interaction as an event and can disseminate the associated message electronically to other systems.
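To show what an HL7 event message looks like, the sketch below parses a short HL7 version 2 order message. In HL7 v2, segments are separated by carriage returns, fields by `|`, and components by `^`. The message type and trigger event appear in field MSH-9 (here `ORM^O01`, an order message). The sample message and all of its values are invented. The parser is deliberately minimal and ignores escape sequences and field repetitions. Production interfaces use dedicated interface engines or libraries, and field usage varies by site.

```python
# Invented sample HL7 v2 order message (segments separated by carriage returns).
SAMPLE_ORDER = "\r".join([
    "MSH|^~\\&|EMR|HOSP|RIS|RAD|202401011200||ORM^O01|MSG0001|P|2.3",
    "PID|1||123456^^^HOSP^MR||DOE^JANE",
    "OBR|1|||CXR2V^CHEST 2 VIEWS",
])


def parse_segments(message: str) -> dict[str, list[list[str]]]:
    """Split a message into segments, then each segment into its '|' fields."""
    segments: dict[str, list[list[str]]] = {}
    for line in message.split("\r"):
        if line:
            fields = line.split("|")
            segments.setdefault(fields[0], []).append(fields)
    return segments


def get_field(fields: list[str], n: int) -> str:
    """Return field n using HL7's 1-based numbering (e.g., PID-3)."""
    if fields[0] == "MSH":
        if n == 1:
            return "|"      # MSH-1 is the field separator character itself
        index = n - 1       # so the remaining MSH fields shift left by one
    else:
        index = n
    return fields[index] if index < len(fields) else ""


segments = parse_segments(SAMPLE_ORDER)
message_code, trigger_event = get_field(segments["MSH"][0], 9).split("^")
mrn = get_field(segments["PID"][0], 3).split("^")[0]
family, given = get_field(segments["PID"][0], 5).split("^")
print(message_code, trigger_event, mrn, given, family)  # ORM O01 123456 JANE DOE
```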

Digital Imaging and Communications in Medicine

Whereas HL7 is the standard spanning the breadth of healthcare, Digital Imaging and Communications in Medicine (DICOM) is the technical standard for the display, storage, and transmission of medical images. DICOM began as a collaborative effort by the American College of Radiology (ACR) and the National Electrical Manufacturers Association (NEMA) in the early 1980s and was renamed DICOM in 1993. It has since become the universal standard data format for images and communications among all medical imaging devices and software applications. The standard also regulates the information associated with DICOM images, including media display, security profiles, data storage, and data encoding and exchange.
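As a concrete view of the DICOM file format, the sketch below builds and reads a minimal DICOM Part 10 byte stream. It starts with a 128-byte preamble and the 4-byte prefix `DICM`, followed by data elements. Each element is identified by a (group, element) tag and a two-letter value representation (VR). The sketch assumes Explicit VR Little Endian encoding and defined lengths throughout, which is a simplification. The sample is not a fully conformant file (it omits the required file meta group length, among other things). Real-world parsing should rely on a maintained library such as pydicom.

```python
import struct

# In Explicit VR Little Endian, these VRs are followed by 2 reserved bytes and a
# 4-byte length; all other VRs use a 2-byte length directly after the VR.
LONG_VRS = {b"OB", b"OD", b"OF", b"OL", b"OV", b"OW", b"SQ", b"SV",
            b"UC", b"UN", b"UR", b"UT", b"UV"}


def encode_element(group: int, element: int, vr: bytes, value: bytes) -> bytes:
    """Encode one data element; odd-length values get a null pad byte (the rule for UI)."""
    if len(value) % 2:
        value += b"\x00"
    if vr in LONG_VRS:
        header = struct.pack("<HH2s2xI", group, element, vr, len(value))
    else:
        header = struct.pack("<HH2sH", group, element, vr, len(value))
    return header + value


def read_elements(data: bytes):
    """Yield ((group, element), VR, value) for elements after the preamble and prefix."""
    if len(data) < 132 or data[128:132] != b"DICM":
        raise ValueError("missing DICM prefix: not a DICOM Part 10 file")
    pos = 132
    while pos + 8 <= len(data):
        group, element = struct.unpack_from("<HH", data, pos)
        vr = data[pos + 4:pos + 6]
        if vr in LONG_VRS:
            (length,) = struct.unpack_from("<I", data, pos + 8)
            pos += 12
        else:
            (length,) = struct.unpack_from("<H", data, pos + 6)
            pos += 8
        yield (group, element), vr.decode("ascii"), data[pos:pos + length]
        pos += length


# (0002,0010) Transfer Syntax UID = 1.2.840.10008.1.2.1 (Explicit VR Little Endian).
sample = bytes(128) + b"DICM" + encode_element(0x0002, 0x0010, b"UI", b"1.2.840.10008.1.2.1")
for (group, element), vr, value in read_elements(sample):
    text = value.rstrip(b"\x00").decode("ascii")
    print(f"({group:04X},{element:04X}) {vr} {text}")  # (0002,0010) UI 1.2.840.10008.1.2.1
```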

DICOM has been the critical enabler of interoperability for hardware and software spanning all aspects of radiology workflow (image acquisition modalities, PACS servers, workstations, networks). DICOM also allows image databases to be shared as PACSs develop and expand, and it allows PACSs to maintain communication with other information systems.

No centralized body certifies or enforces implementation of the DICOM standard; conformance is left to individual vendors. Even when vendors follow the standard, it does not specify how their products must use it. Vendors almost universally provide DICOM conformance statements that explicitly state how a specific product supports DICOM. Vendors may also include additional proprietary technical parameters, which may affect interoperability.

Integrating the Healthcare Enterprise

Despite the HL7 and DICOM standards, interconnectivity and efficiency still vary. An initiative called Integrating the Healthcare Enterprise (IHE) began in 1998 with the goal of improving coordination among different standards. IHE does not create its own standards; instead, it promotes the coordinated, best-practice use of established standards. IHE identifies common system integration challenges requiring HL7-DICOM or other communication and creates an IHE profile describing a technical workflow that consistently delivers the expected results. IHE initiatives grow in parallel with updates to HL7 and DICOM, and compliance with IHE profiles can further improve workflow accuracy and efficiency.

Image Data Compression

Image data can be electronically compressed into smaller files by mathematical algorithms, with a goal of decreasing storage needs and image transfer time. Image compression can be lossless, which is reversible, or lossy, which is irreversible.

Many PACSs store image data losslessly, which removes any doubt about potential loss of diagnostic quality. Lossless compression works by removing redundant data; a commonly known example is the Graphics Interchange Format (GIF). The degree of compression is limited, however, typically ranging from 1.5:1 to 3:1.
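The idea of removing redundancy without losing information can be shown with run-length encoding (RLE). This is a simpler technique than the LZW method used by GIF and is swapped in purely for illustration. DICOM does define an RLE Lossless transfer syntax, but its byte-level scheme differs from this sketch. Each run of identical pixel values is stored as a (value, count) pair. Decoding reproduces the input exactly. The compression ratio here is counted in stored numbers rather than bits, which is a simplification. The sample rows are hypothetical.

```python
def rle_encode(pixels: list[int]) -> list[tuple[int, int]]:
    """Run-length encode a row of pixel values as (value, run_length) pairs."""
    runs: list[tuple[int, int]] = []
    for value in pixels:
        if runs and runs[-1][0] == value:
            runs[-1] = (value, runs[-1][1] + 1)   # extend the current run
        else:
            runs.append((value, 1))               # start a new run
    return runs


def rle_decode(runs: list[tuple[int, int]]) -> list[int]:
    """Expand (value, run_length) pairs back into the original row."""
    return [value for value, count in runs for _ in range(count)]


def ratio(pixels: list[int], runs: list[tuple[int, int]]) -> float:
    """Compression ratio counted in stored numbers (each run stores two)."""
    return len(pixels) / (2 * len(runs))


# Hypothetical rows: one mostly uniform black background, one noisy.
background_row = [0] * 40 + [87, 90, 90, 92] + [0] * 36
noisy_row = [12, 15, 11, 14, 13, 16, 12, 15]

for row in (background_row, noisy_row):
    runs = rle_encode(row)
    assert rle_decode(runs) == row   # lossless: exact reconstruction
    print(len(row), "pixels ->", len(runs), "runs, ratio", ratio(row, runs))
```

The noisy row actually grows (ratio 0.5). This is the general reason lossless ratios on real, noisy medical images stay modest.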

Lossy image compression methods remove potentially relevant pixel data from image files; in exchange, compression ratios can exceed 10:1. The Joint Photographic Experts Group (JPEG) format uses lossy image compression and is widely applied in digital photography with minimal perceptible impact on image quality. JPEG is also supported by the DICOM standard. Studies have shown that some lossy image compression techniques can be used effectively in medical imaging without affecting diagnostic relevance.
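The storage effect of these ratios can be estimated with simple arithmetic. The sketch below assumes a hypothetical CT study of 500 slices, each 512 × 512 pixels stored at 2 bytes per pixel, and counts pixel data only (headers and metadata are ignored). The ratios are taken from the ranges quoted above.

```python
def study_size_mib(slices: int, rows: int, cols: int,
                   bytes_per_pixel: int = 2, compression_ratio: float = 1.0) -> float:
    """Approximate pixel-data size of a study in MiB (headers and metadata ignored)."""
    raw_bytes = slices * rows * cols * bytes_per_pixel
    return raw_bytes / compression_ratio / 2**20


# Hypothetical CT study: 500 slices of 512 x 512 pixels at 2 bytes per pixel.
for label, ratio in [("uncompressed", 1.0), ("lossless 2.5:1", 2.5), ("lossy 10:1", 10.0)]:
    print(f"{label}: {study_size_mib(500, 512, 512, compression_ratio=ratio):.0f} MiB")
# uncompressed: 250 MiB
# lossless 2.5:1: 100 MiB
# lossy 10:1: 25 MiB
```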

Lossy image compression that does not affect a particular diagnostic task is referred to as diagnostically acceptable irreversible compression. The ACR does not have a general advisory statement on the type or amount of irreversible compression needed to achieve diagnostically acceptable irreversible compression; however, only methods defined and supported by the DICOM standard, such as JPEG, JPEG 2000, or Moving Picture Experts Group (MPEG), should be used. In addition, the US Food and Drug Administration (FDA) requires that images with lossy compression be labeled with the compression ratio and method used. The FDA prohibits lossy compression of digital mammograms used for interpretation, although lossy compression can be used for prior comparison studies.
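The labeling rules above can be expressed as a simple check over an image's attributes. This is an illustrative sketch, not a compliance tool. The attributes are held in a plain dictionary keyed by DICOM attribute keywords: `LossyImageCompression` is "01" when lossy compression has been applied, `LossyImageCompressionRatio` and `LossyImageCompressionMethod` record the ratio and method, and `Modality` "MG" denotes mammography. The `for_interpretation` flag is a hypothetical parameter standing in for the intended use of the image.

```python
def check_lossy_labeling(attrs: dict[str, str], for_interpretation: bool = True) -> list[str]:
    """Flag labeling and use problems for a lossy-compressed image.

    `attrs` maps DICOM attribute keywords to values; `for_interpretation` is a
    hypothetical flag saying whether the image will be used for interpretation.
    """
    problems: list[str] = []
    if attrs.get("LossyImageCompression") != "01":   # "01" = lossy compression applied
        return problems
    if not attrs.get("LossyImageCompressionRatio"):
        problems.append("missing compression ratio")
    if not attrs.get("LossyImageCompressionMethod"):
        problems.append("missing compression method")
    if attrs.get("Modality") == "MG" and for_interpretation:
        problems.append("lossy mammogram may not be used for interpretation")
    return problems


ct = {"Modality": "CT", "LossyImageCompression": "01",
      "LossyImageCompressionRatio": "10", "LossyImageCompressionMethod": "ISO_10918_1"}
mg = {"Modality": "MG", "LossyImageCompression": "01",
      "LossyImageCompressionMethod": "ISO_15444_1"}

print(check_lossy_labeling(ct))   # []
print(check_lossy_labeling(mg))   # ['missing compression ratio', 'lossy mammogram may not be used for interpretation']
# A correctly labeled mammogram used only as a prior comparison passes:
print(check_lossy_labeling(dict(mg, LossyImageCompressionRatio="15"), for_interpretation=False))  # []
```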

Image Display

To support numerous computer workstations throughout a department or institution, many PACSs use thin-client software or web-based platforms for viewing images, which minimizes the hardware memory requirements of individual computers. Workstations used by interpreting radiologists must also meet minimum requirements for image display characteristics (Table 17.1). Liquid crystal display (LCD) monitors are ubiquitous; their high-resolution flat panels can absorb ambient light and minimize glare and reflection.

| Characteristic | ACR Requirement/Recommendation |
|---|---|
| Color/grayscale depth | 8 bit |
| Luminance | Lmax ≥350 cd/m²; Lmin ≥1.0 cd/m²; LR >250 |
| Luminance (mammography) | Lmax ≥420 cd/m²; Lmin ≥1.2 cd/m² |
| Pixel pitch | ≤0.21 mm; 0.20 mm recommended |
| Aspect ratio | 3:4 or 4:5 ideal |

Color and Grayscale Contrast

Color or grayscale contrast is characterized by bit depth, which describes the number of colors or grayscale levels available for each pixel. An 8-bit system means that each pixel value (or each color channel) is stored in an 8-bit byte, giving 2⁸ = 256 possible levels. For example, an 8-bit color palette can divide its eight bits among the red, green, and blue (RGB) channels (three bits for red, three for green, and two for blue), which in combination form 2⁸, or 256, colors. In grayscale, the red, green, and blue values of each pixel are equal; with 8 bits per channel, this yields 256 shades of gray. Higher-bit-depth displays such as 30-bit systems (10 bits per RGB channel) are available and require support from the operating system and graphics card. No evidence has shown that radiologic interpretations are affected by the use of systems with greater than 8-bit depth.
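The bit-depth arithmetic can be checked directly. This sketch computes the number of levels an n-bit value can encode and packs a 3-3-2 RGB palette entry into a single byte. Red occupies the top 3 bits, green the middle 3, and blue the bottom 2, which is one conventional bit layout assumed here for illustration.

```python
def gray_levels(bits: int) -> int:
    """Number of distinct levels an n-bit value can encode."""
    return 2 ** bits


def pack_rgb332(red: int, green: int, blue: int) -> int:
    """Pack 3-bit red, 3-bit green, and 2-bit blue intensities into one 8-bit byte."""
    if not (0 <= red < 8 and 0 <= green < 8 and 0 <= blue < 4):
        raise ValueError("channel value out of range for a 3-3-2 palette")
    return (red << 5) | (green << 2) | blue


print(gray_levels(8))          # 256 levels per 8-bit channel
print(gray_levels(10))         # 1024 levels per channel on a 30-bit display
print(gray_levels(30))         # 1073741824 colors on a 30-bit display
print(pack_rgb332(7, 7, 3))    # 255, the brightest entry in the 3-3-2 palette
palette = {pack_rgb332(r, g, b) for r in range(8) for g in range(8) for b in range(4)}
print(len(palette))            # 256 distinct colors
```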

Color displays form each pixel from color subelements that are not precisely superimposed, so their pixels may appear less sharp than those of grayscale monitors. The use of color displays has grown, and they are at times favored, such as for Doppler ultrasound and fused positron emission tomography/computed tomography (PET/CT) images in nuclear medicine. The brightness and sharpness of color displays have recently improved to approach those of grayscale displays; however, color displays that also provide superior grayscale performance remain more expensive, and grayscale displays are still common and practical.

Luminance

The brightness and contrast of grayscale images depend on the monitor luminance for a given grayscale value. Luminance is a measure of light intensity, and its standardized unit is the candela per square meter (cd/m²), or nit. The luminance properties of LCD monitors are determined by the light source and the panel. LCD panels are backlit by cold cathode fluorescent lamps or by newer light-emitting diodes, which have lower power needs and potentially longer lives. Regardless of the source, backlight luminance decays over time, so monitors require periodic quality control. The maximum luminance, Lmax, is the luminance of the maximum gray value and is dictated by the light source. The minimum luminance, Lmin, is the luminance of the darkest gray level and is dictated by the panel’s ability to block the light source. The ACR standard calls for an Lmax (white level) of at least 350 cd/m² for diagnostic viewing and 420 cd/m² for mammography, and an Lmin of at least 1.0 cd/m² (1.2 cd/m² for mammography).

The luminance ratio (LR) is the ratio of Lmax to Lmin, and a high LR is required for good image contrast. The LR should always be greater than 250, and an LR of 350 is considered effective. If the LR or Lmax is too high, however, the human eye adapts to the brightness, and subtle darker findings can be missed. Conversely, if Lmin is too low, dark grayscale levels cannot be differentiated. The DICOM Grayscale Standard Display Function defines how grayscale values map to luminance using a nonlinear model of human contrast perception. Because humans differentiate lighter grayscale levels more easily than darker ones, adjacent darker levels are assigned greater relative differences in luminance than adjacent lighter levels.

Pixel Pitch and Resolution

Display monitor resolution is typically characterized by the number of pixels (picture elements). Medical displays are often labeled as 2-, 3-, or 5-megapixel (MP) monitors based on the number of pixels in the display, described by a pixel matrix such as 1600 × 1200 (width × height, in pixels). However, it is more useful to characterize a monitor by its display size (diagonal length) and pixel pitch. Pixel pitch is the length of one side of a square pixel, equivalent to the center-to-center distance between adjacent pixels. The perception of an image also depends on the viewing distance from the monitor.

The ACR standard recommends a pixel pitch of 0.20 mm for diagnostic interpretation and no larger than 0.21 mm. If the pixel pitch is too large, the image may appear pixelated, with a fine grainy pattern of staircase artifacts. There is no advantage to a smaller pixel pitch, and it results in smaller images when displayed at full resolution. Because medical images are acquired with detector element sizes that differ from a monitor’s pixel pitch, zoom and pan presentation tools should be used; they may reveal subtle detail not detectable at the initial image size.
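Pixel pitch can be estimated from a display’s diagonal size and pixel matrix, assuming square pixels that fill the whole diagonal. The display sizes and matrices below are hypothetical examples of common 3-MP and 5-MP portrait formats, not specifications of any product. The megapixel count is simply width × height.

```python
import math

MM_PER_INCH = 25.4


def pixel_pitch_mm(diagonal_inches: float, width_px: int, height_px: int) -> float:
    """Approximate pixel pitch from the display diagonal and pixel matrix (square pixels)."""
    diagonal_px = math.hypot(width_px, height_px)   # pixels along the diagonal
    return diagonal_inches * MM_PER_INCH / diagonal_px


def megapixels(width_px: int, height_px: int) -> float:
    """Total pixel count in millions."""
    return width_px * height_px / 1_000_000


print(f"{megapixels(1600, 1200):.2f} MP")               # 1.92 MP, marketed as "2 MP"
print(f"{pixel_pitch_mm(21.3, 1536, 2048):.3f} mm")     # 0.211 mm (hypothetical 3-MP panel)
print(f"{pixel_pitch_mm(21.3, 2048, 2560):.3f} mm")     # 0.165 mm (hypothetical 5-MP panel)
```

Comparing the computed pitch with the 0.20 to 0.21 mm range above is a quick way to see whether a given size and matrix combination fits the recommendation.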

For example, a CT image may have a resolution of 0.25 MP. When viewed at a diagnostic workstation with a 2-MP monitor, the image can be displayed at full resolution, with one image pixel per display pixel. Zooming in to magnify such an image quickly makes artifacts visible because individual pixels become distinguishable. A chest radiograph, on the other hand, typically has an image resolution of 5 MP. When viewed on a 2-MP display, the image is minified to fit the display, which can routinely be done without losing diagnostic information. If there is an area of abnormality, however, digitally zooming in expands the region to its full pixel resolution, maintaining a smooth visual appearance and maximizing image detail. The routine minification of radiographs for monitor display is termed down-sampling.
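The example above can be checked numerically. This sketch uses hypothetical pixel matrices: a 1200 × 1600 portrait 2-MP display, a 512 × 512 CT image (about 0.26 MP), and a 2048 × 2500 radiograph (about 5.1 MP). It computes the largest uniform scale at which the whole image fits on the display, and what fraction of the image is visible at once when shown at full resolution.

```python
def fit_scale(image_w: int, image_h: int, display_w: int, display_h: int) -> float:
    """Largest uniform scale at which the whole image fits on the display."""
    return min(display_w / image_w, display_h / image_h)


def visible_fraction_at_full_resolution(image_w: int, image_h: int,
                                        display_w: int, display_h: int) -> float:
    """Fraction of the image area visible at once at one image pixel per display pixel."""
    return min(1.0, display_w / image_w) * min(1.0, display_h / image_h)


def describe(image_w: int, image_h: int, display_w: int, display_h: int) -> str:
    scale = fit_scale(image_w, image_h, display_w, display_h)
    if scale >= 1:
        return "fits at full resolution (one image pixel per display pixel)"
    return f"down-sampled to {scale:.0%} of full resolution to fit the display"


print("CT:", describe(512, 512, 1200, 1600))
print("Radiograph:", describe(2048, 2500, 1200, 1600))   # down-sampled to 59% ...
share = visible_fraction_at_full_resolution(2048, 2500, 1200, 1600)
print(f"Radiograph area visible at full resolution: {share:.1%}")   # 37.5%
```

The last line shows why zoom and pan matter: at full resolution only about a third of this radiograph fits on the screen at once.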

Display Size

Radiologists interpreting images focus on the center of a display but monitor its edges with peripheral vision. Optimal visualization is achieved when the display size (diagonal) is approximately 80% of the viewing distance. By this rule, a 21-inch (53-cm) monitor corresponds to a viewing distance of about 26 inches (67 cm), at the near end of the typical workstation viewing distance of 25 to 35 inches (64–89 cm).
