Fig. 1

Modern game design has many aspects to take care of

Visual design in game development usually needs to be considered from two aspects. On the one hand, most modern games have narrative elements that define a stylish time-space context to host the imagination of graphic designers, and that make games less abstract and richer in entertainment value. Hence, visual designers need to highlight these aspects in their designs with appropriate visual impact. On the other hand, game design today is user-centered, and visual design has to be adapted to capture the attention of users in an entertaining way. Combining the two, we have an open question for visual game design: how can a visual design highlight what the game designer wants to emphasize and, at the same time, capture the user’s attention? Naturally, this leads to a well-known topic: visual saliency estimation.

1.2 Visual Saliency

Visual saliency is a multidisciplinary scientific term spanning biology, neurology and computer vision. It refers to the mechanism by which salient visual stimuli attract human attention. Complex biological systems need to rapidly detect potential prey, predators, or mates in a cluttered visual world. However, it is computationally infeasible to process all interesting targets in one’s visual field simultaneously, which makes this a formidable task even for the most evolved biological brains [5], let alone for any existing computer or robot. Primates and many other animals have adopted a useful strategy: limiting complex visual processing to a small area or a few objects at any one time. These small regions are referred to as salient regions in this biological visual process [6].

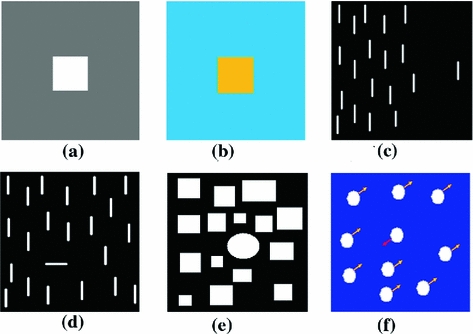

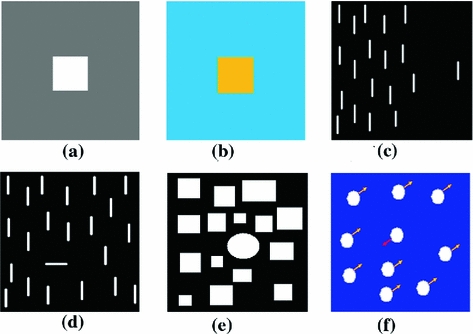

Visual salience is the consequence of an interaction of a visual stimulus with other visual stimuli, arising from fairly low-level and stereotypical computations in the early stages of visual processing. The factors contributing to salience are generally quite comparable from one observer to the next, leading to similar experiences across a range of observers as well as of behavioral conditions. Figure 2 gives several examples of how visual salience is produced from various contrasts among visual stimuli (or pixels) in an image or a pattern. Figure 2a demonstrates intensity-based salience, where the center rectangle differs in intensity from its surroundings. Figure 2b is the case of color-based salience. Figure 2c shows that the location of an item may produce salience as well. Figures 2d–e demonstrate other types of salience caused by orientation, shape and motion. In summary, salience usually refers to how different a pixel or region is from its surroundings.

Fig. 2

Visual saliency may arise from intensity, color, location, orientation, shape, and motion

Visual salience is a bottom-up, stimulus-driven process that marks a location as being so different from its surroundings as to be worthy of attention [7, 8]. For example, a blue object in a yellow field will be salient and will attract attention in a bottom-up manner. A simple bottom-up framework to emulate how salience may be computed in biological brains has been developed over the past three decades [6–9]. In this framework, incoming visual information is first processed by early visual neurons that are sensitive to the various elementary visual features of the stimulus. This preprocessing is typically carried out in parallel over the entire visual field and at multiple spatiotemporal scales, producing a number of initial cortical feature maps that encode the amount of a given visual feature at any location in the visual field and highlight locations significantly different from their neighbors. Finally, all feature maps are fused through a weighted neural network into a single saliency map that represents a pure salience signal [8]. Figure 3 illustrates how this framework produces a saliency map from an input image.

Fig. 3

An early salience model that emulates the human perception system

The combination of feature maps can be carried out by a winner-take-all scheme [8], where feature maps containing one or a few locations of much stronger response than anywhere else contribute strongly to the final perceptual salience. This basic principle has been formulated in slightly different terms, for example by defining salient locations as those that contain spatial outliers [10], which may be more informative in Shannon’s sense [11].
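The bottom-up pipeline described above (center-surround feature maps at several scales, fused so that maps with a few strong peaks dominate) can be sketched in plain Python. This is only an illustrative sketch, not the model of [8] or [9]: the function names `center_surround`, `peak_weight` and `fuse` are hypothetical, the surround is approximated by a simple box average, and the `(max - mean)^2` weighting is an assumed stand-in for the combination scheme.

```python
def center_surround(img, radius):
    """|pixel - mean of its (2r+1)x(2r+1) window|, with the window clipped at the border.

    The window includes the pixel itself; a box average is an assumed
    simplification of the surround response.
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            ys = range(max(0, y - radius), min(h, y + radius + 1))
            xs = range(max(0, x - radius), min(w, x + radius + 1))
            window = [img[j][i] for j in ys for i in xs]
            out[y][x] = abs(img[y][x] - sum(window) / len(window))
    return out


def peak_weight(fmap):
    """Assumed weighting: (max - mean)^2, large when a map has a few strong peaks."""
    flat = [v for row in fmap for v in row]
    return (max(flat) - sum(flat) / len(flat)) ** 2


def fuse(feature_maps):
    """Weighted average of the feature maps, using peak_weight as the weights."""
    h, w = len(feature_maps[0]), len(feature_maps[0][0])
    weights = [peak_weight(m) for m in feature_maps]
    total = sum(weights) or 1.0  # avoid division by zero for flat maps
    return [
        [sum(wt * m[y][x] for wt, m in zip(weights, feature_maps)) / total
         for x in range(w)]
        for y in range(h)
    ]


# 5x5 intensity image: uniform background with one bright pixel in the center.
image = [[0.2] * 5 for _ in range(5)]
image[2][2] = 1.0

maps = [center_surround(image, r) for r in (1, 2)]  # two spatial scales
saliency = fuse(maps)
```

In this toy image the fused map peaks at the bright center pixel, which is the intensity-contrast case of Fig. 2a.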

2 Color-Based Visual Saliency Modeling

As discussed in the previous section, visual saliency can be related to motion, orientation, shape, intensity and color. When visual saliency is considered in video game design, it mostly concerns color images and graphics, where color-induced saliency becomes the dominant factor. In this section, we introduce the definition of color saliency and its various models.

2.1 Previous Work

From the viewpoint of a graphics designer, it is desirable to have a quantifiable model for measuring visual saliency, rather than to fully understand its biological process. Indeed, an accurate model of visual saliency has long been sought by computer scientists. Nearly three decades ago, Koch and Ullman [8] proposed a theory to describe the underlying neural mechanisms of vision and bottom-up saliency. They posited that the human eye selects several features that pertain to a stimulus in the visual field and combines these features into a single topographical ‘saliency map’. In the retina, photoreceptors, horizontal cells, and bipolar cells are the processing elements for edge extraction. Visual input is passed through a series of these cells, and edge information is then delivered to the visual cortex. These systems combine with further processing in the lateral geniculate nucleus, which plays a role in detecting shape and pattern information such as symmetry and acts as a preprocessor for the visual cortex to find a salient region [12]. Therefore, saliency is a biological response to various stimuli.

Visual saliency has been a multidisciplinary topic for cognitive psychology [6], neurobiology [12], and computer vision [10]. As described in Sect. 5, most early work [13–23] concentrates on building saliency models from low-level image features based on local contrast. These methods investigate the rarity of image regions with respect to their local neighbors. Koch and Ullman [8] presented the highly influential, biologically inspired early representation model, and Itti et al. [9] defined image saliency using center-surround differences across multi-scale image features. Harel et al. [14] combined the feature maps of Itti et al. with other importance maps and highlighted conspicuous parts. Ma and Zhang [15] used an alternative local contrast analysis for saliency estimation. Liu et al. [16] found multi-scale contrast in a Difference-of-Gaussian (DoG) image pyramid.

More recent efforts use global visual contrast, mostly in hierarchical ways. Zhai and Shah [17] defined pixel-level saliency based on a pixel’s contrast to all other pixels. Achanta et al. [18] proposed a frequency-tuned method that directly defines pixel saliency using DoG features, and used mean-shift to average the pixel saliency over whole regions. More recently, Goferman et al. [20] considered block-based global contrast while taking the global image context into account. Instead of using fixed-size blocks, Cheng et al. [21] proposed to use the regions obtained from image segmentation methods and to compute the saliency map from region-based contrast.

2.2 Color Saliency

Color saliency [21] refers to the salient stimuli created by color contrast. Principally, it can be modeled by comparing a pixel or a region to its surrounding pixels in color space. In mathematics, the pixel-level color saliency can be formulated by the contrast between a pixel and all other pixels in the global range of an image,

$$S(I_k) = \sum_{i=1}^{N} D(I_k, I_i) \tag{1}$$

where, typically,

$$D(I_k, I_i) = \lVert I_k - I_i \rVert \tag{2}$$

stands for the color distance between the i-th and k-th pixels, N is the number of pixels in an image, and $I_k$ and $I_i$ are the feature vectors of the k-th and i-th pixels.
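The pixel-level formulation in Eq. (1) can be sketched directly in a few lines of Python. This is a minimal illustration under stated assumptions: the color distance is taken to be the Euclidean distance between feature vectors, the image is given as a flat list of RGB tuples, and the names `pixel_saliency` and `normalize` are hypothetical helpers introduced only for this example.

```python
import math


def color_distance(a, b):
    """Euclidean distance between two color feature vectors (an assumed metric)."""
    return math.dist(a, b)


def pixel_saliency(pixels):
    """For every pixel, sum its color distance to all pixels in the image (Eq. 1)."""
    return [sum(color_distance(p, q) for q in pixels) for p in pixels]


def normalize(values):
    """Rescale values linearly to [0, 1] for display as a grey-scale map."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


# A 3x3 toy image, flattened row by row: a blue pixel in a yellow field.
yellow, blue = (255, 255, 0), (0, 0, 255)
image = [yellow] * 4 + [blue] + [yellow] * 4

saliency = normalize(pixel_saliency(image))
```

After normalization the single blue pixel receives the highest saliency and the yellow background the lowest, matching the intuition that a blue object in a yellow field stands out.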

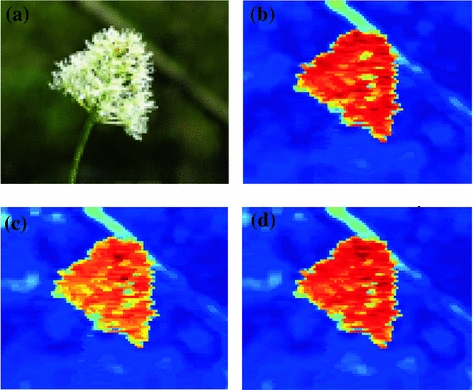

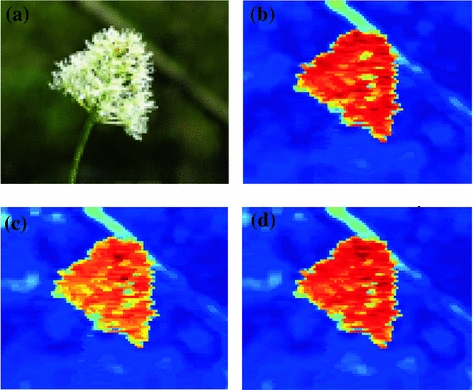

Fig. 4

Color saliency estimation. Here, the ‘jet’ color map is applied to visualize grey-scale saliency values on pixels (likewise for the remaining figures in this chapter)

Several typical color spaces can be used to measure color saliency. RGB is the conventional format; alternatives include the HSV, YUV and Lab color systems. Sometimes they are combined to form multi-channel image data. Figure 4 gives an example of how these different color systems produce different color saliency. Figure 4a is the sample image, Fig. 4b shows the estimated saliency from Eq. (1) in RGB space, Fig. 4c gives the saliency map in HSV space, and Fig. 4d shows the saliency in RGB+HSV space. In this example, we can see that different color systems do bring out some differences in their saliency maps.
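To make the role of the color space concrete, the following sketch evaluates the same global-contrast measure on RGB, HSV, and concatenated RGB+HSV feature vectors. It is an illustration only: the helper names `to_features` and `global_contrast` are hypothetical, the Euclidean distance is assumed as the color distance, all channels are scaled to [0, 1], and hue is treated as an ordinary coordinate although it is actually circular.

```python
import colorsys
import math


def to_features(rgb, space):
    """Map an 8-bit RGB triple to a feature vector in the requested color space."""
    r, g, b = (c / 255.0 for c in rgb)
    if space == "rgb":
        return (r, g, b)
    hsv = colorsys.rgb_to_hsv(r, g, b)  # h, s, v each in [0, 1]
    if space == "hsv":
        return hsv
    if space == "rgb+hsv":
        return (r, g, b) + hsv  # six-channel feature vector
    raise ValueError(f"unknown color space: {space}")


def global_contrast(pixels, space):
    """Sum of Euclidean distances from each pixel to all pixels, in the given space."""
    feats = [to_features(p, space) for p in pixels]
    return [sum(math.dist(f, g) for g in feats) for f in feats]


# Three reddish pixels and one blue pixel (index 2).
pixels = [(200, 30, 30), (190, 40, 40), (30, 30, 200), (200, 30, 30)]
maps = {space: global_contrast(pixels, space) for space in ("rgb", "hsv", "rgb+hsv")}
```

The blue pixel is the most salient in all three spaces here, but the relative values differ between spaces, which is the kind of difference Fig. 4 shows on a real image.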

2.3 Histogram Based Color Saliency

A major drawback of the above computational model of color saliency is its computing time. Because each pixel needs to be compared with all other pixels, the computational complexity grows to $O(N^2)$. The computation may take a long time even for a small image.

To speed up the computation, a histogram-based trick can be applied. Rather than directly computing the color saliency of every pixel, we can compute the saliency of all possible colors first, and then use the color of each pixel to look up its saliency in the pre-computed table. Here, all possible colors in true color space may have
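The histogram idea of computing saliency once per color and then looking it up per pixel can be sketched as follows. This is a minimal sketch under assumptions not stated in the text: only the colors that actually occur in the image are tabulated, each color's contribution is weighted by its pixel count so that the result equals the brute-force sum of Eq. (1), and no color quantization is applied. The function names are hypothetical.

```python
import math
from collections import Counter


def histogram_saliency(pixels):
    """Global color contrast computed once per distinct color, then looked up per pixel."""
    hist = Counter(pixels)  # color -> number of pixels with that color
    table = {
        color: sum(count * math.dist(color, other) for other, count in hist.items())
        for color in hist
    }
    return [table[p] for p in pixels]


def brute_force_saliency(pixels):
    """Reference implementation: compare every pixel with every pixel."""
    return [sum(math.dist(p, q) for q in pixels) for p in pixels]


# Nine pixels but only three distinct colors.
pixels = [(255, 255, 0)] * 6 + [(0, 0, 255)] * 2 + [(255, 0, 0)]

fast = histogram_saliency(pixels)
slow = brute_force_saliency(pixels)
```

With M distinct colors, the table costs on the order of M² distance evaluations plus one pass over the N pixels, so the saving grows with the ratio of pixels to distinct colors.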
