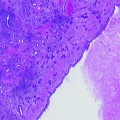

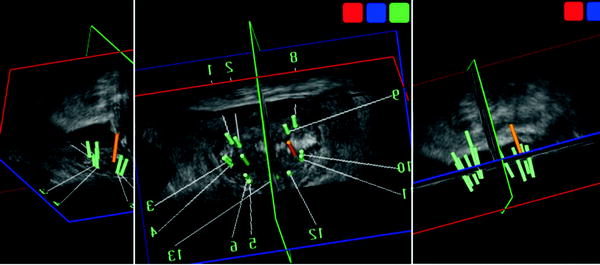

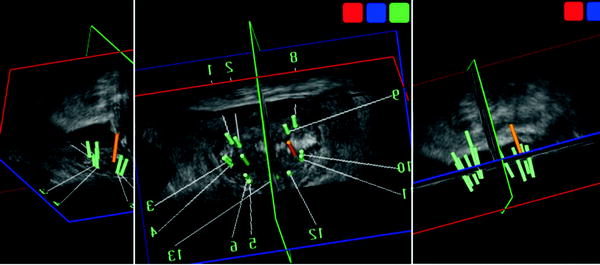

Fig. 9.1

Robotic tracking device (Artemis™ system) to document biopsy trajectory in reconstructed 3D model. Robotic tracking device (Eigen) of a 2D end-fire TRUS probe allows the navigation of 3D mapping biopsy to register the spatial location of biopsy trajectory on the initially reconstructed 3D model of the prostate (upper three figures). This system also facilitates MR/TRUS image fusion-targeted biopsy with the delineation of the MR-suspicious area with the integration of 3D biopsy tracking and registration technology (lower three figures)

In this system, reconstruction of the 3D prostate model requires a 200-degree rotation of the 2D end-fire TRUS probe across the surface of the prostate by the tracking robotic arm. After segmentation of the prostate, the software reconstructs the 3D prostate model from the volume data of the acquired TRUS images. The actual firing of the biopsy needle is monitored and captured in a real-time 2D image, and each biopsy trajectory in that image is then registered and overlaid on the initially reconstructed 3D prostate model. Importantly, accurate registration of each biopsy trajectory on the 3D model depends critically on patient immobility and on the prostate retaining its position and shape after the initial reconstruction, because any displacement between the initially reconstructed 3D model and the actual location of the prostate at each biopsy introduces error. Once the biopsy trajectories have been recorded, the digital 3D model can be used later, after a certain time interval, to revisit previously recorded sites for repeat biopsy. The system overlays the previous biopsy sites on the current 3D prostate model, allowing resampling from a previously positive biopsy site or sampling from sites different from previously negative ones. The challenge for precise revisiting is to register the currently reconstructed 3D model precisely with the previously reconstructed one, since the prostate is a mobile soft tissue organ that can deform or grow in size. The prostate segmentation may also disagree between the previous and current reconstructions of the 3D prostate model.

A recent report on the clinical use of the Artemis™ system [32] presented an initial experience of 171 men undergoing Artemis-guided biopsy. Of these, 11 patients who underwent 3D TRUS-Artemis biopsy were later re-biopsied to evaluate the ability of the device to guide biopsy needles back precisely to the areas from which prior biopsy cores had been taken. By geometric analysis, the return to prior biopsy sites was within 1.2 mm on the digital model. However, this error was determined only by mathematical comparison of the registered digital images of the previous and revisited biopsy trajectories in the computed model; the study did not analyze the real anatomical sampling sites in the prostate tissue. The actual revisiting accuracy of this system in real prostate tissue therefore remains to be determined. Multiple factors can introduce errors in 3D localization between the previous and current targeting, including segmentation, registration, patient shift, prostate motion, prostate deformation, robotic arm tracking, and needle bending. Even so, the Artemis™ system offers a promising opportunity for image-based 3D mapping of prostate cancer, using a digitalized 3D prostate model with real-time tracked and documented biopsy trajectories, potentially improving the current and future practice of prostate biopsy and focal therapy. The emerging generation of the Artemis robotic arm is light and easy to handle, allowing more physician-friendly manipulation of the TRUS probe (Fig. 9.2).

Fig. 9.2

A new generation of the mechanical tracking device for the 2D TRUS probe. The new generation of the Artemis™ robotic arm is light and easy to handle, allowing more physician-friendly manipulation of the TRUS probe. The system consists of a four-degrees-of-freedom robotic arm that controls and tracks the orientation of a 2D end-fire TRUS probe

To preserve the accuracy of a planned intervention, any image guidance system requires the capture of real-time imaging to constantly update the 3D planning. The critical importance of real-time image capture and corresponding real-time 3D planning is supported by the successful practices in some of the most investigated image-guided therapies for prostate cancer, including TRUS-guided focal cryosurgery and high-intensity focused ultrasound (HIFU) [33–35]. The initial 3D planning models for these technologies have been developed to be adjustable at any time during the intervention, based on the comparison of initially referenced images and real-time imaging. This capability is considered a key feature to achieving the precision and efficacy necessary for image-guided targeted focal therapy.

Registration of the reconstructed 3D planning prostate model to the rigid stereotactic navigation frame is the key computing process by which the coordinates of the 3D planning model are transformed to the coordinates of the stereotactic device. This step can be achieved very precisely by rigid registration, that is, by bringing the two coordinate spaces into digital coincidence. Importantly, however, the prostate is well known to be a mobile organ, affected by the physiological movements of pelvic organs such as the rectum and bladder [36, 37]. Indeed, cine MRI can demonstrate real-time prostate movements related to the rectum, resulting in significant displacements of the prostate gland [38]. Delivery of the biopsy needle or therapeutic ablative probe needs to take these organ movements into account. In other words, once prostate motion or deformation has occurred, the previously reconstructed 3D surgical planning model is no longer reliable for real-time guidance in real space.
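The coordinate transformation described above can be illustrated with a minimal sketch. It assumes a rigid transform made of a single rotation about the z axis plus a translation; the function names (`rotation_z`, `rigid_transform`) and all numbers are hypothetical and are not taken from any navigation system. It also shows why a prostate shift after registration turns directly into targeting error.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def rotation_z(angle_deg: float) -> List[List[float]]:
    """Return the 3x3 matrix for a rotation about the z axis."""
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def rigid_transform(p: Vec3, rotation: Sequence[Sequence[float]],
                    translation: Vec3) -> Vec3:
    """Map a point from planning-model coordinates to device coordinates."""
    x, y, z = (
        sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    return (x, y, z)


# Hypothetical values, all in millimetres.
target_in_model = (10.0, 0.0, 5.0)
R = rotation_z(90.0)
t = (100.0, 50.0, 0.0)
target_in_device = rigid_transform(target_in_model, R, t)

# If the prostate shifts after registration, the planned device
# coordinates no longer coincide with the real target position.
prostate_shift = (0.0, 3.0, 4.0)
actual_target = tuple(a + b for a, b in zip(target_in_device, prostate_shift))
targeting_error_mm = math.dist(target_in_device, actual_target)
```

The rigid mapping itself is exact; the error comes entirely from the organ moving after the mapping was computed, which is the limitation the paragraph above describes.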

Precise delivery of a needle or probe requires accurate and reliable localization of the intended target. Unwanted prostatic motion during treatment may result in a geographical miss, reducing therapeutic effectiveness and lowering cure rates. To avoid such errors, therapeutic contour margins may have to be increased so that the planned treatment field encompasses any possible displacement of the intended target lesion within the prostate.
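The cost of a wider margin can be made concrete with a small worked example. The sketch below assumes, purely for illustration, a spherical lesion and a uniform margin; the helper names and the 5 mm and 3 mm figures are hypothetical, not values from the chapter.

```python
import math


def sphere_volume_mm3(radius_mm: float) -> float:
    """Volume of a sphere with the given radius."""
    return 4.0 / 3.0 * math.pi * radius_mm ** 3


def treated_volume_ratio(lesion_radius_mm: float, margin_mm: float) -> float:
    """Ratio of treated volume (lesion plus margin) to lesion volume."""
    if lesion_radius_mm <= 0 or margin_mm < 0:
        raise ValueError("radius must be positive and margin non-negative")
    treated = sphere_volume_mm3(lesion_radius_mm + margin_mm)
    return treated / sphere_volume_mm3(lesion_radius_mm)


# Hypothetical case: 5 mm lesion radius, 3 mm margin for possible motion.
ratio = treated_volume_ratio(5.0, 3.0)  # equals (8 / 5) ** 3
```

Under these assumptions, a 3 mm margin around a 5 mm radius lesion roughly quadruples the treated volume, which is why reducing motion-related uncertainty matters for focal therapy.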

A recent innovative solution in registration of the 3D volume data for the soft tissue organ was the introduction of the “Elastic image-fusion” technique.

The Urostation™ system (Koelis, France) is an innovative navigation system for prostate biopsy and intervention. It introduces “real-time 3D TRUS” imaging to track every single prostate biopsy within the actual 3D space of the prostate, together with “elastic-image fusion” technology [9, 39]. The major advantage of this system is its use of a real-time 3D TRUS probe to acquire real-time 3D volume data of the prostate. At each biopsy with the Urostation™, the 3D prostate volume data are captured in real time to document the hyperechoic needle trajectory in the real 3D space of the prostate at the time of biopsy firing. In other systems, by contrast, 3D prostate volume data are built only once, at the initial planning step, by reconstruction from multiple 2D images; the tracked biopsy location must then be virtually overlaid on the initially reconstructed model, which may cause registration error due to prostate motion or patient shift. Importantly, since every prostate biopsy image taken by the Urostation™ is captured with the digitalized coordinates of the proximal end (x1, y1, z1) and the distal end (x2, y2, z2) of the trajectory within the real 3D space of the prostate, the coordinates are available for future revisiting biopsies in active surveillance, or for retargeting positive lesions in focal therapy (Fig. 9.3). Because the real-time 3D TRUS probe can be manipulated freehand, this system does not change the style of current urological practice. A limitation, however, is that freehand manual use of the US probe could create error if the probe is not held in the same spatial position during image acquisition and registration. Future introduction of robotic handling of the US probe could therefore further improve targeting accuracy by stabilizing the orientation of the probe during 3D image acquisition [40].
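As a minimal sketch of how a trajectory stored by its two end points could be represented and compared at a later revisit, consider the following. The `BiopsyTrajectory` class, the `revisit_error_mm` helper, and the choice of the larger end-point distance as the error measure are illustrative assumptions, not the data model or metric of any commercial system.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Point3 = Tuple[float, float, float]


@dataclass(frozen=True)
class BiopsyTrajectory:
    """A needle track stored by its proximal and distal end points (mm)."""
    proximal: Point3  # (x1, y1, z1)
    distal: Point3    # (x2, y2, z2)

    def length_mm(self) -> float:
        return math.dist(self.proximal, self.distal)

    def midpoint(self) -> Point3:
        x, y, z = ((a + b) / 2 for a, b in zip(self.proximal, self.distal))
        return (x, y, z)


def revisit_error_mm(previous: BiopsyTrajectory,
                     current: BiopsyTrajectory) -> float:
    """Larger of the two end-point distances between a previous and a
    revisited trajectory (one possible geometric error measure)."""
    return max(
        math.dist(previous.proximal, current.proximal),
        math.dist(previous.distal, current.distal),
    )


# Hypothetical coordinates in a shared, already registered 3D space.
previous = BiopsyTrajectory((0.0, 0.0, 0.0), (0.0, 0.0, 18.0))
revisit = BiopsyTrajectory((1.0, 0.0, 0.0), (0.0, 1.0, 18.0))
error = revisit_error_mm(previous, revisit)
```

A comparison of this kind is purely geometric: it is only meaningful if both trajectories are expressed in the same registered coordinate space, and it says nothing about the tissue actually sampled.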

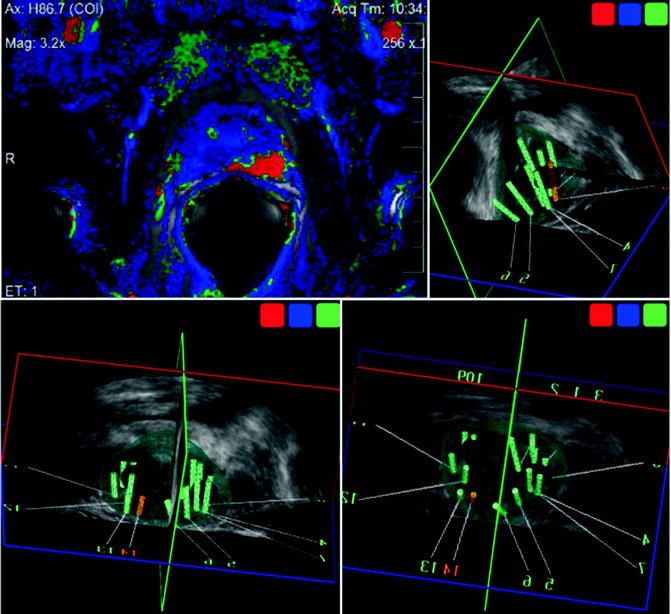

Fig. 9.3

Real-time 3D TRUS tracking of biopsy trajectories in real 3D space. The Urostation™ system (Koelis) is a real-time 3D TRUS-based navigation system for prostate biopsy and intervention that tracks every single prostate biopsy within the actual 3D space of the prostate. Each biopsy trajectory is shown in the 3D TRUS image of the prostate. Twelve systematic peripheral zone biopsies and two anterior transition zone biopsies were documented. A further advantage of this system is “elastic-image fusion” technology, which fuses one set of 3D volume data with another

A further unique ability of this system is the introduction of an elastic (nonrigid) image-fusion technique. In MR/TRUS image fusion, for example, registration and fusion of the two imaging modalities are achieved only roughly by “rigid fusion,” in which the two corresponding images are aligned by simple rotation and/or magnification. However, since most soft tissue organs such as the prostate do not keep a rigid 3D shape between the preoperative and intraoperative settings, rigid fusion has significant limitations when the 3D shape of the prostate is deformed between the preoperative MR and the real-time TRUS during the procedure. In contrast, “nonrigid (elastic) fusion” merges the two corresponding images by accommodating local or partial stretching and deflection of the images in real time. Elastic fusion allows real-time “elastic” deformation of the preoperative MR image, mirroring changes in the real-time 3D TRUS imaging. This feature is available in the Urostation™, and it has great potential to enhance the precision of lesion-targeted biopsy and intervention for image-visible prostate cancer (Fig. 9.4) [41].
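The difference between a single global transform and a local, elastic correction can be sketched in a few lines. The example below is a toy 2D illustration: it assumes matched landmark pairs are already available after rigid alignment, and it warps any other point by a Gaussian-weighted average of the landmark displacements. This is a generic interpolation scheme chosen for brevity; it is not the algorithm used by the Urostation™ or any other product, and `elastic_warp` and `sigma_mm` are hypothetical names.

```python
import math
from typing import Sequence, Tuple

Point2 = Tuple[float, float]


def elastic_warp(p: Point2,
                 landmarks_mr: Sequence[Point2],
                 landmarks_trus: Sequence[Point2],
                 sigma_mm: float = 10.0) -> Point2:
    """Move point p (MR space) by a Gaussian-weighted average of the
    displacements observed at matched MR/TRUS landmark pairs."""
    weights = [
        math.exp(-((p[0] - m[0]) ** 2 + (p[1] - m[1]) ** 2)
                 / (2.0 * sigma_mm ** 2))
        for m in landmarks_mr
    ]
    total = sum(weights)
    if total == 0.0:  # point is too far from every landmark to be affected
        return p
    dx = sum(w * (u[0] - m[0])
             for w, m, u in zip(weights, landmarks_mr, landmarks_trus)) / total
    dy = sum(w * (u[1] - m[1])
             for w, m, u in zip(weights, landmarks_mr, landmarks_trus)) / total
    return (p[0] + dx, p[1] + dy)


# Hypothetical landmarks (mm): the left edge of the gland stays in place,
# while the right edge is displaced by 4 mm in the TRUS image.
mr = [(-20.0, 0.0), (20.0, 0.0)]
trus = [(-20.0, 0.0), (24.0, 0.0)]

center = elastic_warp((0.0, 0.0), mr, trus)      # moves about 2 mm
near_right = elastic_warp((20.0, 0.0), mr, trus)  # moves almost 4 mm
near_left = elastic_warp((-20.0, 0.0), mr, trus)  # barely moves
```

No single rotation or magnification reproduces this pattern, in which one side of the gland stays fixed while the other is displaced; a spatially varying displacement of this kind is what distinguishes elastic from rigid fusion.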

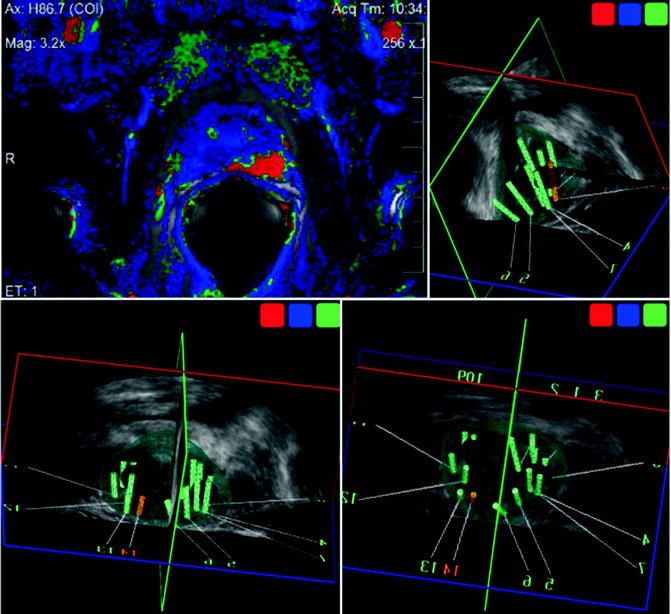

Fig. 9.4

MR/TRUS image fusion-guided target biopsy with elastic fusion technique. Elastic fusion allows real-time “elastic” deformation of the preoperative MR image mirroring changes in real-time 3D TRUS imaging to enhance the precision of lesion-targeted biopsy and the intervention of image-visible prostate cancer. Color-coded suspicious cancer area by MRI (shown as red spot in computer-assisted diagnosis; left-upper) was registered as a target (red sphere) and successfully targeted, according to the elastic image fusion of 3D volume data of MRI and 3D TRUS. The MR/US fusion-targeted biopsy revealed Gleason 4+3 cancer, with 11 mm cancer in length: 70 % of core length

Challenges in Prostate Intervention with Stereotactic Robotic System

Stereotactic biopsy or intervention is a technique that guides the insertion of a biopsy needle or ablative probe without direct visualization of the target, relying instead on the spatial orientation of the target derived from imaging. To control precision and reproducibility, a stereotactic frame or guidance template may be used, referenced to external landmarks of the human body. Since the prostate is a mobile organ, real-time feedback from intraoperative imaging of both the biopsy needle and the prostate is essential. The freehand image-blind biopsy technique in current general urological practice cannot precisely retarget a previously sampled spot, which would be required for patients under active surveillance or potential candidates for focal therapy. A robot can potentially provide reliable delivery to the target, because it offers faster, automated, precise, and reproducible navigation, provided that it is supported by real-time feedback from intraoperative imaging. The ideal image-guided robot would combine multiple steps in a single platform: a sophisticated stereotactic guidance system integrating a computer-controlled robot, fully interfaced with a 3D image planning workflow that can be updated by real-time imaging. A future image guidance system would interactively follow the intraoperative imaging and perform nonrigid image registration between the preoperative 3D planning model and the intraoperative image.

A number of issues are under research in the development of a reliable stereotactic robot for practical use. MRI, CT, and TRUS can each serve as the integrated image guidance modality, with their own advantages and disadvantages for prostate intervention. Engineering challenges include how to fit the mechanical device to the specific environment of the chosen imaging modality.

The earliest intervention robot for the prostate was developed in 2000–2001 using 1.5 T MR guidance, having five-degrees-of-freedom rigid arms for needle holding, made from a titanium alloy and plastic, and driven by nonmagnetic ultrasonic motors [42, 43]. The system navigated the robotic needle delivery of prostate biopsy and radioactive seeds. The preoperative T2-weighted image was obtained with the patient in the supine position using an endorectal coil, while the intraoperative T1-weighted image was obtained in the lithotomy position using a rectal obturator coil. Therefore, the difference of the prostate shape between the pre- and intraoperative images required nonrigid registration methods [44]. The initial prototype robot using CT guidance consisted of a seven-degrees-of-freedom passive mounting arm, a remote center-of-motion robot, and a motorized needle-insertion device in order to achieve stereotactic accurate and consistent placement of transperineal needles into the prostate with intraoperative CT-image guidance [45]. Historically, the incorporation of a CT scan into the stereotactic technique was parallel to the advances in technology in brain surgery since the 1980s [46]. Although a brain tumor is visible in CT, a prostate lesion is rarely so. The obvious reasons for using TRUS or MRI for prostate image guidance include the following: first, TRUS or MRI can orientate prostate 3D volume data to be used for the 3D planning model for prostate needle delivery; second, with TRUS or MRI, the prostate cancer lesion can be visualized; and third, TRUS or MRI can be used for real-time guidance for tracking the trajectory and also for real-time monitoring the therapeutic intervention such as cryoablation, or high-intensity ultrasound. Both imaging modalities allow 3D imaging, oblique sectioning, excellent soft tissue contrast, and functional information.

The major challenge for an MR-compatible robot was the development of metal-free biopsy equipment, especially the motor unit for automated delivery of the needle. Because of the MR environment, an entirely new system is required, including a needle delivery manipulator or motor built from special materials with very low magnetic susceptibility. The concerns included whether the presence and motion of a robot would distort the homogeneity of the magnetic field and degrade the image signal-to-noise ratio.

Recently, various prototype MR-compatible prostate interventional robots have been developed [47–53]. Initially, an open 0.5 T MR intervention unit made it possible to perform prostate biopsy and brachytherapy under MR guidance [47]. Stoianovici et al. introduced a pneumatic, fully actuated robot located within the MR scanner alongside the patient and operating under remote control based on the image feedback [50]. Tokuda et al. [51] reported the original software for needle guidance to provide interactive target planning, 3D image visualization with current needle position, and treatment monitoring through real-time MR images of needle trajectories in the prostate. Recently, Yakar et al. [53] reported clinical feasibility in ten patients with suspicious areas detected by multiparametric MR, followed by transrectal biopsy with a pneumatically actuated 3-T MR-compatible robot. Zangos et al. [52] also reported clinical experience (n = 20) using a transgluteal approach in patients with suspicious prostate lesions with an MR-compatible pneumatically driven robotic system.

A TRUS-guided brachytherapy robot has demonstrated significant impact on the development of a prostate interventional system [54–58]. Robot-assisted needle insertion can significantly improve accuracy and consistency of prostate intervention [59, 60].

During needle insertion into and retraction from tissue with elastic properties, tissue deformation occurs over 0–30 mm, which limits the accuracy of needle placement [61, 62]. Although locking needles may reduce prostate motion [63], it is still necessary to predict the changes in prostate position and shape at the time the needle or probe is delivered to the desired position. It was reported that locking needles can reduce rotation only in the coronal plane; rotation in the sagittal plane can be neither predicted nor reduced by their use [61].

Interestingly, increasing needle insertion velocity decreased the tissue deformation during needle insertion due to lower friction forces [56]. In order to be able to insert the needle with a high velocity, one of the solutions is the introduction of automated delivery by a robotic system. Lagerburg et al. reported that prostate motion was significantly decreased from 5.6 mm (range 0.3–21.6) to 0.9 mm (range 0–2.0) when a robotically controlled tapping technique was used compared to when the needle was manually pushed [56].

The accuracy of needle targeting depends on various factors, such as needle deflection, shift or motion of the prostate during needle insertion, prostate deformation due to edema or bleeding, the choice of needle type, the insertion velocity, and whether a rotating needle is used and at what rotation speed. High velocity in the robotic delivery of the needle may also minimize needle deflection, improving accuracy. Completing the entire procedure in a short time may minimize the effects of prostate deformation due to edema or bleeding.
