Introduction

Neurodegenerative diseases usually develop quietly: by the time symptoms show, the brain has already gone through significant changes. That’s why spotting early signs is so important, and AI for brain CT can help. It doesn’t make a diagnosis on its own, but it can flag subtle details that the human eye might miss, giving doctors extra information to work with.

Modern algorithms can already pick up subtle shifts in brain structure or small changes in tissue density long before a patient notices anything. And the best part? AI tools for this purpose are becoming more accessible.

Why CT Remains a Key Tool in Diagnostics

To understand the role of artificial intelligence, it helps to be clear about what a brain CT scan itself provides.

Computed tomography produces detailed images of bone and soft tissue, although its soft-tissue contrast is lower than that of MRI. It is traditionally used to detect hemorrhages, tumors, and traumatic brain injuries. However, recent studies suggest that, with proper image processing, CT can also support much earlier detection of neurodegenerative changes.

For example, mild atrophy in specific cortical regions or subtle asymmetries between brain structures can sometimes be detected several years before clinical symptoms appear. These faint early markers are exactly the kind of signals that AI can identify.

How AI for Brain CT Works: A Simple Explanation

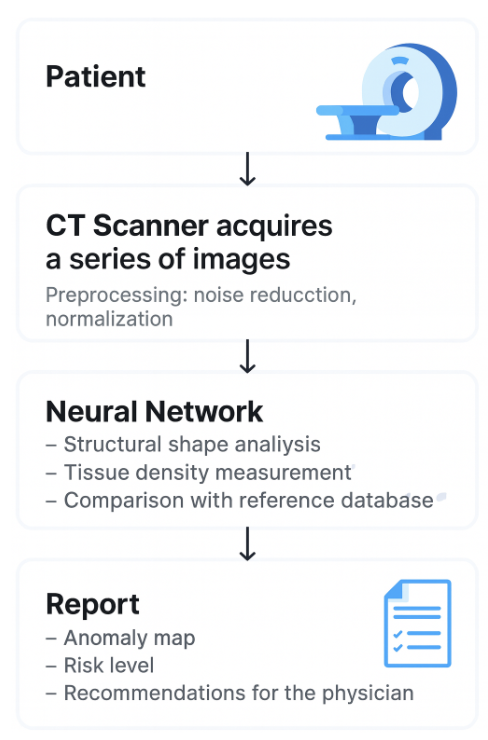

To keep things straightforward, let’s break the process down into three steps and see how each contributes to early diagnosis.

1. Data Collection

The algorithm receives brain images, often hundreds or even thousands of slices. These can come from clinical sources or from specialized research datasets, such as a brain CT dataset that provides high-quality annotated scans for training and testing AI models.

Role in Early Diagnosis:

- Large datasets allow the AI to learn normal brain anatomy across different ages, genders, and health conditions.

- By comparing a new patient’s scans to thousands of reference scans, AI can detect subtle deviations that may indicate early disease, even before clinical symptoms appear.
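To make the idea of comparing a patient against reference scans concrete, here is a minimal sketch of a normative comparison: one patient measurement is expressed as a z-score relative to a reference group. The measurement (hippocampal volume in mL), the reference values, and the 2.0 cut-off are hypothetical, and real systems usually also adjust for age, sex, and head size, which this sketch ignores.

```python
from statistics import mean, stdev


def deviation_zscore(patient_value: float, reference_values: list[float]) -> float:
    """Number of standard deviations a patient's value lies from the reference mean."""
    if len(reference_values) < 2:
        raise ValueError("need at least two reference values")
    mu = mean(reference_values)
    sigma = stdev(reference_values)  # sample standard deviation
    if sigma == 0:
        raise ValueError("reference values have no spread")
    return (patient_value - mu) / sigma


def is_atypical(patient_value: float, reference_values: list[float], threshold: float = 2.0) -> bool:
    """Flag values further than `threshold` SDs from the mean (the cut-off is an assumption)."""
    return abs(deviation_zscore(patient_value, reference_values)) > threshold


# Hypothetical hippocampal volumes (mL) from age-matched reference scans
reference = [3.0, 3.2, 3.4, 3.6, 3.8]
z = deviation_zscore(2.6, reference)
print(f"z = {z:.2f}, atypical: {is_atypical(2.6, reference)}")  # z = -2.53, atypical: True
```

A z-score is used here because it puts measurements with different units on one scale, so several features can be flagged with the same rule.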

2. Pattern Recognition

The model analyzes each image to identify patterns, such as:

- Reduced tissue density in the hippocampus or basal ganglia

- Microcalcifications

- Slight asymmetry between brain regions

- Enlargement of the ventricular system
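Two of these patterns are often summarized as simple ratios. The sketch below computes a left-right asymmetry index and Evans’ index (maximal frontal-horn width divided by maximal inner skull diameter on the same axial slice), a widely used marker of ventricular enlargement for which values above about 0.3 are commonly treated as abnormal. The input measurements are hypothetical; in practice they would come from an automated segmentation.

```python
def asymmetry_index(left: float, right: float) -> float:
    """Percent left-right asymmetry: 100 * (L - R) / mean(L, R)."""
    mean_lr = (left + right) / 2
    if mean_lr == 0:
        raise ValueError("both measurements are zero")
    return 100.0 * (left - right) / mean_lr


def evans_index(frontal_horn_width_mm: float, inner_skull_width_mm: float) -> float:
    """Maximal frontal-horn width divided by maximal inner skull diameter (same slice)."""
    if inner_skull_width_mm <= 0:
        raise ValueError("skull width must be positive")
    return frontal_horn_width_mm / inner_skull_width_mm


# Hypothetical measurements: hippocampal volumes in mL, widths in mm
print(round(asymmetry_index(3.1, 3.5), 1))  # -12.1 (left smaller than right)
print(round(evans_index(42.0, 130.0), 3))   # 0.323, above the commonly cited 0.3
```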

Role in Early Diagnosis:

- AI can detect fine structural changes that are hard to see with the naked eye.

- These early patterns may precede cognitive or motor symptoms by years.

- By flagging these subtle deviations, AI highlights patients who may benefit from early monitoring or preventive interventions.

Example:

For Alzheimer’s disease, AI can quantify hippocampal atrophy across hundreds of slices, identifying patients at risk of progression before memory problems become noticeable. For Parkinson’s disease, subtle calcification patterns in the basal ganglia may indicate early neurodegeneration before tremor or rigidity occurs.

3. Output Generation

AI can then provide:

- A probability score for a potential disease

- Visual highlighting of areas of concern on the scans

- A classification of risk level (low, moderate, high)
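One common way to turn image features into a probability score and a risk level is a logistic model followed by fixed cut-offs. This is a minimal sketch under stated assumptions: the feature names, weights, bias, and the 0.33/0.66 band boundaries are invented for illustration, whereas a real system would learn its weights from data and set its thresholds through clinical validation.

```python
import math


def risk_probability(features: dict[str, float], weights: dict[str, float], bias: float) -> float:
    """Logistic model: p = 1 / (1 + exp(-(bias + sum of weight * feature)))."""
    score = bias + sum(weights[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-score))


def risk_level(p: float, low_cut: float = 0.33, high_cut: float = 0.66) -> str:
    """Map a probability to a coarse risk band (cut-offs are illustrative assumptions)."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    if p < low_cut:
        return "low"
    if p < high_cut:
        return "moderate"
    return "high"


# Hypothetical weights and features, not taken from any trained model
weights = {"hippocampal_z": -1.2, "evans_index_excess": 8.0}
features = {"hippocampal_z": -2.5, "evans_index_excess": 0.02}
p = risk_probability(features, weights, bias=-2.0)
print(f"p = {p:.2f}, risk = {risk_level(p)}")  # p = 0.76, risk = high
```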

Role in Early Diagnosis:

- These outputs give clinicians actionable insights immediately.

- Visual maps of risk areas allow doctors to focus on subtle changes that would otherwise be missed.

- Risk classification helps prioritize patients for follow-up, lifestyle intervention, or early therapy.

Practical Benefit:

Even if a patient currently shows no symptoms, AI can alert clinicians to the earliest structural changes. This proactive approach enables early interventions that may slow disease progression, improve outcomes, and reduce long-term healthcare costs.

How AI Helps with Neurodegenerative Diseases

Neurodegenerative diseases, such as Alzheimer’s, Parkinson’s, and Huntington’s disease, develop slowly over many years. Early symptoms are often subtle and easily overlooked, including minor memory lapses, slight changes in movement, or small cognitive declines. By the time these signs become noticeable, significant damage may already have occurred in the brain.

This is where AI for brain CT becomes a game-changer. Modern algorithms can detect patterns that are invisible to the human eye, allowing clinicians to identify neurodegenerative processes long before obvious symptoms appear.

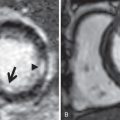

1. Alzheimer’s Disease

One of the first areas affected by Alzheimer’s disease is the hippocampus, a brain structure responsible for memory and spatial orientation. In the early stages, the disease causes slight atrophy in this region, which is difficult to detect with the naked eye on CT scans.

Role of AI:

- Algorithms can segment the hippocampus and measure its volume precisely, especially on thin-slice scans with submillimeter resolution.

- They track the density of gray and white matter, detecting subtle changes that may indicate early cognitive decline.
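Once an algorithm has segmented the hippocampus, its volume follows from counting the voxels in the mask and multiplying by the voxel size, which is why thin slices and small voxel spacing matter for precision. The sketch assumes a binary mask stored as nested lists (slices × rows × columns) and a hypothetical spacing; real pipelines would typically use arrays loaded from DICOM or NIfTI files.

```python
def mask_volume_ml(mask: list[list[list[int]]], spacing_mm: tuple[float, float, float]) -> float:
    """Volume of a binary 3-D mask in millilitres (1 mL = 1000 mm^3).

    mask: nested lists indexed [slice][row][column]; non-zero means "inside the structure".
    spacing_mm: (slice thickness, row spacing, column spacing) in millimetres.
    """
    voxel_mm3 = spacing_mm[0] * spacing_mm[1] * spacing_mm[2]
    n_voxels = sum(1 for slice_ in mask for row in slice_ for v in row if v)
    return n_voxels * voxel_mm3 / 1000.0


# Hypothetical toy hippocampus mask: 2 slices of 2 x 3 voxels, 5 voxels inside
toy_mask = [
    [[0, 1, 1], [0, 1, 0]],
    [[1, 1, 0], [0, 0, 0]],
]
print(mask_volume_ml(toy_mask, (1.0, 0.5, 0.5)))  # 5 voxels x 0.25 mm^3 = 1.25 mm^3
```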

Practical Example:

By analyzing 300 CT scans of patients with mild cognitive impairment, a model can identify micropatterns of white matter change. This helps clinicians pinpoint patients at higher risk of progressing to dementia before that progression becomes clinically obvious, enabling earlier intervention and therapy adjustments.

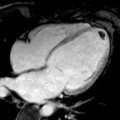

In the study “CT‑based volumetric measures obtained through deep learning: Association with biomarkers of neurodegeneration,” the authors trained a neural network on large sets of CT scans and comparable MRI scans.

The model automatically segmented brain tissue and calculated six CT-derived volumetric measures (CTVMS), including total brain volume, gray matter volume, and cerebrospinal fluid volume. These measures clearly distinguished patients with dementia, including prodromal dementia, from healthy controls.
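As an illustration only, not the study’s method, here is how tissue volumes can be derived from an automatic segmentation. This hypothetical example assumes a label map where 1 = gray matter, 2 = white matter, and 3 = cerebrospinal fluid, and adds the brain parenchymal fraction (parenchyma divided by parenchyma plus CSF, a simplification that ignores other compartments) as one example of a derived ratio.

```python
from collections import Counter

# Hypothetical label scheme for a segmented CT volume (0 = background)
LABELS = {1: "gray_matter", 2: "white_matter", 3: "csf"}


def tissue_volumes_ml(label_map: list[list[list[int]]], voxel_mm3: float) -> dict[str, float]:
    """Count voxels per label and convert each count to millilitres."""
    counts = Counter(v for slice_ in label_map for row in slice_ for v in row)
    return {name: counts[label] * voxel_mm3 / 1000.0 for label, name in LABELS.items()}


def brain_parenchymal_fraction(volumes: dict[str, float]) -> float:
    """(gray + white matter) / (gray + white matter + CSF)."""
    parenchyma = volumes["gray_matter"] + volumes["white_matter"]
    intracranial = parenchyma + volumes["csf"]
    if intracranial == 0:
        raise ValueError("no labelled tissue found")
    return parenchyma / intracranial


toy_labels = [[[1, 1, 2, 3], [2, 2, 3, 0]]]  # one tiny hypothetical slice
vols = tissue_volumes_ml(toy_labels, voxel_mm3=1.0)
print(vols, round(brain_parenchymal_fraction(vols), 3))
```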

2. Parkinson’s Disease

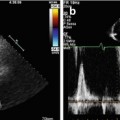

Although MRI is generally more sensitive for diagnosing Parkinson’s disease, CT scans can also provide useful information. One potential marker is abnormal mineral deposition, such as calcification in specific brain regions.

Role of AI:

- Algorithms analyze tissue density and identify patterns of calcification that are difficult to see visually.

- They can compare these changes with patient databases to assess the risk of disease progression.
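A simple version of calcification detection is density thresholding: calcium is much denser than brain tissue, so voxels above a Hounsfield-unit (HU) cut-off inside a region of interest can be flagged. In this sketch the 100 HU threshold, the region mask, and the values are assumptions; published methods use different cut-offs and usually add noise handling and learned features on top.

```python
def calcified_fraction(hu_volume, roi_mask, threshold_hu: float = 100.0) -> float:
    """Fraction of region-of-interest voxels at or above `threshold_hu`.

    hu_volume and roi_mask are nested lists with the same [slice][row][column] shape.
    The default threshold is an illustrative assumption, not a validated cut-off.
    """
    roi_voxels = 0
    calcified = 0
    for hu_slice, mask_slice in zip(hu_volume, roi_mask):
        for hu_row, mask_row in zip(hu_slice, mask_slice):
            for hu, inside in zip(hu_row, mask_row):
                if inside:
                    roi_voxels += 1
                    if hu >= threshold_hu:
                        calcified += 1
    if roi_voxels == 0:
        raise ValueError("region of interest is empty")
    return calcified / roi_voxels


# Hypothetical basal ganglia patch: 5 voxels in the ROI, 2 of them calcium-dense
hu = [[[35, 40, 180], [250, 30, 45]]]
roi = [[[1, 1, 1], [1, 1, 0]]]
print(calcified_fraction(hu, roi))  # 0.4
```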

Practical Example:

AI can process hundreds of CT slices and detect patterns that correlate with the severity of motor symptoms, helping physicians make more informed decisions about early treatment strategies.

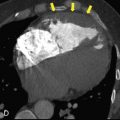

3. Chronic Vascular Changes

Microinfarcts, changes in tissue density, and peripheral atrophy are common signs of chronic vascular damage in the brain. These changes are particularly difficult to track manually across routine check-ups.

Role of AI:

- Lesion counting: The algorithm automatically identifies the number of microinfarcts and other small pathologies.

- Monitoring progression: It compares a series of scans for the same patient over time to track disease progression or stabilization.

- Study comparison: Algorithms match new scans with patient history and databases of similar cases to detect atypical patterns and predict possible complications.
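Automatic lesion counting is usually built on connected-component labeling: adjacent lesion pixels are grouped, and each group counts as one lesion. The sketch below works on a single 2-D binary slice with 4-connectivity and a hypothetical minimum size for ignoring single-pixel noise; a production tool would typically work in 3-D and start from a learned lesion segmentation.

```python
from collections import deque


def count_lesions(mask: list[list[int]], min_size: int = 1) -> list[int]:
    """Return the size (in pixels) of every 4-connected lesion with at least `min_size` pixels."""
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    sizes = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first flood fill from this unvisited lesion pixel
            size = 0
            queue = deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                size += 1
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if size >= min_size:
                sizes.append(size)
    return sizes


slice_ = [  # hypothetical binary lesion mask for one slice
    [1, 1, 0, 0, 0],
    [0, 1, 0, 0, 1],
    [0, 0, 0, 0, 1],
    [1, 0, 0, 0, 0],
]
print(count_lesions(slice_))              # [3, 2, 1] -> three lesions
print(count_lesions(slice_, min_size=2))  # [3, 2]
```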

Practical Example:

If a patient has a series of CT scans from the past 3–5 years, AI can generate a “change map” of the brain’s vascular system, visually highlighting areas of highest risk and providing physicians with a clear tool for treatment planning.
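At its simplest, a change map is a voxel-by-voxel difference between two scans of the same patient, with large changes flagged. This sketch assumes the scans have already been co-registered onto the same grid (in practice a separate, non-trivial step) and uses a hypothetical 5 HU threshold on a single slice; real CT noise would also call for smoothing before comparison.

```python
def change_map(baseline, follow_up, threshold_hu: float = 5.0):
    """Per-voxel HU difference (follow-up minus baseline) and a mask of large changes.

    Both inputs are 2-D nested lists on the same, already co-registered grid.
    """
    if len(baseline) != len(follow_up) or any(
        len(row_b) != len(row_f) for row_b, row_f in zip(baseline, follow_up)
    ):
        raise ValueError("scans must share the same grid")
    diff = [[f - b for b, f in zip(row_b, row_f)] for row_b, row_f in zip(baseline, follow_up)]
    flagged = [[abs(d) >= threshold_hu for d in row] for row in diff]
    return diff, flagged


baseline = [[30, 32], [28, 40]]   # hypothetical HU values, earlier scan
follow_up = [[30, 25], [29, 33]]  # same positions on a later scan
diff, flagged = change_map(baseline, follow_up)
print(diff)     # [[0, -7], [1, -7]]
print(flagged)  # [[False, True], [False, True]]
```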

4. Huntington’s Disease

Huntington’s disease primarily affects the basal ganglia, a brain region involved in movement control. Early structural changes can include atrophy of the caudate nucleus and putamen, which are subtle and often missed on standard CT scans.

Role of AI:

- Algorithms measure volumes of the basal ganglia with high precision.

- They detect subtle tissue density changes and progressive atrophy over time.

- AI can track motor-related structural changes, even before clinical motor symptoms become apparent.
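Tracking progressive atrophy usually means fitting a trend to repeated volume measurements. Here is a minimal sketch that estimates an annualized atrophy rate with an ordinary least-squares slope, expressed as a percentage of the first measurement; the caudate volumes and time points are hypothetical.

```python
def annual_atrophy_rate(years: list[float], volumes_ml: list[float]) -> float:
    """Least-squares slope of volume vs. time, as percent of the first volume per year."""
    n = len(years)
    if n < 2 or n != len(volumes_ml):
        raise ValueError("need at least two paired time points")
    mean_t = sum(years) / n
    mean_v = sum(volumes_ml) / n
    sxx = sum((t - mean_t) ** 2 for t in years)
    if sxx == 0:
        raise ValueError("time points must differ")
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in zip(years, volumes_ml))
    slope = sxy / sxx  # mL per year
    return 100.0 * slope / volumes_ml[0]


# Hypothetical caudate volumes (mL) at baseline and three yearly follow-ups
rate = annual_atrophy_rate([0, 1, 2, 3], [4.0, 3.9, 3.8, 3.7])
print(f"{rate:.1f} % per year")  # -2.5 % per year
```

Fitting a line to all visits, rather than comparing only the first and last scan, makes the estimate less sensitive to noise in any single measurement.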

Practical Example:

By analyzing longitudinal CT scans of patients with a genetic predisposition to Huntington’s, AI can identify early patterns of atrophy, helping neurologists recommend early interventions and monitor disease progression.

5. Multiple Sclerosis (MS)

Multiple sclerosis causes demyelination in the white matter of the brain and spinal cord. While MRI is the primary imaging method, CT can capture calcified lesions or secondary structural changes.

Role of AI:

- Detects and counts white matter lesions automatically.

- Monitors the development or regression of lesions over time.

- Compares scans to databases to assess the risk of relapse or progression.

Practical Example:

In patients with early MS, AI can quantify lesion load across dozens of CT slices, providing a visual and numerical map of disease activity. This assists physicians in adjusting therapies more precisely.
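Quantifying lesion load across slices comes down to summing lesion voxels, converting them to a volume, and comparing time points. The sketch below does that under hypothetical assumptions: per-slice lesion voxel counts are already available, the pixel size and slice thickness are invented, and a ±10% tolerance separates “stable” from “progression” or “regression”.

```python
def lesion_load_ml(lesion_voxels_per_slice: list[int], pixel_area_mm2: float,
                   slice_thickness_mm: float) -> float:
    """Total lesion volume in mL from per-slice lesion voxel counts."""
    total_voxels = sum(lesion_voxels_per_slice)
    return total_voxels * pixel_area_mm2 * slice_thickness_mm / 1000.0


def load_trend(baseline_ml: float, follow_up_ml: float, tolerance: float = 0.10) -> str:
    """Classify relative change in lesion load (the tolerance is an assumption)."""
    if baseline_ml == 0:
        return "new activity" if follow_up_ml > 0 else "stable"
    change = (follow_up_ml - baseline_ml) / baseline_ml
    if change > tolerance:
        return "progression"
    if change < -tolerance:
        return "regression"
    return "stable"


# Hypothetical scans: 0.5 x 0.5 mm pixels, 1 mm slices
before = lesion_load_ml([0, 120, 340, 200, 0], pixel_area_mm2=0.25, slice_thickness_mm=1.0)
after = lesion_load_ml([10, 150, 400, 260, 20], pixel_area_mm2=0.25, slice_thickness_mm=1.0)
print(round(before, 3), round(after, 3), load_trend(before, after))  # 0.165 0.21 progression
```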

What AI Can Do vs. What a Physician Can Do

The table below compares how physicians and AI handle different tasks in brain CT analysis. Its purpose is not to rank them but to show how their strengths complement each other. A physician contributes clinical judgment, contextual understanding, and direct communication with the patient. AI can process large volumes of data in seconds and detect subtle patterns that might otherwise be overlooked. Together, they cover far more ground than either could alone.

| Task | Physician | AI | Explanation |
| --- | --- | --- | --- |
| Rapid visual comparison of scans | Excellent | Excellent | A physician can evaluate one or a few scans, while AI can quickly visualize and annotate anomalies, helping the doctor avoid missing subtle details. |
| Comparison of 10,000+ scans in seconds | No | Yes | It is physically impossible for a human to process tens of thousands of images in a short time. AI can handle large volumes of data almost instantly, which is useful for large-scale studies or historical analyses. |
| Detection of micropatterns at the pixel level | Limited | Excellent | Some changes at the pixel level are too small for the human eye, but deep learning algorithms can detect them, such as microatrophy or small calcified regions. |
| Handling large datasets and statistics | Moderate | Excellent | A physician relies on experience and knowledge, but manually analyzing thousands of historical cases is challenging. AI can compare current data against vast databases and identify rare patterns. |
| Clinical decisions, diagnosis, patient communication | Yes | No | Only a physician can make the final diagnosis, prescribe treatment, and communicate with the patient. AI supports the process but does not replace human expertise and empathy. |

Conclusion

AI for brain CT is becoming a genuinely useful tool for spotting the earliest signs of neurodegenerative diseases — changes that were almost impossible to notice during a routine scan. It picks up subtle patterns across the brain, like small shifts in the ventricles, slight thinning of the cortex, or tiny microvascular changes, and turns them into insights doctors can actually use. Instead of giving a simple yes or no, it highlights areas that deserve closer attention, helping clinicians decide who needs a follow-up or more detailed testing.

The real value comes from combining good data with smart processing. Clean, well-labeled datasets, careful preparation of scans, and transparent model outputs make the difference between a system that’s genuinely helpful and one that just produces confusing numbers. With these in place, CT scans can become more than emergency or secondary tools — they can serve as an early-warning system, giving doctors a head start before symptoms even appear.

Ultimately, AI isn’t here to replace doctors — it’s here to give them sharper eyes and more confidence.
