Image Quality

4.0 INTRODUCTION

At a fundamental level, image quality is about diagnostic accuracy, which is a difficult quantity to measure. For this reason, most image quality metrics are surrogates that are assumed to be linked to task performance. Optimal image quality typically requires balancing image resolution, contrast, and noise against other considerations such as dose or cost. The results of image quality assessments are typically either a ratio measure, such as the contrast-to-noise ratio (CNR) or signal-to-noise ratio (SNR), or a performance curve, such as a contrast-detail curve or a receiver operating characteristic (ROC) curve.

4.1 SPATIAL RESOLUTION

Spatial resolution describes the level of detail that can be seen in an image, with low-resolution images appearing “blurry” and high-resolution images appearing “sharp.”

1. Physical mechanisms of blurring

a. X-ray focal spot blur

b. Positron diffusion

c. Optical diffusion in a phosphor-based detector

d. Image processing for noise control—smoothing operations that employ averaging

2. Convolution—a mathematical way to describe blurring

a. Involves a blurring kernel that integrates over position (Fig. 4-1)

b. Assumes that the blurring operation is shift-invariant

c. Can be used to smooth noisy data (Fig. 4-2)

d. Smoothing can reduce the effects of noise but can also blur fine structure in images

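e. Convolution formula (see Fig. 4-1 caption for description of equation variables):

$$G(x) = \int_{-\infty}^{\infty} H(x')\,k(x - x')\,dx'$$

where H(x) is the input function, k(x) is the blurring kernel, and G(x) is the blurred (output) function.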

▪ FIGURE 4-2 A plot of the noisy input data H(x) and of the smoothed function G(x), where x is the pixel number (x = 1, 2, 3, …). The first few elements in the data shown on this plot correspond to the data illustrated in Figure 4-1. The input function H(x) is random noise distributed around a mean value of 50. Convolution with the boxcar average results in substantial smoothing, as is evident from the much smoother G(x) function, which stays closer to this mean value.

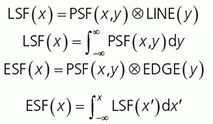
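The boxcar smoothing in Figure 4-2 can be reproduced with a short discrete convolution. This is a minimal sketch: the 5-point kernel width, the noise standard deviation of 10, and the helper name `convolve_valid` are assumptions for illustration, not values taken from Figure 4-1. Only outputs where the kernel lies entirely inside the data are kept.

```python
import random
import statistics

def convolve_valid(h, k):
    """Discrete convolution G[n] = sum_j H[n + K - 1 - j] * k[j], keeping only the
    outputs where the kernel lies entirely inside the input (the "valid" region)."""
    K = len(k)
    return [sum(h[n + K - 1 - j] * k[j] for j in range(K))
            for n in range(len(h) - K + 1)]

rng = random.Random(42)
# Hypothetical noisy input: random values around a mean of 50 (sigma = 10 is an assumption).
H = [rng.gauss(50.0, 10.0) for _ in range(200)]
boxcar = [1.0 / 5] * 5          # hypothetical 5-point boxcar (averaging) kernel
G = convolve_valid(H, boxcar)

# For uncorrelated noise, a 5-point average lowers the standard deviation by about sqrt(5).
print(f"input:    mean={statistics.fmean(H):.1f}  std={statistics.pstdev(H):.1f}")
print(f"smoothed: mean={statistics.fmean(G):.1f}  std={statistics.pstdev(G):.1f}")
```

The mean is preserved while the fluctuations shrink; the cost, as noted above, is that any genuine fine structure narrower than the kernel would be blurred as well.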

3. The spatial domain: spread functions

a. Blurring can be characterized by a point-spread function (PSF) (Fig. 4-3), a line-spread function (LSF) (Fig. 4-4), or an edge-spread function (ESF), as compared in Figure 4-5.

b. These are generated by imaging a point, a slit (line), or an edge (Fig. 4-5).

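c. Integral relationships link these spread functions: the LSF is the PSF integrated along the direction of the line, and the ESF is the running integral of the LSF (equivalently, the LSF is the derivative of the ESF).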
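The integral relationships between the spread functions can be checked numerically. This sketch assumes a hypothetical rotationally symmetric Gaussian PSF (σ = 0.1 mm) sampled every 0.01 mm; the function names are illustrative. It integrates the PSF along y to get the LSF, accumulates the LSF into an ESF, and differentiates the ESF to recover the LSF.

```python
import math

SIGMA, DX = 0.1, 0.01                       # hypothetical blur width and sample spacing (mm)
XS = [(i - 100) * DX for i in range(201)]   # positions from -1 mm to +1 mm

def psf(x, y):
    """Hypothetical rotationally symmetric Gaussian PSF with unit area."""
    return math.exp(-(x * x + y * y) / (2 * SIGMA ** 2)) / (2 * math.pi * SIGMA ** 2)

def lsf_from_psf(xs, ys, dy):
    """LSF(x): the PSF integrated along y, the direction of the line (rectangle rule)."""
    return [sum(psf(x, y) for y in ys) * dy for x in xs]

def esf_from_lsf(lsf, dx):
    """ESF: running integral of the LSF from the left (rectangle rule)."""
    esf, total = [], 0.0
    for value in lsf:
        total += value * dx
        esf.append(total)
    return esf

def lsf_from_esf(esf, dx):
    """LSF recovered by differentiating the ESF (backward differences)."""
    return [esf[0] / dx] + [(esf[i] - esf[i - 1]) / dx for i in range(1, len(esf))]

lsf = lsf_from_psf(XS, XS, DX)
esf = esf_from_lsf(lsf, DX)
recovered = lsf_from_esf(esf, DX)
print(f"ESF far left={esf[0]:.4f}, far right={esf[-1]:.4f}")
```

Because the PSF here has unit area, the ESF rises from about 0 to about 1 across the edge; differentiating that edge profile is a common way to obtain the LSF when a slit is impractical.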

4. The frequency domain: modulation transfer function

a. Fourier analysis describes how a signal variation in the spatial domain can be represented as a sum of sine waves, each with its own spatial frequency, phase, and amplitude, as shown in Figure 4-6.

b. The Fourier transform converts an image from the spatial domain to the frequency domain.

c. The Fourier transform of a sinusoidal wave is a pair of impulses at plus and minus the wave frequency (a single impulse at the wave frequency when only positive frequencies are shown).

d. The period of a wave is the distance spanned by one cycle: period (mm) = 1/frequency (cycles/mm); for example, a frequency of 2 cycles/mm corresponds to a period of 0.5 mm.

e. Fine-scale image structure is typically reflected by high-frequency content in the Fourier transform.

f. The modulation transfer function (MTF) describes how sine-wave components are modulated by an imaging system (Fig. 4-7).

g. Sampling describes the mathematical process of going from a continuous function to a finite set of measured values; sampling aperture, a, and sampling pitch, Δ, are described (Fig. 4-8).

h. Sampling at a fixed pixel pitch leads to fundamental limits on the resolution of the sampled signal.

i. Nyquist frequency = 1/(2Δ) (at least two samples per cycle of the input signal) is the maximum frequency that can be accurately rendered in the image; when this limit is violated, aliasing occurs (Fig. 4-9).

j. Frequencies larger than the Nyquist frequency are reflected as lower frequencies (Fig. 4-10).
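Several of the frequency-domain ideas above (MTF, Nyquist frequency, aliasing) can be illustrated with a plain discrete Fourier transform. This is a minimal sketch under stated assumptions: the MTF is computed as the magnitude of the Fourier transform of a hypothetical Gaussian LSF, normalized to 1 at zero frequency, and the aliasing example assumes a 7 cycles/mm sine sampled at Δ = 0.1 mm. The helper names are illustrative, and the direct O(N²) transform is used only to avoid external libraries.

```python
import cmath
import math

def dft_magnitudes(samples):
    """|X[k]| for k = 0..N-1 from the direct O(N^2) discrete Fourier transform."""
    n_pts = len(samples)
    return [abs(sum(s * cmath.exp(-2j * math.pi * k * n / n_pts)
                    for n, s in enumerate(samples)))
            for k in range(n_pts)]

def mtf_from_lsf(lsf, pitch, freq):
    """MTF at `freq` (cycles/mm): |Fourier transform of the LSF|, normalized to 1 at f = 0."""
    ft = sum(v * cmath.exp(-2j * math.pi * freq * i * pitch) for i, v in enumerate(lsf))
    return abs(ft) / sum(lsf)

def aliased_frequency(freq, pitch):
    """Apparent frequency of a sine sampled at pitch (mm), folded into 0..1/(2*pitch)."""
    fs = 1.0 / pitch                          # sampling frequency, samples/mm
    return abs(freq - round(freq / fs) * fs)

# 1) MTF of a hypothetical Gaussian LSF (sigma = 0.1 mm, sampled every 0.01 mm).
sigma, dx = 0.1, 0.01
lsf = [math.exp(-0.5 * ((i * dx - 1.0) / sigma) ** 2) for i in range(201)]
for f in (0.0, 1.0, 2.0):
    analytic = math.exp(-2 * math.pi ** 2 * sigma ** 2 * f ** 2)   # Gaussian MTF
    print(f"f={f} cycles/mm: MTF={mtf_from_lsf(lsf, dx, f):.4f} (analytic {analytic:.4f})")

# 2) Aliasing: 7 cycles/mm sampled at pitch 0.1 mm (Nyquist = 5 cycles/mm).
pitch, n_pts = 0.1, 100
signal = [math.sin(2 * math.pi * 7.0 * n * pitch) for n in range(n_pts)]
mags = dft_magnitudes(signal)
peak_bin = max(range(n_pts // 2 + 1), key=lambda k: mags[k])   # search 0..Nyquist only
apparent = peak_bin / (n_pts * pitch)                          # bin k <-> k/(N*pitch) cycles/mm
print("Nyquist:", 1 / (2 * pitch), "apparent:", apparent,
      "predicted:", aliased_frequency(7.0, pitch))
```

The 7 cycles/mm input appears at 3 cycles/mm, its reflection about the 5 cycles/mm Nyquist frequency; the single sine also shows up as one sharp peak in the DFT, matching the impulse description above.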

4.2 CONTRAST RESOLUTION

Contrast resolution refers to the ability to render subtle differences in displayed image grayscale. Contrast, CS, is defined in terms of a background intensity, S0, and a target intensity, S1:

$$C_S = \frac{S_0 - S_1}{S_0}$$
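As a small arithmetic check, assume contrast is computed as C = (S0 − S1)/S0, which is consistent with the Figure 4-11 caption (S0 > S1 gives 0% to 100%, and a less attenuating target gives negative contrast). The function name and intensity values below are hypothetical.

```python
def contrast(s0, s1):
    """Contrast C = (S0 - S1) / S0 for background intensity S0 and target intensity S1."""
    if s0 == 0:
        raise ValueError("background intensity S0 must be nonzero")
    return (s0 - s1) / s0

# Hypothetical intensities behind soft tissue (S0) and behind a target (S1).
print(f"bone-like target: {contrast(100.0, 60.0):.0%}")    # more attenuating: positive
print(f"air-pocket target: {contrast(100.0, 120.0):.0%}")  # less attenuating: negative
```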

Contrast is evaluated at multiple stages during imaging: subject contrast, detector contrast, and image contrast.

1. Subject contrast is the contrast in the signal after interacting with the patient (Fig. 4-11).

▪ FIGURE 4-11 Projection radiography example illustrating a uniform beam incident upon the patient. X-rays interact with the tissues through various mechanisms (Chapter 3), so that a structure (B) attenuates the beam differently from the surrounding tissue (A). In this case, B (e.g., bone) is more attenuating than A (e.g., soft tissue), and S0 > S1; thus contrast ranges from 0% to 100%. For less attenuating structures (e.g., air pockets), negative contrast is also possible. Subject contrast also depends on intrinsic and extrinsic factors that relate to anatomical or functional changes. Intrinsic factors arise from physical or physiological properties, such as a pulmonary nodule in a lung. Extrinsic factors arise from acquisition protocols (e.g., changing to a lower kV to emphasize the ribs for a rib radiograph, or to a higher kV to de-emphasize the ribs for a chest x-ray) or from introducing an iodinated contrast agent to identify the location of the vasculature or perfusion uptake in an organ.


2. Detector contrast arises as the detector transduces the incident signal through many possible processes that affect the contrast of structures of interest (Fig. 4-12).

3. Image contrast

a. Capture of digital image data allows for interactive adjustment of image contrast through window/level manipulation and lookup table (LUT) conversion (Fig. 4-13).

b. Medical images commonly have bit depths of 8, 10, 12, or 14 bits; the number of possible shades of gray is 2 raised to the number of bits, giving 256, 1,024, 4,096, or 16,384 levels, respectively.

c. Display monitors typically render 8 or 10 bits of luminance resolution (256 to 1,024 shades).
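Window/level adjustment can be thought of as building a lookup table that maps each stored value to a display gray level. This sketch assumes a simple linear ramp between level − window/2 and level + window/2, clipped to black and white outside that range, and an 8-bit display; real systems may use other (e.g., nonlinear) LUT shapes. The function names and the window/level settings are hypothetical.

```python
def window_level(value, window, level, out_bits=8):
    """Linear window/level mapping: stored value -> display gray level (0 .. 2**out_bits - 1).
    Values at or below level - window/2 map to black; at or above level + window/2 to white."""
    max_out = 2 ** out_bits - 1
    low = level - window / 2.0
    if value <= low:
        return 0
    if value >= low + window:
        return max_out
    return round((value - low) / window * max_out)

def build_lut(stored_bits, window, level, out_bits=8):
    """Precompute the display value for every possible stored value (2**stored_bits entries)."""
    return [window_level(v, window, level, out_bits) for v in range(2 ** stored_bits)]

# Number of gray levels for common stored bit depths.
for bits in (8, 10, 12, 14):
    print(bits, "bits ->", 2 ** bits, "gray levels")

# A 12-bit image shown on an 8-bit display with hypothetical settings.
lut = build_lut(12, window=1000, level=2048)
print(lut[1548], lut[2048], lut[2548])
```

Narrowing the window spreads a smaller range of stored values over all 256 display levels, which increases displayed contrast for that range at the expense of clipping everything outside it.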

4.3 NOISE AND NOISE TEXTURE

Noise in images arises from various random processes involved in generating an image (e.g., quantum noise, anatomical noise, electronic noise, structured noise).

1. Sources of image noise

a. Quantum noise describes randomness in a signal arising from randomness in the number of photons.

A Poisson distribution (e.g., of counts) with an expectation of Q counts is characterized by a mean value of y, µy, and a variance in y, σy², where

$$\mu_y = Q \quad\text{and}\quad \sigma_y^2 = Q.$$

Standard deviation, σy, is the square root of the variance.

Coefficient of variation, the standard deviation divided by the mean: σy/µy = 1/√Q.

Relative fluctuations are larger when fewer photons are generated.

Lower-dose procedures, therefore, have greater relative quantum noise.

Equivalently, the signal-to-noise ratio (SNR) = µy/σy = √Q; thus, in low-count regimes the SNR is lower and the coefficient of variation is larger.

b. Anatomic noise describes contrast generated by patient anatomy that is not important for the diagnosis.

Processing such as dual-energy subtraction can selectively remove interfering anatomy.

c. Electronic noise describes unwanted analog or digital signals in the imaging chain that contaminate the image signal.

Cooling detectors can reduce detector thermal noise, as can correlated double sampling.

d. Structured noise describes a reproducible pattern that reflects differences in the local gain or offset of digital detector components; “flat-field” correction algorithms remove structured noise.
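Two of the noise sources above can be checked with a short simulation. The first part draws Poisson counts (Knuth's multiplication method, chosen only because the Python standard library has no Poisson sampler) and shows that the variance tracks Q while the SNR grows as √Q. The second part sketches one common form of flat-field correction, dividing offset-subtracted data by an offset-subtracted uniform calibration exposure, on a hypothetical 5-pixel detector; the gains, offsets, exposures, and function names are all assumptions for illustration.

```python
import math
import random
import statistics

def poisson_sample(expected, rng):
    """One Poisson-distributed count via Knuth's multiplication method
    (suitable for modest means, where exp(-expected) does not underflow)."""
    threshold = math.exp(-expected)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1

def quantum_noise_stats(expected, n_trials=20000, seed=1):
    """Simulate repeated measurements with `expected` mean counts."""
    rng = random.Random(seed)
    counts = [poisson_sample(expected, rng) for _ in range(n_trials)]
    mean = statistics.fmean(counts)
    var = statistics.pvariance(counts)
    sd = math.sqrt(var)
    return {"mean": mean, "variance": var, "cov": sd / mean, "snr": mean / sd}

def flat_field_correct(raw, dark, flat):
    """Per-pixel offset/gain correction: (raw - dark) / (flat - dark), rescaled by the
    mean flat-field signal so corrected values stay in the original units."""
    gain = [f - d for f, d in zip(flat, dark)]
    mean_gain = statistics.fmean(gain)
    return [(r - d) / g * mean_gain for r, d, g in zip(raw, dark, gain)]

for q in (10, 100):
    s = quantum_noise_stats(q)
    print(f"Q={q}: mean={s['mean']:.2f} var={s['variance']:.2f} "
          f"SNR={s['snr']:.2f} (sqrt(Q)={math.sqrt(q):.2f})")

# Hypothetical detector with fixed per-pixel gain and offset (structured noise).
gains = [0.90, 1.10, 1.00, 0.95, 1.05]
offsets = [10.0, 12.0, 8.0, 11.0, 9.0]
dark = offsets[:]                                      # no exposure: offset only
flat = [g * 500 + o for g, o in zip(gains, offsets)]   # uniform calibration exposure
raw = [g * 1000 + o for g, o in zip(gains, offsets)]   # uniform test exposure
print(flat_field_correct(raw, dark, flat))             # equal values, up to rounding
```

Real detectors also add quantum and electronic noise to the flat-field frame, so in practice the calibration images are usually averaged; this sketch omits that step.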

2. Mathematical characterizations of noise

a. Noise magnitude is typically quantified by the variance (σ²) or standard deviation (σ).

b. Noise texture is typically quantified by the noise power spectrum, which is based on the autocovariance function.

c. The autocovariance function represents the covariance between two pixels as a function of their separation. For a pixel paired with itself (zero separation), the covariance is the variance.

e. Noise power spectrum (NPS) is the Fourier transform of the autocovariance function; it describes the contribution of different spatial frequencies to the image noise (Fig. 4-16).

▪ FIGURE 4-15 The panel shows autocovariance functions for the different noise textures in Figure 4-14. The central point in each autocovariance function is the pixel variance, which is identical for all three textures. However, moving away from the center, covariance in the highpass-noise textures (A) is seen to oscillate to zero (uniform gray). In the white noise textures (B), covariance immediately drops to zero. In the lowpass noise textures (C), covariance slowly and uniformly decays to zero.
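The link between autocovariance and noise power spectrum can be sketched in one dimension. This example assumes periodic (circular) estimates on a single 128-pixel noise profile with unit pixel pitch, which is a simplification of practical 2D NPS measurement (ensemble averaging over many regions, detrending, and physical scaling are omitted). The low-pass texture is a hypothetical 3-pixel moving average of the white noise and, unlike the textures in Figure 4-15, is not rescaled to equal variance.

```python
import cmath
import math
import random

def autocovariance(x):
    """Circular autocovariance C[m] = (1/N) * sum_n d[n] * d[(n + m) % N], d = x - mean.
    C[0] is the (population) variance."""
    n_pts = len(x)
    mean = sum(x) / n_pts
    d = [v - mean for v in x]
    return [sum(d[n] * d[(n + m) % n_pts] for n in range(n_pts)) / n_pts
            for m in range(n_pts)]

def noise_power_spectrum(x):
    """NPS[k]: discrete Fourier transform of the circular autocovariance (pixel pitch = 1).
    The circular autocovariance is symmetric (C[m] = C[N - m]), so the transform is real."""
    c = autocovariance(x)
    n_pts = len(c)
    return [sum(c[m] * cmath.exp(-2j * math.pi * k * m / n_pts) for m in range(n_pts)).real
            for k in range(n_pts)]

N = 128
rng = random.Random(0)
white = [rng.gauss(0.0, 1.0) for _ in range(N)]
# Low-pass texture: circular 3-pixel moving average of the same white noise.
lowpass = [(white[i - 1] + white[i] + white[(i + 1) % N]) / 3 for i in range(N)]

for name, profile in (("white", white), ("lowpass", lowpass)):
    c = autocovariance(profile)
    nps = noise_power_spectrum(profile)
    low_f = sum(nps[1:N // 8])                 # power near zero frequency
    high_f = sum(nps[3 * N // 8:5 * N // 8])   # power near the Nyquist frequency
    print(f"{name}: variance={c[0]:.3f} C[1]/C[0]={c[1] / c[0]:.2f} "
          f"low-f power={low_f:.1f} high-f power={high_f:.1f}")
```

The white profile's covariance drops to near zero beyond the central point and its power is spread across frequencies, whereas averaging neighbors makes the covariance decay more slowly and shifts the power toward low frequencies, mirroring the white and low-pass cases in Figure 4-15.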
