Adherence to agreed-upon practice guidelines is another performance indicator that can be measured for each radiologist. For example, a radiology practice can agree to use the Fleischner Society’s published guidelines for management of incidentally detected small lung nodules,9 and each radiologist’s adherence to and appropriate use of these guidelines can be measured as a component of peer review. Consistency matters because variability in recommendations for managing imaging findings can confuse referring physicians and patients.

Radiologist performance can also be evaluated by soliciting feedback from colleagues, trainees, staff, and patients. Specific attributes such as communication skills, professionalism, and “good citizenship” within a department can be assessed. In addition to clinical skills, a professional practice evaluation can include a summary of these personal evaluations and a review of patient or staff complaints and commendations.

For a peer-review process to be effective, it should be fair, transparent, consistent, and objective. Its conclusions should be defensible and should take a range of opinions into account. Peer-review activities should be timely, lead to useful action, and provide feedback through auditing.6,10

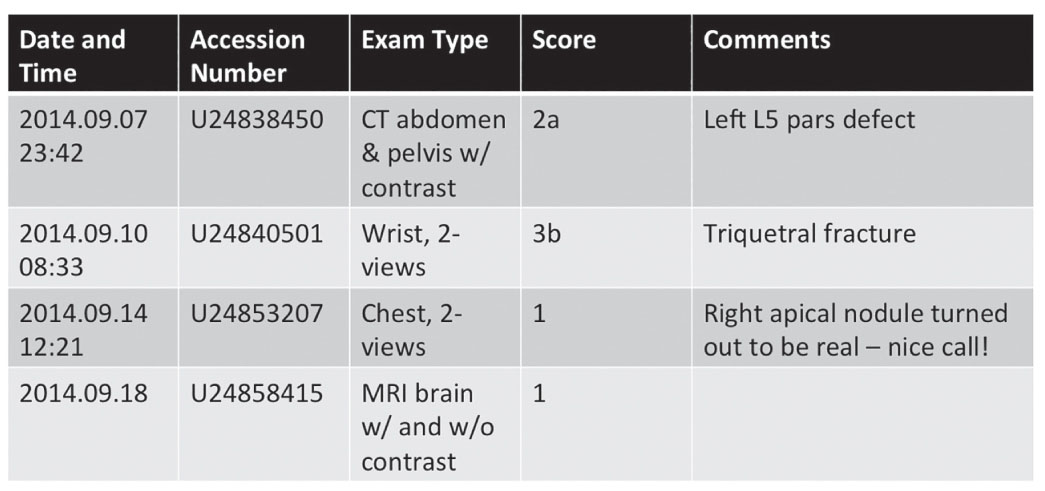

FIG. 11.1 ● A sample report from a peer-review reporting system showing the type of examination, the score given, and the reviewer’s comments.

METHODS OF PEER REVIEW IN RADIOLOGY

Retrospective Peer Review

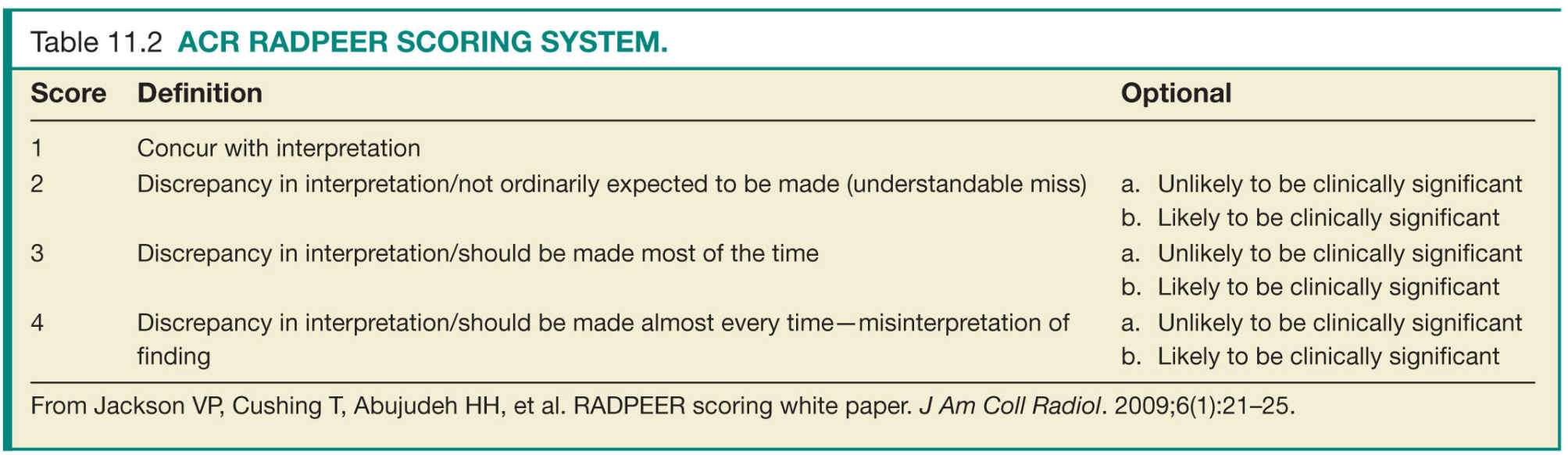

Peer review in radiology is primarily conducted retrospectively because digital archiving of diagnostic imaging tests and their interpretive reports makes retrospective peer review easy to perform and available to nearly all practicing radiologists. In retrospective peer review, the radiologist interpreting the current study reviews a previously interpreted comparison examination of the same patient, along with its report, and assigns a score that typically reflects agreement or various levels of disagreement. Some scoring systems also flag disagreements or subdivide scores into those that are clinically relevant and those that are not (Table 11.2).4 When a disagreement in interpretation occurs, the reviewer can provide feedback to the original interpreting radiologist through various processes. Examinations can be selected for peer review randomly or when they are reviewed for multidisciplinary conferences or consultations.
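Once scores are collected, they are usually summarized by modality and by radiologist, as in Figs. 11.2 and 11.3. The following is a minimal sketch of that tally, assuming a hypothetical record layout (`PeerReview`) and a hypothetical three-level scale (1 = agree, 2 = minor disagreement, 3 = major disagreement) with a separate clinical-significance flag. It does not reproduce Table 11.2 or any particular published scoring system.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class PeerReview:
    """One completed review of a prior interpretation (hypothetical layout)."""
    original_reader: str  # radiologist who issued the original report
    modality: str         # e.g. "CT", "US", "MR"
    score: int            # hypothetical scale: 1 agree, 2 minor, 3 major disagreement
    clinically_significant: bool = False  # meaningful only when score > 1


def tally_scores(reviews, key):
    """Count scores grouped by an attribute such as 'original_reader' or 'modality'."""
    table = defaultdict(Counter)
    for review in reviews:
        table[getattr(review, key)][review.score] += 1
    return {group: dict(counts) for group, counts in table.items()}


def significant_disagreement_rate(reviews, reader):
    """Fraction of a reader's reviewed cases scored as a clinically significant disagreement."""
    mine = [r for r in reviews if r.original_reader == reader]
    if not mine:
        return 0.0  # no reviewed cases for this reader
    flagged = sum(1 for r in mine if r.score > 1 and r.clinically_significant)
    return flagged / len(mine)
```

Grouping by `"modality"` gives the kind of distribution shown in Fig. 11.2, and grouping by `"original_reader"` gives the kind shown in Fig. 11.3.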

Although relatively easy to implement and perform, the retrospective case review model of peer review has received the most scrutiny for its inherent limitations.6,11,12 One significant weakness is the actual or potential lack of randomness in case selection. For example, a radiologist may opt to review less complex or apparently normal studies to minimize the time and effort spent on peer review. One approach to improving randomness is to assign for review the first case with a relevant comparison encountered on any given day. Additionally, a specific number of peer reviews by modality or body part can be required. Ideally, software applications integrated into the radiologist workflow can both reduce the time demands on radiologists and improve random sampling. Cases can be randomly selected for review from the picture archiving and communication system (PACS), radiology information system (RIS), or voice recognition (VR) software database and assigned to an appropriate radiologist. Progress of reviews can be tracked, and results can be recorded in a dedicated peer-review database (protected by local peer-review statutes, if applicable). Finally, radiologists whose cases were reviewed can be notified of the results.
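Software-driven random selection can be sketched as follows. This is a minimal illustration, assuming the day's worklist and each patient's most recent prior have already been exported from the PACS or RIS into plain records. `Exam`, `select_for_review`, and the per-modality quotas are hypothetical names, and no real PACS or RIS interface is called. As a simplification, a "relevant comparison" is taken to mean a prior of the same modality.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Exam:
    accession: str   # hypothetical identifier exported from the RIS/PACS
    patient_id: str
    modality: str    # e.g. "CT", "US", "MR"
    reader: str      # radiologist who interpreted the exam


def select_for_review(current_exams, priors, quotas, seed=None):
    """Randomly assign prior exams for peer review.

    current_exams: exams read today; the reader of each becomes the reviewer.
    priors: dict of patient_id -> most recent prior Exam (hypothetical lookup).
    quotas: dict of modality -> maximum number of reviews to assign.
    Returns a list of (reviewer, prior Exam) pairs.
    """
    rng = random.Random(seed)
    candidates = []
    for exam in current_exams:
        prior = priors.get(exam.patient_id)
        # Simplification: a relevant comparison is a prior of the same modality.
        if prior is None or prior.modality != exam.modality:
            continue
        if prior.reader == exam.reader:
            continue  # never assign radiologists their own cases
        candidates.append((exam.reader, prior))

    # Random ordering removes the reviewer's opportunity to pick easy or normal cases.
    rng.shuffle(candidates)

    assigned, used, seen = [], {}, set()
    for reviewer, prior in candidates:
        count = used.get(prior.modality, 0)
        # Skip duplicates and stop filling a modality once its quota is reached.
        if prior.accession in seen or count >= quotas.get(prior.modality, 0):
            continue
        assigned.append((reviewer, prior))
        used[prior.modality] = count + 1
        seen.add(prior.accession)
    return assigned
```

Tracking progress, storing results in a protected peer-review database, and notifying the original readers are left out. In practice, each would be a separate step that consumes the returned assignments.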

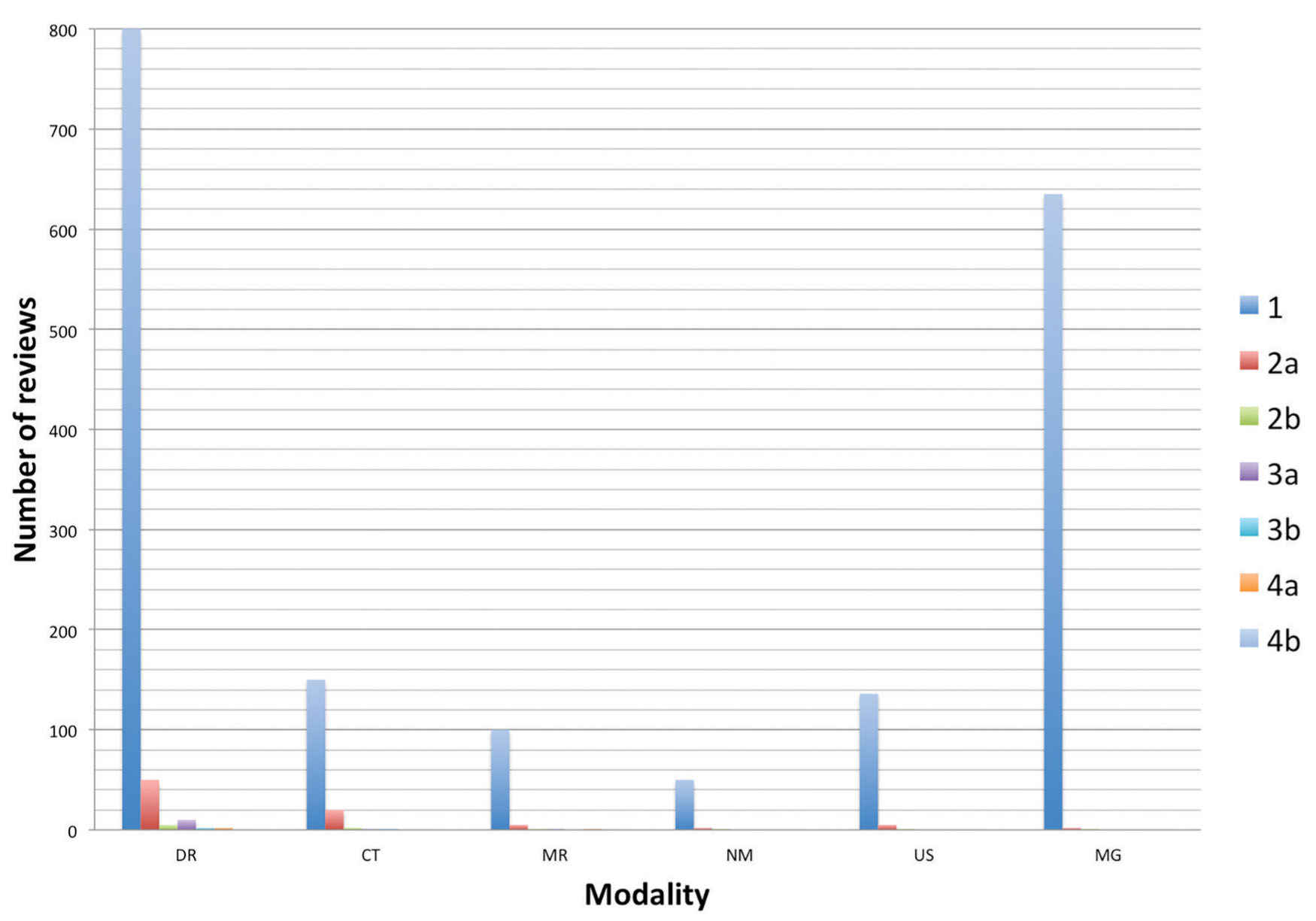

FIG. 11.2 ● Graph of sample peer-review data showing distribution of scores by modality.

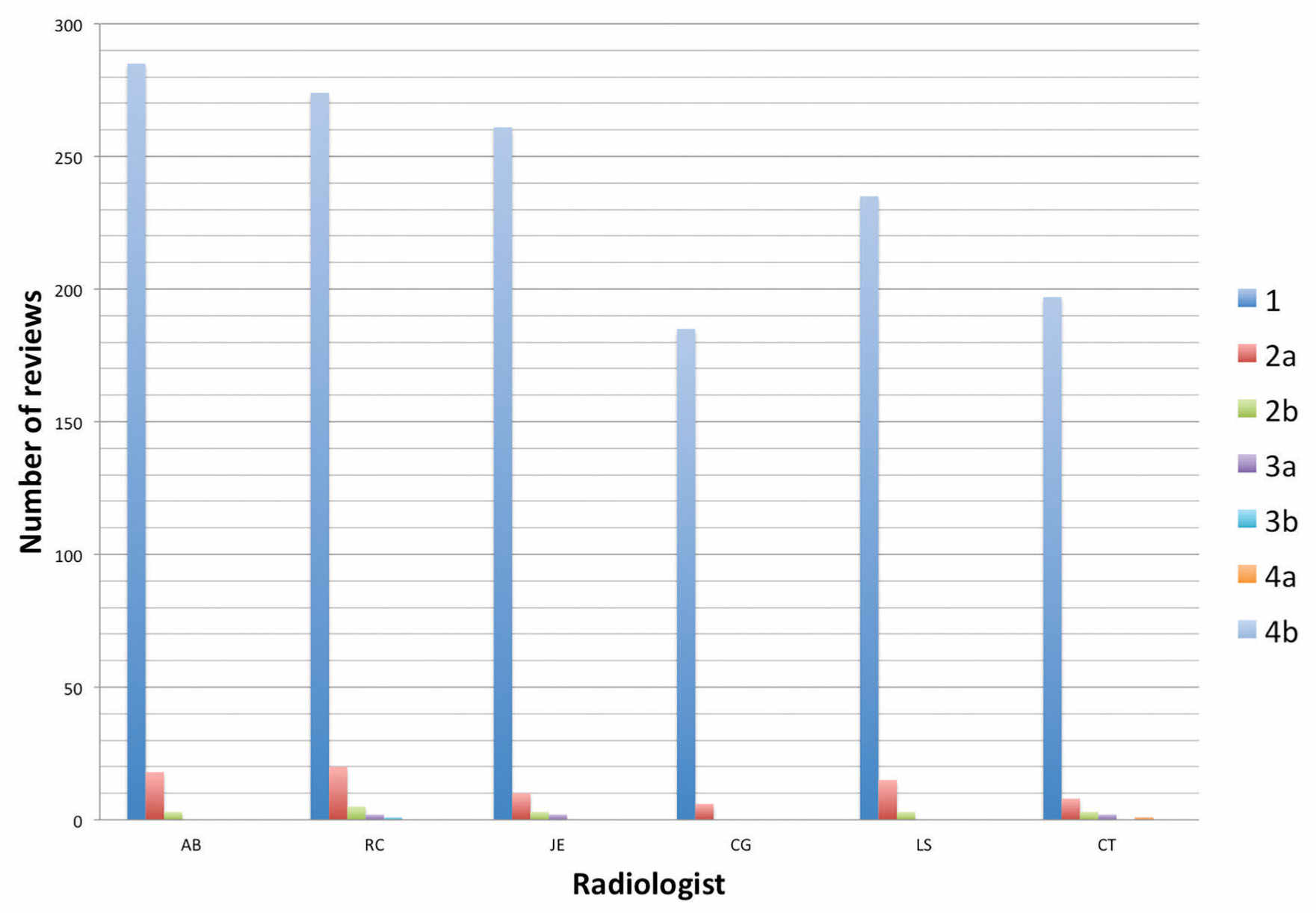

FIG. 11.3 ● Graph of sample peer-review data showing distribution of scores by radiologist.

Another major criticism of retrospective case review is that the anonymity of reviewer and reviewee is frequently compromised. For some practices, this may be an obstacle to objective peer review; for others, transparent peer review may be the cultural norm. Because reviewers can easily identify the radiologist who interpreted the case under review, conscious or unconscious biases may affect both the choice of cases to review and the score assigned. Likewise, reviewees can usually identify the reviewer simply by finding out who interpreted the subsequent relevant study. For example, a junior faculty member reviewing an abdominal ultrasound interpreted by the division chief or department chair may be inclined to assign a score of “agree,” either assuming that the more experienced radiologist in a leadership role is correct or for fear of potential retribution.

A third significant criticism of retrospective peer review is that the interval between the original interpretation and the peer review may be long enough for a misinterpretation to have already caused an adverse outcome for the patient. A simple solution is to set a maximum time period between the current examination and the comparison examination used for peer review.13 However, applying this criterion across the board will likely narrow the pool of studies eligible for peer review and may overemphasize inpatient examinations and radiography. A more prudent approach is to define a separate maximum time frame for each modality on the basis of its relative frequency across the practice, providing a large enough pool of examinations for review while keeping peer review meaningful.
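A per-modality time window can be expressed as a simple eligibility check. The sketch below assumes a hypothetical `MAX_INTERVAL_DAYS` table. Its values are illustrative placeholders, not recommendations, and a practice would derive its own values from its examination volumes. High-volume modalities get short windows because they still yield many eligible cases, and low-volume modalities get longer windows so the review pool stays large enough.

```python
from datetime import date

# Hypothetical maximum intervals (in days) between the comparison exam and the
# current exam. Illustrative only; set from the practice's own exam volumes.
MAX_INTERVAL_DAYS = {
    "XR": 7,    # high volume: a short window still yields many cases
    "CT": 30,
    "US": 60,
    "MR": 90,   # lower volume: a longer window keeps the pool large enough
}


def eligible_for_review(modality, comparison_date, current_date, limits=MAX_INTERVAL_DAYS):
    """Return True if the comparison exam is recent enough to be peer reviewed."""
    limit = limits.get(modality)
    if limit is None:
        return False  # modalities without a defined window are excluded
    interval = (current_date - comparison_date).days
    return 0 <= interval <= limit
```

Keeping the limits in a table rather than hard-coding a single cutoff lets each window be adjusted as volumes change without touching the selection logic.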

Practice Auditing

Practice auditing is another method of performing retrospective peer review in radiology. In this method, the image interpretation is compared with a reference standard such as pathologic data, operative findings, or clinical follow-up. The growth of electronic health records (EHRs) has made such comparative data easier to access, facilitating this type of case review. Advantages of practice auditing include incorporating some objective data into the peer-review process and improving performance through correlation of imaging findings with outcomes or with operative or pathologic findings. Furthermore, practice auditing encourages radiologists to make greater use of the EHR as part of professional practice. Upfront agreement on the types of imaging studies, disease categories, and subspecialties to target is essential for successful implementation, and reliable comparative data such as pathology reports and operative notes must be easily accessible.
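The core comparison in a practice audit can be sketched as a two-by-two tally of imaging results against the reference standard. This is a minimal illustration, assuming each audited case has already been reduced to a hypothetical `AuditCase` record with a positive/negative imaging call and a positive/negative reference result (from pathology, surgery, or follow-up). It reports sensitivity, specificity, positive predictive value, and the discordant cases that would go back to the interpreting radiologist for review. Only cases that have a reference standard can enter the tally, so misses in patients who never undergo further evaluation are invisible to it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AuditCase:
    accession: str
    imaging_positive: bool    # radiologist reported the target finding
    reference_positive: bool  # reference standard: pathology, surgery, or follow-up


def audit(cases):
    """Summarize agreement between imaging reports and a reference standard."""
    tp = sum(c.imaging_positive and c.reference_positive for c in cases)
    fp = sum(c.imaging_positive and not c.reference_positive for c in cases)
    fn = sum(not c.imaging_positive and c.reference_positive for c in cases)
    tn = sum(not c.imaging_positive and not c.reference_positive for c in cases)

    def ratio(num, den):
        return num / den if den else None  # undefined when there are no cases

    return {
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "ppv": ratio(tp, tp + fp),
        # Cases where imaging and the reference standard disagree, for feedback.
        "discordant": [c.accession for c in cases
                       if c.imaging_positive != c.reference_positive],
    }
```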

One major deficiency of practice auditing is the limited scope of cases that can be reviewed. For example, the overwhelming majority of small lung nodules are benign, so failure to detect a small nodule on chest CT may go unnoticed if the patient does not undergo further evaluation. Similarly, an overlooked subsegmental pulmonary embolism may cause no adverse clinical outcome and would therefore not be identified by this form of peer review. A second deficiency of practice auditing is that the defined “reference standard” is assumed to be accurate. Comparing neck CT angiography results with conventional angiography would seem to be a useful benchmark for a practice audit. However, subtle abnormalities can be overlooked on conventional angiography, and because conventional angiographic images are two-dimensional, lesion severity can be over- or underestimated. Another example is distinguishing between restrictive and constrictive cardiac physiology: echocardiography, angiography, or clinical evaluation may lead to a diagnosis of restrictive cardiomyopathy, whereas cardiac magnetic resonance imaging (MRI) clearly shows constrictive pericarditis.14
