Digital Imaging Characteristics

Objectives

On completion of this chapter, you should be able to:

• Differentiate between analog and digital images.

• Define pixel and image matrix and characteristics of each.

• Relate pixel size, matrix size, and field of view (FOV) to each other.

• Discriminate between standard units of measure for exposure indicators.

• Discuss the differences between spatial resolution and contrast resolution.

Key Terms

Air kerma

Analog

Brightness

Contrast resolution

Detective quantum efficiency (DQE)

Deviation index (DI)

Digital

Dynamic range

Field of view (FOV)

Indicated equivalent air kerma (KIND)

Latitude

Matrix

Modulation transfer function (MTF)

Noise

Noise power spectrum (NPS)

Pixel

Pixel bit depth

Signal-to-noise ratio (SNR)

Spatial resolution

Standardized radiation exposure (KSTD)

Target equivalent air kerma value (KTGT)

In medical imaging, there are two types of images: analog and digital. Analog images are the kind most familiar to us, such as paintings and printed photographs. What we see in these images are continuous variations in brightness and color; the image is not broken into individual pieces. Digital images are recorded as numeric values and are divided into an array of small elements that can be processed in many different ways.

Analog Images Versus Digital Images

When we talk about digitizing a signal from a digital radiographic unit, we mean assigning a numerical value to each signal point, whether that point originates as an electrical impulse or a light photon. As humans, we experience the world through analog vision. We see our surroundings as infinitely smooth gradients of shapes and colors. Analog refers to a device or system that captures or measures a continuously changing signal. In other words, the analog signal wave is recorded or used in its original form. A typical analog device is a watch whose hands move continuously around the face and can therefore indicate every possible time of day. In contrast, a digital clock can represent only a finite number of times (every tenth of a second, for example).

Traditionally, radiographic images were formed in an analog fashion. A cassette containing fluorescent screens and film sensitive to the light produced by the screens was exposed to radiation, and the film was then processed in chemical solutions. Today, images can be produced through a digital system that uses detectors to convert the x-ray beam into a digital image.

In an analog system such as film/screen radiography, x-ray energy is converted to light, and the light waves are recorded just as they are. In digital radiography, analog signals are converted into numbers that are recorded. Digital images are formed through multiple samplings of the signal rather than the one single exposure of an analog image.
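The conversion of an analog signal into recorded numbers can be illustrated with a minimal sketch. It is not how any particular detector or vendor implements digitization; the sine-wave "signal", the 0-to-1 signal range, the 3-bit depth, and the function name `quantize` are all assumptions chosen for illustration.

```python
import math


def quantize(value, bit_depth, v_min=0.0, v_max=1.0):
    """Map a continuous value in [v_min, v_max] to a whole-number gray level."""
    levels = 2 ** bit_depth
    # Clamp the value to the allowed range, then scale it to 0 .. levels - 1.
    clamped = min(max(value, v_min), v_max)
    fraction = (clamped - v_min) / (v_max - v_min)
    return min(int(fraction * levels), levels - 1)


# Hypothetical "analog" signal: a smooth sine wave sampled at 8 positions.
samples = [0.5 + 0.5 * math.sin(2 * math.pi * i / 8) for i in range(8)]

# Each sample becomes one of only 2**3 = 8 possible whole-number values.
digital = [quantize(s, bit_depth=3) for s in samples]

for analog_value, digital_value in zip(samples, digital):
    print(f"analog {analog_value:.4f} -> digital {digital_value}")
```

The continuous values on the left can take any value in the range, while the recorded values on the right are restricted to whole numbers, much like the digital clock described earlier.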

Characteristics of a Digital Image

A digital image begins as an analog signal. Through computer data processing, the image becomes digitized and is sampled multiple times. The critical characteristics of a digital image are spatial resolution, contrast resolution, noise, and dose efficiency (of the receptor); however, to fully grasp how a digital image is formed, an understanding of its basic components is necessary.

Pixel

A pixel, or picture element, is the smallest element in a digital image. If you have ever magnified a digital picture to the point that you see the image as small squares of color, you have seen pixels (Figure 2-1).

Spatially, the digital image is separated into pixels, with discrete (whole numbers only) values. The process of associating the pixels with discrete values defines maximum contrast resolution.

Pixel Size.

The size of the pixel is inversely related to the amount of spatial resolution or detail in the image: the smaller the pixel is, the greater the detail. Pixel size may change when the size of the matrix or the FOV changes.

Pixel Bit Depth.

Each pixel contains pieces, or bits, of information. The number of bits within a pixel is known as pixel bit depth. If a pixel has a bit depth of 8, then the number of gray tones that pixel can produce is 2 to the power of the bit depth, or 2⁸, or 256 shades of gray. Some digital systems have bit depths of 10 to 16, resulting in more shades of gray. Depending on bit depth, the number of gray levels available to each pixel can range from 1 (2⁰) to 65,536 (2¹⁶). The gray level will be a factor in determining the image contrast resolution.
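The arithmetic behind bit depth can be checked with a short sketch. The function name `gray_levels` is hypothetical, and the list of bit depths simply spans the 8-bit example and the 10-to-16-bit range mentioned above.

```python
def gray_levels(bit_depth):
    """Number of distinct gray levels a pixel of the given bit depth can hold."""
    return 2 ** bit_depth


for bits in (8, 10, 12, 14, 16):
    levels = gray_levels(bits)
    # Stored values conventionally run from 0 up to levels - 1.
    print(f"{bits:>2}-bit pixel: {levels:>6,} gray levels (values 0 to {levels - 1:,})")
```

Each additional bit doubles the number of available gray levels, which is why moving from 8 to 16 bits raises the count from 256 to 65,536.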

Matrix

A matrix is a square arrangement of numbers in columns and rows, and in digital imaging, the numbers correspond to discrete pixel values. Each box within the matrix corresponds to a specific location in the image and to a specific area of the patient’s tissue. The image is digitized both by position (spatial location) and by intensity (gray level). The typical number of pixels in a matrix ranges from about 512 × 512 to 1024 × 1024 and can be as large as 2500 × 2500. For a given image area, the size of the matrix determines the size of the pixels; for a given matrix, the image area does. For example, if you have a 10 × 12 inch and a 14 × 17 inch computed radiography (CR) cassette and both have a 512 × 512 matrix, then the 10 × 12 inch cassette will have smaller pixels.

Field of View

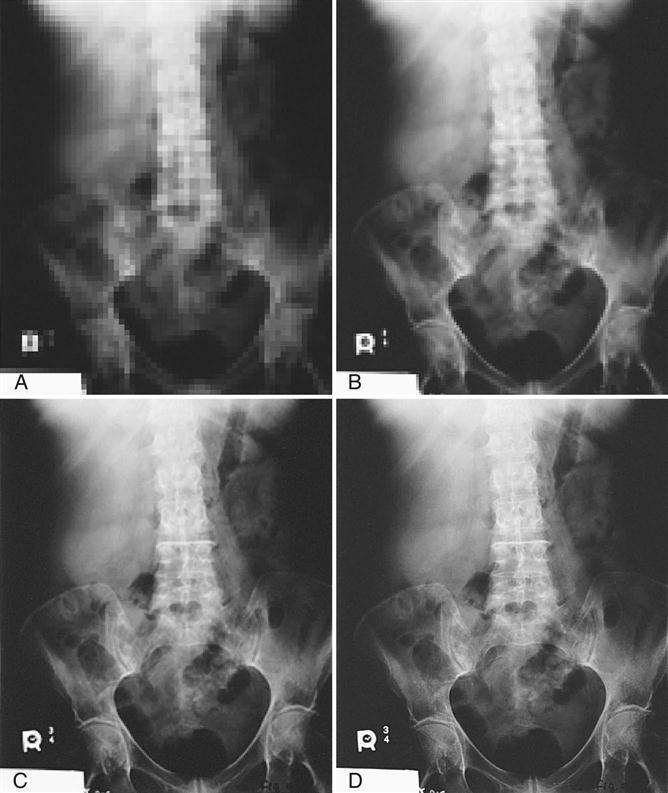

The term field of view, or FOV, is synonymous with the x-ray field. In other words, it is the amount of body part or patient included in the image. The larger the FOV, the more area is imaged. Changes in the FOV will not affect the size of the matrix; however, changes in the matrix will affect pixel size. This is because as the matrix increases (e.g., from 512 × 512 to 1024 × 1024) and the FOV remains the same size, the pixel size must decrease to fit into the matrix (Figure 2-2).

Pixel Size, Matrix Size, and FOV

A relationship exists between the size of the pixel, the size of the matrix, and the FOV. The matrix size can be changed without affecting the FOV, and the FOV can be changed without affecting the matrix size, but a change in either the matrix size or the FOV changes the size of the pixels.
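This relationship can be sketched numerically. The example below assumes square pixels, considers a single dimension, and treats the FOV as equal to the short side of the cassette; these simplifications, the function name `pixel_size_mm`, and the inch-to-millimeter conversion are illustrative assumptions rather than a description of any particular system.

```python
MM_PER_INCH = 25.4


def pixel_size_mm(fov_mm, matrix_size):
    """Pixel width along one dimension: the FOV divided by the number of pixels."""
    return fov_mm / matrix_size


# Same 512 matrix, different FOV (short side of a 10 x 12 and a 14 x 17 inch cassette).
small_fov = pixel_size_mm(10 * MM_PER_INCH, 512)
large_fov = pixel_size_mm(14 * MM_PER_INCH, 512)
print(f"10 in FOV, 512 matrix:  {small_fov:.3f} mm per pixel")
print(f"14 in FOV, 512 matrix:  {large_fov:.3f} mm per pixel")

# Same FOV, larger matrix: the pixels must shrink to fit.
fine_matrix = pixel_size_mm(10 * MM_PER_INCH, 1024)
print(f"10 in FOV, 1024 matrix: {fine_matrix:.3f} mm per pixel")
```

Under these assumptions, enlarging the FOV with a fixed matrix makes each pixel larger, while doubling the matrix with a fixed FOV halves the pixel width.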

Exposure Indicators
