Chapter 3 Image Registration and Fusion Techniques

Introduction

The desire to base decisions on the most complete information available motivates the incorporation of information from more than one image study into another image study. Typically, image registration and fusion are required in remote-sensing applications (weather forecasting and integrating information into geographic information systems [GIS]), in radiation oncology (combining computed tomography [CT] and nuclear magnetic resonance [NMR] data to obtain more complete information about the patient and to monitor tumor growth), in surgical planning, in cartography (map updating), and in other areas of computer vision.1 The terms image registration and image fusion, as well as matching, integration, correlation, and alignment, have been used interchangeably throughout the literature. The combination of multiple image datasets and integrated display of the data is referred to as image fusion. The overlay of remote-sensing data on topographic maps is an example of image fusion. Image registration is the process of spatially aligning two or more image datasets of the same scene taken at different times, from different viewpoints, and/or by different sensors.1 It geometrically aligns two images, the fixed and moving images, by establishing a coordinate transformation between the coordinates of the image spaces. Once the coordinate transformation is known, image fusion may be performed by transferring any imaging information from one dataset to another. In this chapter, only the definitions of registration and fusion given above will be used.

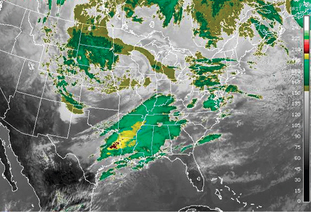

A real-world example of image registration and fusion using a weather map is shown in Figure 3-1. Meteorologists use color-enhanced imagery from satellite data to aid satellite interpretation. In Figure 3-1 the satellite imagery data have been registered and fused with the map of the United States, enabling meteorologists to see easily and quickly the location of features that are of special interest to them. Fusing the geographic information (map) with the satellite imagery is more valuable to meteorologists than interpreting each source separately. Volume-based image registration methods such as mutual information have recently been investigated for use in the field of GIS.2

(Data from National Oceanic & Atmospheric Administration available at: http://www.weather.gov/sat_tab.php?image=ir Accessed September 19, 2007.)
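Mutual information, mentioned above as a volume-based registration measure, can be illustrated with a small sketch. The Python below is a minimal illustration, not the implementation of any particular system: the function name `mutual_information`, the bin count, and the toy intensity lists are assumptions made for the example. It estimates mutual information from a joint histogram of corresponding voxel intensities.

```python
import math
from collections import Counter


def mutual_information(fixed, moving, n_bins=8, max_value=256):
    """Mutual information (in bits) of two equally sized intensity lists.

    Intensities are assumed to lie in [0, max_value) and are grouped into
    n_bins equal-width bins before the joint histogram is built.
    """
    if len(fixed) != len(moving):
        raise ValueError("images must have the same number of voxels")
    width = max_value / n_bins
    # Bin index pair for every corresponding voxel pair.
    pairs = [
        (min(int(a / width), n_bins - 1), min(int(b / width), n_bins - 1))
        for a, b in zip(fixed, moving)
    ]
    n = len(pairs)
    joint = Counter(pairs)                      # joint histogram
    count_fixed = Counter(a for a, _ in pairs)  # marginal histogram, fixed
    count_moving = Counter(b for _, b in pairs) # marginal histogram, moving
    # Sum of p(a, b) * log2(p(a, b) / (p(a) * p(b))) over occupied bins.
    return sum(
        (count / n) * math.log2(count * n / (count_fixed[a] * count_moving[b]))
        for (a, b), count in joint.items()
    )


# Toy data (hypothetical): four voxels per "image".
fixed = [0, 0, 255, 255]
inverted = [255, 255, 0, 0]    # same structure, inverted contrast
unrelated = [0, 255, 0, 255]   # intensities carry no information about fixed

mi_inverted = mutual_information(fixed, inverted, n_bins=2)
mi_unrelated = mutual_information(fixed, unrelated, n_bins=2)
```

In this toy case the contrast-inverted image still yields the maximum value possible for two equally populated bins, whereas the unrelated pattern yields zero. This insensitivity to the particular intensity mapping is what makes the measure attractive when the two images come from different sensors.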

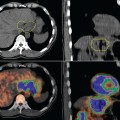

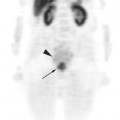

Physicians have long understood the importance of fusing information from multiple images to develop a comprehensive diagnostic overview of the patient. This practice has become even more significant with the growing number of tomographic imaging methods. Differences in image size and orientation, such as those caused by different patient positioning and motion artifacts, make it difficult to interpret similar areas of interest in multiple imaging modalities unless the images are registered. There have been a number of reviews of image registration in recent years,3–15 with Hutton and colleagues focusing on nuclear medicine image registration. This chapter focuses on image registration and fusion of positron emission tomography (PET) images with CT and magnetic resonance imaging (MRI), which allows a more accurate analysis of the functional images and facilitates anatomically based interpretation, diagnosis, and surgery planning.16–23 An overview of the various image registration methods is provided with clinical applications using commercially available systems. The objective of this chapter is to present a general overview of medical image registration relevant to nuclear medicine imaging and its application to radiation treatment planning.

Radiotherapy Imaging Systems

CT and MR Simulation

Over the past decade, many radiation oncology departments have incorporated the modern CT simulator into their treatment process. This has alleviated the need to use a conventional X-ray simulator. Commercial CT simulators are available and allow three-dimensional (3D) volumetric radiation therapy techniques to be used on a routine basis in clinical departments.24–31 CT simulation combines some of the functions of an image-based, 3D treatment planning system and a conventional simulator. A CT simulator software system re-creates the treatment machine and allows import, manipulation, display, and storage of images from CT. The CT simulation scanner table must have a flat top similar to that of radiation therapy treatment machines. Besides the flat tabletop, CT scanners used for CT simulation are usually equipped with external patient marking/positioning lasers that can be fixed or mobile.

PET and PET-CT

PET is a molecular imaging modality capable of detecting small concentrations of positron-emitting radioisotopes. Most PET scanners use curved tabletops, as opposed to the flat tabletops used for CT simulation and radiation therapy. This causes some difficulty in registering PET and CT/MR datasets, depending on the patient’s position at the time of the imaging scans. The first combined PET-CT scanner was developed to provide an automated hardware solution for co-registered anatomic and functional images.32 An accurate and precise rigid transformation for co-registering the CT and PET images is determined by carefully aligning the two scanners during installation and measuring their physical offset. This eliminates one of the major limitations of software registration approaches by providing a constant spatial transformation between PET and CT images that is known beforehand and is independent of patient positioning. The underlying assumption is that the patient does not move during the procedure. The accuracy of software-based registration methods is typically on the order of the voxel size of the modality with the lowest spatial resolution. In the case of PET and CT co-registration, this typically corresponds to 4 to 5 mm.33 In contrast, the intrinsic registration accuracy of a combined PET-CT scanner is submillimeter.
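The idea of a constant, known rigid transformation between the PET and CT coordinate systems can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names, the rotation order (about x, then y, then z), and the 600 mm table-axis offset are hypothetical and are not taken from any scanner's specification.

```python
import math


def rotation_matrix(rx, ry, rz):
    """3x3 rotation for angles in radians, applied about x, then y, then z.

    The rotation order is an assumed convention; other orders are equally
    valid as long as they are used consistently.
    """
    cx, sx = math.cos(rx), math.sin(rx)
    cy, sy = math.cos(ry), math.sin(ry)
    cz, sz = math.cos(rz), math.sin(rz)
    # Closed form of the matrix product Rz * Ry * Rx.
    return [
        [cz * cy, cz * sy * sx - sz * cx, cz * sy * cx + sz * sx],
        [sz * cy, sz * sy * sx + cz * cx, sz * sy * cx - cz * sx],
        [-sy, cy * sx, cy * cx],
    ]


def apply_rigid(rotation, translation, point):
    """Map one (x, y, z) point in mm using p' = R p + t."""
    return tuple(
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


# Hypothetical installation measurement: the two gantries are perfectly
# aligned (no rotation) and offset by 600 mm along the table axis (z).
pet_to_ct_rotation = rotation_matrix(0.0, 0.0, 0.0)
pet_to_ct_translation = (0.0, 0.0, -600.0)

pet_point = (10.0, -20.0, 650.0)
ct_point = apply_rigid(pet_to_ct_rotation, pet_to_ct_translation, pet_point)
```

Because the six parameters are measured once and do not depend on the patient, the same mapping can be reused for every study, provided the patient does not move between the two acquisitions.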

4D PET-CT

A new method of CT simulation is evolving. This is known as 4D-CT simulation (3D + time = 4D). In one method of 4D-CT simulation, retrospective gating of the CT simulation data is performed using the patient’s respiratory cycle.34 GE Medical Systems (Milwaukee, WI) and Varian Medical Systems (Palo Alto, CA) have developed a system using the RPM™ Respiratory Gating system with a multi-slice CT scanner for analyzing and incorporating intrafraction motion management using tomographic datasets. The system provides retrospective gating of the tomographic dataset by taking 3D datasets at specific time intervals to create a time-dependent 4D-CT imaging study.34 The use of this 4D imaging set allows the physician to accurately define the target and its trajectory with respect to normal anatomy and critical structures. This type of CT simulation tool can then be used with the respiratory gating system at the treatment machine to gate the beam delivery with the patient’s breathing cycle. The use of 4D-CT simulation makes it possible to acquire CT scans that provide new information on the motion of tumors and critical structures. PET gating has been performed with a camera-based patient monitoring system and has shown a volume reduction of tumors by as much as 34%.35 The same method of patient monitoring has also been used to perform gated radiotherapy of liver tumors.36 Respiratory gating is currently being developed for 4D PET-CT.
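Retrospective gating can be pictured as sorting acquired images by where they fall in the breathing cycle. The Python sketch below is a simplified illustration and not the vendors' algorithm: it assumes phase-based sorting between successive breathing peaks, and the function names, peak times, and acquisition times are all hypothetical.

```python
from bisect import bisect_right


def respiratory_phase(time_s, peak_times_s):
    """Fraction (0 to <1) of the breathing cycle elapsed at time_s.

    peak_times_s is a sorted list of times (s) at which successive breathing
    peaks were detected. Returns None if time_s is not between two peaks.
    """
    i = bisect_right(peak_times_s, time_s) - 1
    if i < 0 or i >= len(peak_times_s) - 1:
        return None
    start, end = peak_times_s[i], peak_times_s[i + 1]
    return (time_s - start) / (end - start)


def sort_into_phase_bins(image_times_s, peak_times_s, n_bins=10):
    """Group image indices by respiratory phase bin.

    Each bin collects the images acquired during the same part of the
    breathing cycle; together the bins form the time axis of a 4D dataset.
    """
    bins = [[] for _ in range(n_bins)]
    for index, time_s in enumerate(image_times_s):
        phase = respiratory_phase(time_s, peak_times_s)
        if phase is None:
            continue  # acquired outside the recorded breathing trace
        bins[min(int(phase * n_bins), n_bins - 1)].append(index)
    return bins


# Hypothetical trace: breathing peaks every 4 s, six images acquired.
peak_times = [0.0, 4.0, 8.0]
image_times = [0.5, 2.0, 3.9, 4.5, 6.0, 9.0]
phase_bins = sort_into_phase_bins(image_times, peak_times, n_bins=4)
```

Images 0 and 3 were acquired in different breathing cycles but at the same phase, so they land in the same bin; the last image falls outside the recorded trace and is discarded.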

Integrating PET Imaging into Radiation Treatment Planning

External Beam Radiotherapy Treatment Process

Beam placement and treatment design are performed using virtual simulation software.28–31 The treatment planning portion of the CT simulation process begins with target and normal structure delineation. Other imaging studies, such as secondary CT, MR, or PET, may be registered to the CT simulation scan to provide information for improved target or normal structure delineation. After delineation of the target volumes, a treatment plan is created for delivering the desired prescription using a combination of various beam orientations and shapes. At the treatment machine, the patient is set up according to the treatment plan.

Scenario 3: Diagnostic PET-CT Procedure Followed by CT Simulation. The patient receives a diagnostic PET-CT procedure before the CT simulation procedure. This is performed using diagnostic imaging protocols such as curved tabletops and different patient positioning. After coming to the radiation therapy department, a custom immobilization device is created, and the CT simulation procedure is performed to acquire the treatment planning CT dataset. The PET-CT images are then registered to the planning CT images. Accuracy is difficult to achieve since the patient is scanned in different positions in each system. This is not ideal since many rigid image registration methods fail to correctly register the images. Deformable image registration methods may be more appropriate to warp or stretch the PET-CT images to match the planning CT images. However, at the present time, no commercial imaging or treatment planning vendor provides a deformable image registration algorithm.

From the above scenarios it is clear that there are challenges with scenarios 2 and 3 that limit the accuracy of the image registration algorithms for multi-modality images. One problem is the degree of similarity of the patient’s position and shape during the imaging acquisitions.37 The other issue is the difference in time between acquisitions of the image datasets. When scans are performed in the thorax and abdomen region, motion artifacts present a problem when registering PET-CT images with planning CT images. Until non-rigid image registration methods are commercially available, this will continue to be a problem. The use of 4D PET-CT may address the motion artifacts caused by respiration. Currently, scenarios 1 and 2 should be used in the clinical environment since it is important to image the patient in a treatment immobilization device for accurate image registration.

DICOM

The communication system used for transmitting, converting, and associating medical imaging data is the Digital Imaging and Communications in Medicine (DICOM) Standard.38 This standard describes the methods of formatting and exchanging images and associated information. DICOM relies on industry standard network connections and effectively addresses the communication of digital images such as CT, MR, and PET and radiotherapy (RT) objects. Over the past 5 years, an extension to DICOM has been developed for RT objects and is referred to as DICOM-RT. This extension handles the technical data objects in radiation oncology such as anatomical contours, DRR images, treatment planning data, and dose distribution data. Many CT simulator and treatment planning vendors have begun to adopt DICOM-RT for ensuring a cost-effective solution for sharing technical data in a radiation oncology department. The DICOM-RT objects used for CT simulation are as follows:

Image Registration Methods

Many image registration methods have been implemented based on either geometrical features (point-like anatomic features or surfaces)16,19–22,39,40 or intensity similarity measures (mutual information).41,42 The aim of registration is to establish an exact point-to-point correspondence (coordinate system transformation) between the voxels of the different modalities, making direct comparison possible. Transformations are either rigid or non-rigid (sometimes called curved or elastic). A rigid coordinate transformation allows only translations in three orthogonal directions and rotations about three axes. Non-rigid transformations are more complex, adding non-linear scaling or warping of one dataset to the rotations and translations. The image registration procedure is a three-step process, which includes: