Chapter 12 Networking, data and image handling and computing in radiotherapy

Introduction

Computing in radiotherapy has developed as computers have grown more powerful. In 1965, Gordon Moore, co-founder of the computing giant Intel, predicted that microchip complexity would increase exponentially for at least 10 years [1]. In fact, Moore’s law still holds today, with processors doubling in complexity approximately every 2 years. Moore’s law typifies the growth in all aspects of computing. Hard disk drive capacity nearly doubles each year while the cost per gigabyte nearly halves [2].

This growth has consequences for radiotherapy. The useful lifetime of any item of computer software or hardware is fairly short. In the NHS, a lifetime of 5 years is used for IT equipment, compared to 10 years for a linear accelerator [3]. This growth also needs to be considered when planning for long-term needs. Buying 5 years’ worth of data storage immediately costs more than buying half now and the other half in 2 years’ time, when the price per gigabyte will have fallen to roughly a quarter of today’s.
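The arithmetic behind this storage example can be sketched in a few lines of Python. This is an illustration only: it assumes the price per gigabyte halves exactly once a year (the text says "nearly"), and the function name `relative_cost` and its parameters are hypothetical.

```python
def relative_cost(purchases, halving_period_years=1.0):
    """Total cost of a purchase plan, in units of today's price for the full capacity.

    purchases: list of (years_from_now, fraction_of_total_capacity) pairs.
    halving_period_years: ASSUMED time for the price per gigabyte to halve.
    """
    return sum(
        fraction * 0.5 ** (years / halving_period_years)
        for years, fraction in purchases
    )


# Plan 1: buy all of the storage today.
all_now = relative_cost([(0, 1.0)])

# Plan 2: buy half today and half in 2 years, when the price has fallen to a quarter.
split = relative_cost([(0, 0.5), (2, 0.5)])

print(all_now, split)  # 1.0 0.625
```

Under these assumptions the split purchase costs 62.5% of the immediate purchase. A slower price decline narrows the gap but does not reverse it.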

Benefits and hazards of computerization

The more advanced techniques that we practise would not be possible at all without the assistance of computers. Intensity modulated radiotherapy would be totally unfeasible without computers or data communication. Even simple techniques are enhanced by the use of computers. If data are transferred directly between computers there is less chance of human error. The use of record and verify systems has certainly reduced the number of random human errors at the point of treatment delivery [4].

As systems become more complicated, they are harder to check for errors. It should never be assumed that the computer is correct. Furthermore, computers do not remove the risk of human error altogether. Proper use of a system relies on operators performing tasks correctly. If they do not, incorrect information can be passed down the line and used at a later stage. Where errors do occur in computerized systems, they may affect a series of patients, or a single patient for every fraction of their treatment [5].

Networking

Networks are commonly described as having a number of layers. In one of the simpler descriptions, the TCP/IP model, four layers are described [6]. In order to understand these layers, we draw a comparison with the postal system (Table 12.1).

Table 12.1 The four network layers of the TCP/IP model

| Layer | Role in a computer network | Postal equivalent |
|---|---|---|
| Link | The infrastructure of cables, switches, modems and so on which allow information to be moved from one place to another | The infrastructure of vehicles and sorting offices which allow post to be moved around |
| Network | The addressing system which allows a single computer to be identified and for data to be routed to it | The addressing system which allows post to be directed to the correct destination |
| Transport | The process of packing the data and sending it into the network. Also the process of receiving data from the network | Taking letters to the post office to send and picking up received letters |
| Application | Defining the data to be sent and interpreting any data received | Writing and reading your post |
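
The way the four layers wrap one another can be sketched as a toy Python example. It is not a real network stack: the dictionaries stand in for the headers that real protocols add, and the names `send` and `receive`, the address and the port number are all hypothetical.

```python
def send(message, dest_ip, dest_port):
    """Wrap a message layer by layer, like a letter going into the post."""
    # Application layer: define the data to be sent (writing the letter).
    payload = message.encode("utf-8")
    # Transport layer: pack the data for sending (taking it to the post office).
    segment = {"port": dest_port, "data": payload}
    # Network layer: add the destination address (addressing the envelope).
    packet = {"dst": dest_ip, "segment": segment}
    # Link layer: hand over to the physical infrastructure (vans and sorting offices).
    frame = {"medium": "cable", "packet": packet}
    return frame


def receive(frame):
    """Unwrap the layers in reverse order and interpret the data."""
    segment = frame["packet"]["segment"]
    return segment["data"].decode("utf-8")


frame = send("hello", "10.0.0.5", 5000)
print(receive(frame))  # hello
```

Each layer only looks at its own wrapper, which is why the application does not need to know what kind of cable carries the data.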

Link layer: physical infrastructure

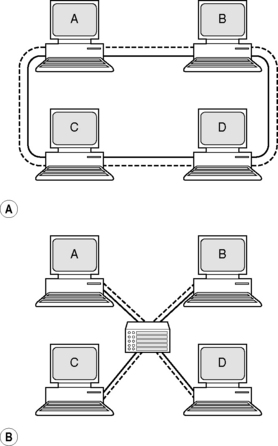

Two computers can be connected by a single cable. But what if four computers need to be connected? It is possible to connect them via a network loop so that a packet of data sent by A is visible to B, C and D, but will only be picked up by the computer it is intended for. The problem with this is that it would be impossible for A and B to communicate at exactly the same time as C and D. The solution is to have each computer connected to a central network switch or hub. The switch only makes connections that are in use and prevents network traffic on one part of a network affecting another part (Figure 12.1).
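
The difference between the shared loop and the switch can be illustrated with a small counting exercise in Python. This is a simplified model, not a simulation of real hardware: it counts how many packets each computer has to look at under each arrangement, and both function names are hypothetical.

```python
def traffic_on_shared_loop(packets, hosts):
    """On a shared loop every other computer sees every packet."""
    seen = {host: 0 for host in hosts}
    for source, _destination in packets:
        for host in hosts:
            if host != source:
                seen[host] += 1
    return seen


def traffic_via_switch(packets, hosts):
    """A switch passes each packet only to the computer it is addressed to."""
    seen = {host: 0 for host in hosts}
    for _source, destination in packets:
        seen[destination] += 1
    return seen


hosts = ["A", "B", "C", "D"]
packets = [("A", "B"), ("C", "D")]  # A talks to B while C talks to D

print(traffic_on_shared_loop(packets, hosts))  # {'A': 1, 'B': 2, 'C': 1, 'D': 2}
print(traffic_via_switch(packets, hosts))      # {'A': 0, 'B': 1, 'C': 0, 'D': 1}
```

In the switched case the two conversations never touch the same link, which is what allows them to happen at the same time.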

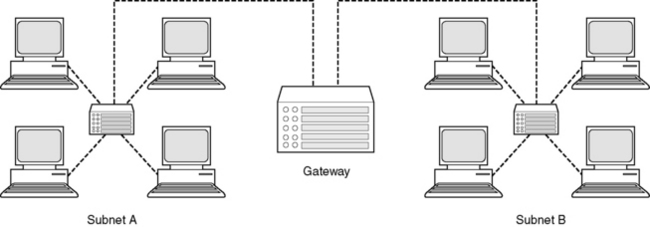

In a very large network, such as a hospital, there will be a large number of such switches, controlling and routing the flow of data in the hospital. In such a large network, the computers may be organized into subnetworks, or subnets. Access between subnets is controlled by ‘gateways’ which can prevent unauthorized access between areas (Figure 12.2).
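
Python's standard `ipaddress` module can be used to sketch how subnet membership is decided. The subnet ranges and the function name `needs_gateway` below are invented for illustration. A real hospital network would have its own addressing plan, and its gateways would apply access rules beyond this simple membership test.

```python
import ipaddress

# Hypothetical subnets, chosen only for this example.
subnets = [
    ipaddress.ip_network("10.20.1.0/24"),  # e.g. treatment planning workstations
    ipaddress.ip_network("10.20.2.0/24"),  # e.g. treatment machine consoles
]


def needs_gateway(source, destination, subnets):
    """Return True when the two addresses do not share a subnet."""
    source_ip = ipaddress.ip_address(source)
    destination_ip = ipaddress.ip_address(destination)
    for subnet in subnets:
        if source_ip in subnet and destination_ip in subnet:
            return False  # same subnet: traffic stays local
    return True  # different subnets: traffic must pass through a gateway


print(needs_gateway("10.20.1.15", "10.20.1.40", subnets))  # False
print(needs_gateway("10.20.1.15", "10.20.2.7", subnets))   # True
```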

Network layer: addressing

When you type ‘www.estro.be’ into a web browser, how does your computer connect to the computer that holds the homepage of the European Society for Radiotherapy and Oncology (ESTRO)? Each domain name is registered to a computer address called an IP address, which in its most common form (IPv4) consists of four numbers, each between 0 and 255. Your computer first sends a request to a directory (the Domain Name System, or DNS) to find the IP address registered for that name. It then sends a request to that IP address to retrieve the web page.
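
The directory lookup step can be sketched in Python. `socket.gethostbyname` is the standard library call that asks the system's resolver for an IPv4 address. The wrapper `lookup` and the stand-in directory are hypothetical, and the address 192.0.2.10 comes from a range reserved for documentation, so it is not a real server.

```python
import socket


def lookup(hostname, resolver=socket.gethostbyname):
    """Ask a directory for the IP address of a name and split it into its four numbers."""
    ip_address = resolver(hostname)
    numbers = [int(part) for part in ip_address.split(".")]
    return ip_address, numbers


# A stand-in directory so the example runs without a network connection.
fake_directory = {"www.example.org": "192.0.2.10"}

ip_address, numbers = lookup("www.example.org", resolver=fake_directory.__getitem__)
print(ip_address, numbers)  # 192.0.2.10 [192, 0, 2, 10]
```

On a computer with Internet access, calling `lookup("www.estro.be")` with the default resolver would query the real directory. The address returned depends on where the site is hosted at the time.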

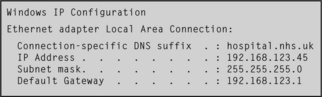

Inside a private network, such as a hospital, computers are still referenced using an IP address. Special ranges of numbers are reserved for use in private networks to avoid confusion with Internet traffic. On many Windows computers, it is possible to see the network configuration by typing the command ‘ipconfig’ at a command prompt. If you do this, and your computer is attached to a network, you will see something like that shown in Figure 12.3.
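
As an illustration of the reserved ranges, the following Python sketch checks whether an IPv4 address falls inside one of the three blocks set aside for private networks (10.0.0.0/8, 172.16.0.0/12 and 192.168.0.0/16). The function name `is_private_range` and the sample addresses are chosen only for this example.

```python
import ipaddress

# The three IPv4 blocks reserved for private networks.
PRIVATE_BLOCKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def is_private_range(address):
    """Return True if the address lies in one of the reserved private blocks."""
    ip = ipaddress.ip_address(address)
    return any(ip in block for block in PRIVATE_BLOCKS)


print(is_private_range("192.168.1.10"))  # True
print(is_private_range("172.32.0.1"))    # False (just outside 172.16.0.0/12)
print(is_private_range("8.8.8.8"))       # False
```

An address reported by ‘ipconfig’ on a hospital workstation would normally be expected to fall inside one of these blocks, although this depends on how the local network has been set up.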
